How Has the Field Changed?

LearnLab and its researchers were critical in creating the new field of “educational data mining”. The center’s role is detailed further below. One of the most highly cited center publications is a summary of the state of educational data mining (Baker & Yacef, 2009; cited by 590), which is the lead piece in the first issue of the Journal of Educational Data Mining.

We have made in vivo experimentation a common practice. These in vivo experiments have a distinct set of features that differentiate them from laboratory experiments: they do not require participant recruitment and scheduling, they are of longer, more realistic duration (many hours or days rather than many minutes), and they gather data from students engaged in genuine academic work (real courses rather than arbitrary lab tasks). We facilitated over 360 such experiments as part of the center (e.g., Liu, Wang, Perfetti, Brubaker, Wu & MacWhinney, 2011; Frishkoff, White, & Perfetti, 2009; Hausmann, Nokes, VanLehn, & Gershman, 2009; Salden, Koedinger, Renkl, Aleven, & McLaren, 2010; Schwonke, Renkl, Krieg, Wittwer, Aleven, & Salden, 2009; Wylie, Koedinger, & Mitamura, 2009). We have influenced others in employing in vivo experimentation (e.g., Carvalho et al., 2016; Chen et al., 2016) and in creating other infrastructures to support it (see ASSISTments; Heffernan & Heffernan, 2014).

LearnLab produced multiple demonstrations that fine-grained educational technology data streams (e.g., 10-second interactions recorded over weeks) can be used to build accurate and useful models that represent discoveries about human performance, learning, and engagement. These data streams have been used to discover models that accurately (R=.82) predict standardized test results (Feng, Heffernan, & Koedinger, 2009; cited by 111), that capture engagement and predict learning outcomes (e.g., Baker et al., 2006, cited by 175; 2008b, cited by 151), and that automate discovery of cognitive models that decompose course content into core skills and concepts (Koedinger, McLaughlin, & Stamper, 2012).

LearnLab’s DataShop was the first and remains the largest open repository and web-based tool suite for storing and analyzing fine-grained and longitudinal “click-stream” data generated by online courses, assessments, intelligent tutoring systems, virtual labs, simulations, games, and other forms of educational technology (Koedinger et al., 2010, cited by 208; see learnlab.org/datashop). In addition to facilitating the storage and analysis of data by primary researchers, DataShop also supports subsequent collaborative or independent secondary analysis by augmented research teams. We name just a few examples out of the hundreds of issues investigated through secondary analyses of DataShop datasets: (a) models of student engagement and affect (Baker, D’Mello, Rodrigo, & Graesser, 2010, cited by 278; Baker et al., 2008b, cited by 151), (b) structured time-series models (Kowalski, Zhang & Gordon, 2014), (c) discovering better cognitive models (e.g., Cen, Koedinger, & Junker, 2006, cited by 191), (d) designing better tutors (e.g., Liu, Koedinger & McLaughlin, 2014), and (e) integrating representation learning and skill learning in a human-like intelligent agent (Li, Matsuda, Cohen & Koedinger, 2015).

Through LearnLab’s social and technical infrastructure development, particularly LearnLab courses and DataShop, we have demonstrated the value of, and have been a stalwart champion for the acceptance of, evidence-based research in developing technology-enhanced learning experiments. Further, we have shown the value of these technologies as research platforms that support experimental work and provide fine-grained longitudinal data sets that are being used toward unlocking the mysteries of human learning. We have also demonstrated a new synergy between research and instructional development, establishing unique collaborations between laboratory scientists, instructional designers, and course instructors that have led to novel learning science, advances in research methods, and unique training of future scientists.

What Is New That Could Not be Done Before LearnLab?

As a consequence of LearnLab methods, social support, and tools, it is now much easier to collect tightly controlled experimental data with real students in real courses and at a level of detail, rigor, scale, and theoretical richness that was never possible before. One important consequence is that we are now much better able to test the external validity or generalizability of principles of learning and instruction across a wide variety of contexts, including variations in course content and student characteristics (cf., Koedinger & Aleven, 2007, cited by 335; Koedinger, Corbett & Perfetti, 2012, cited by 147).

We can now test theories of learning across many of the 850+ openly available learning datasets in DataShop. Research on the psychology of learning has historically either relied only on comparing outcome measures under different learning conditions or, when focused on process, has relied on changes in reaction times or latency in correct performance. Increases in the speed of correct performance are an important part of the tail end of the learning process, but the more fundamental question of learning is the process of a student going from being unable, inaccurate, or inflexible to being able, accurate, and flexible. Researchers are now able to use the hundreds of datasets in DataShop to unlock the mysteries of how humans are able to learn complex academic subjects (e.g., math, science, second language) and go from being novices to being experts.

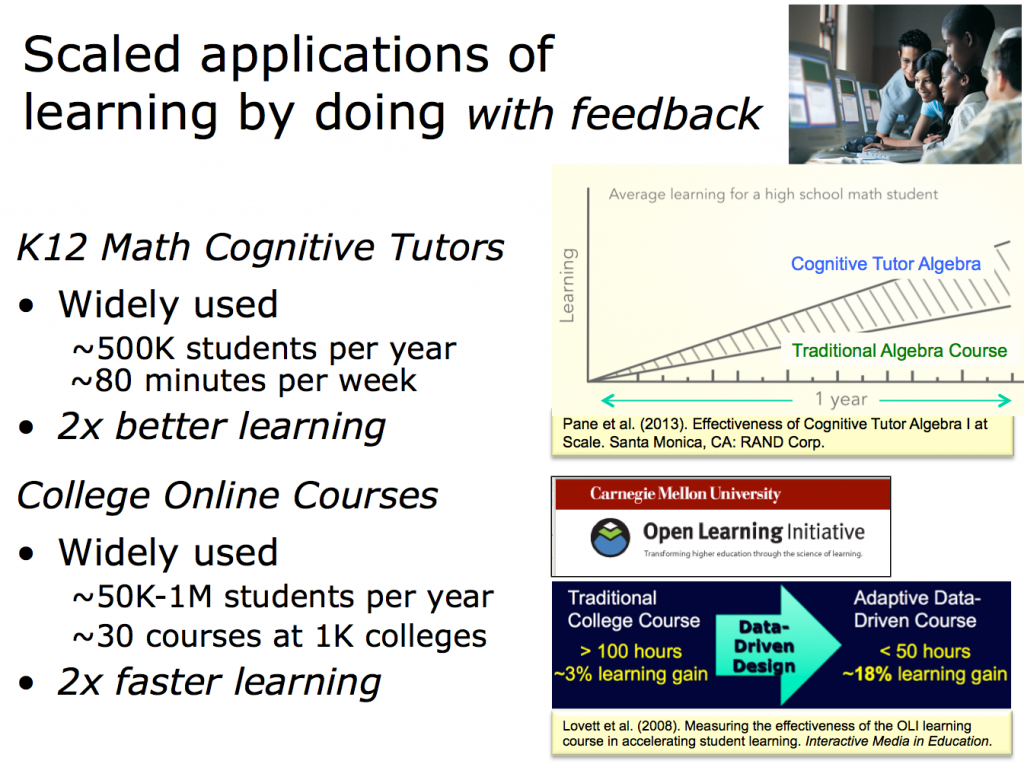

Educational technology is having a much greater impact on student learning (e.g., Pane, Griffin, McCaffrey, & Karam, 2014; Lovett, Meyer, & Thille, 2008, cited by 107) than it did at the start of the center (see Figure 2), though challenges remain in getting course developers to adopt the best of learning science (cf., Koedinger & Aleven, 2016).

Figure 2. LearnLab research contributes to course improvements that not only have broad impact on large numbers of students, but also have deep and lasting impact by improving how much students learn and reducing the time required.