
== The Effects of Interaction on Robust Learning == | == The Effects of Interaction on Robust Learning == | ||

''Robert Hausmann and Kurt VanLehn'' | ''Robert Hausmann and Kurt VanLehn'' | ||

| + | |||

| + | === Summary Table === | ||

| + | {| border="1" cellspacing="0" cellpadding="5" style="text-align: left;" | ||

| + | | '''PIs''' || Robert G.M. Hausmann & Kurt VanLehn | ||

| + | |- | ||

| + | | '''Other Contributers''' || Robert N. Shelby (USNA), Brett van de Sande (Pitt) | ||

| + | |- | ||

| + | | '''Study Start Date''' || Sept. 1, 2006 | ||

| + | |- | ||

| + | | '''Study End Date''' || Aug. 31, 2007 | ||

| + | |- | ||

| + | | '''LearnLab Site''' || none (''in vitro'' study) | ||

| + | |- | ||

| + | | '''LearnLab Course''' || Physics | ||

| + | |- | ||

| + | | '''Number of Students''' || ''N'' = 39 | ||

| + | |- | ||

| + | | '''Total Participant Hours''' || 78 hrs. | ||

| + | |- | ||

| + | | '''DataShop''' || Loaded: 11/02/07 | ||

| + | |} | ||

| + | <br> | ||

=== Abstract === | === Abstract === | ||

| − | It is widely assumed that an interactive learning resource is more effective in producing learning gains than non-interactive [[sources]]. It turns out, however, that this assumption may not be completely accurate. For instance, research on human tutoring suggests that human tutoring (i.e., interactive) is just as effective as reading a textbook (i.e., non-interactive) under very particular circumstances (VanLehn et al., 2007). This | + | It is widely assumed that an interactive learning resource is more effective in producing learning gains than non-interactive [[sources]]. It turns out, however, that this assumption may not be completely accurate. For instance, research on human tutoring suggests that human tutoring (i.e., interactive) is just as effective as reading a textbook (i.e., non-interactive) under very particular circumstances (VanLehn et al., 2007). This raises the question, under which conditions should we expect to observe strong learning gains from interactive learning situations? |

The current project seeks to address this question by contrasting interactive learning (i.e., jointly constructing explanations) with non-interactive learning (i.e., individually constructing explanations). Students were prompted to either self-explain in the singleton condition or to jointly construct explanations in the dyad condition. | The current project seeks to address this question by contrasting interactive learning (i.e., jointly constructing explanations) with non-interactive learning (i.e., individually constructing explanations). Students were prompted to either self-explain in the singleton condition or to jointly construct explanations in the dyad condition. | ||

=== Background and Significance === | === Background and Significance === | ||

| − | Several studies on collaborative learning have shown that it is more effective in producing learning gains than learning the same material alone. This finding has been replicated in many different configurations of students and across several different domains. Once the effect was established, the field moved into a more interesting phase, which was to accurately describe the interactions themselves and their impact on student learning (Dillenbourg, 1999). One of the hot topics in collaborative research is on the "co-construction" of new knowledge. Co-construction has been defined in many different ways. Therefore, the present study limits the scope of co-constructed ideas to jointly constructed | + | Several studies on collaborative learning have shown that it is more effective in producing learning gains than learning the same material alone. This finding has been replicated in many different configurations of students and across several different domains. Once the effect was established, the field moved into a more interesting phase, which was to accurately describe the interactions themselves and their impact on student learning (Dillenbourg, 1999). One of the hot topics in collaborative research is on the "co-construction" of new knowledge. Co-construction has been defined in many different ways. Therefore, the present study limits the scope of co-constructed ideas to [[jointly constructed explanation]]s. |

| − | Evidence supporting jointly constructed | + | Evidence supporting [[jointly constructed explanation]]s is sparse, but can be found in a study by McGregor and Chi (2002). They found that collaborative peers are able to not only jointly constructed ideas, but they will also reuse the ideas in a later problem-solving session. One of the limitations of their study was that it did not measure the impact of jointly constructed ideas on robust learning. In a related study, Hausmann, Chi, and Roy (2004) found correlational evidence for learning from co-construction. To provide more stringent evidence for the impact of jointly constructed explanations, the present study will manipulate the types of conversations dyads have by [[prompting]] for [[jointly constructed explanation]]s and measuring the effect on robust learning. |

=== Glossary === | === Glossary === | ||

| − | [[Jointly constructed explanation]] | + | * [[Jointly constructed explanation]] |

| − | [[Prompting]] | + | * [[Prompting]] |

See [[:Category:Hausmann_Study2|Hausmann_Study2 Glossary]] | See [[:Category:Hausmann_Study2|Hausmann_Study2 Glossary]] | ||

=== Research question === | === Research question === | ||

| − | How is robust learning affected by self-explanation vs. jointly constructed | + | How is [[robust learning]] affected by [[self-explanation]] vs. [[jointly constructed explanation]]s? |

=== Independent variables === | === Independent variables === | ||

| − | + | Only one independent variable, with two levels, was used: | |

| − | * | + | * Explanation-construction: individually constructed explanations vs. jointly constructed explanations |

| − | + | ||

| + | [[Prompting]] for an explanation was intended to increase the probability that the individual or dyad will traverse a useful learning-event path. | ||

| − | + | <b>Figure 1</b>. An example from the Individual Self-explanation condition<Br><Br> | |

| + | [[Image:Individual_SE.JPG]]<Br> | ||

| − | + | <b>Figure 2</b>. An example from the Joint Self-explanation condition<Br><Br> | |

| + | [[Image:Joint_SE.JPG]]<Br> | ||

=== Hypothesis === | === Hypothesis === | ||

| − | The Interactive Hypothesis: collaborative peers will learn more than the individual learners because they benefit from the process of negotiating meaning with a peer, of appropriating part of the peers’ perspective, of building and maintaining common ground, and of articulating their knowledge and clarifying it when the peer misunderstands. In terms of the Interactive Communication cluster, the hypothesis states that, even when controlling for the amount of knowledge components covered, the dyads will learn more than the individuals. | + | '''The Interactive Hypothesis''': collaborative peers will learn more than the individual learners because they benefit from the process of negotiating meaning with a peer, of appropriating part of the peers’ perspective, of building and maintaining common ground, and of articulating their knowledge and clarifying it when the peer misunderstands. In terms of the Interactive Communication cluster, the hypothesis states that, even when controlling for the amount of knowledge components covered, the dyads will learn more than the individuals. |

| − | + | This experiment implements the following instructional principle: [[Prompted Self-explanation]] | |

=== Dependent variables === | === Dependent variables === | ||

| − | * ''Near transfer, immediate'': electrodynamics problems solved in Andes during the laboratory period. | + | * ''Near transfer, immediate'': electrodynamics problems solved in [[Andes]] during the laboratory period (2 hrs.). |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== Results === | === Results === | ||

| − | + | '''Laboratory Experiment''' | |

| − | + | We conducted a laboratory (''in vitro'' not ''in vivo'') experiment during the Spring 2007 semester. Undergraduate volunteers, who were enrolled in the second semester of physics at the University of Pittsburgh, composed the sample for the study. Unfortunately, the sample size was small because our pool of participants was extremely limited. | |

| − | ''' | + | '''Procedure''' |

| + | Participants were randomly assigned to condition. The first activity was to train the participants in their respective explanation activities. They read the instructions to the experiment, presented on a webpage, followed by the prompts used after each step of the example. Finally, they read an example of a hypothetical self-explanation or joint explanation. | ||

| − | + | After reading the experimental instructions, they then watched an introductory video to the Andes physics tutor. Afterwards, they solved a simple, single-principle electrodynamics problem (i.e., the warm-up problem). Once they finished, participants then watched a video solving an ''isomorphic problem''. Note that this procedure is slightly different from those used in the past where examples are presented before solving problems (e.g., Sweller & Cooper, 1985; Exper. 2). The videos decomposed into steps, and students were prompted to explain each step. The cycle of explaining examples and solving problems repeated until either 4 problems were solved or 2 hours elapsed. The first problem was used as a warm-up exercise, and the problems became progressively more complex. | |

| − | + | As in our [[Hausmann_Study | first experiment]], we used normalize assistance scores. Normalize assistance scores were defined as the sum of all the errors and requests for help on that problem divided by the number of entries made in solving that problem. Thus, lower assistance scores indicate that the student derived a solution while making fewer mistakes and getting less help, and thus demonstrating better performance and understanding. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | As in our first experiment, we used normalize assistance scores. Normalize assistance scores were defined as the sum of all the errors and requests for help on that problem divided by the number of entries made in solving that problem. Thus, lower assistance scores indicate that the student derived a solution while making fewer mistakes and getting less help, and thus demonstrating better performance and understanding. | ||

The results from the laboratory study were as follows: | The results from the laboratory study were as follows: | ||

| Line 83: | Line 78: | ||

* '''Differences between conditions''' | * '''Differences between conditions''' | ||

| − | The jointly constructed explanation (JCE) condition (''M'' = .45, ''SD'' = . | + | The jointly constructed explanation (JCE) condition (''M'' = .45, ''SD'' = .33) demonstrated lower assistance scores than the individually constructed explanation (ICE) condition (''M'' = 1.00, ''SD'' = .67). The difference between experimental conditions was statistically reliable and of high practical significance, ''F''(1, 23) = 7.33, ''p'' = .01, η<sub>p</sub><sup>2</sup> = .24. |

* '''Problem by condition''' | * '''Problem by condition''' | ||

| − | The pattern observed at the level of conditions replicated at the level of problem. That is, when the problem was used as a | + | The pattern observed at the level of conditions replicated at the level of problem. That is, when the problem was used as a within factor in a repeated measures analysis of variance (MANOVA), the JCE condition demonstrated lower normalized assistance scores for all of the problems, except for the first, warm-up problem (see Table). |

<br> | <br> | ||

| Line 97: | Line 92: | ||

|η<sub>p</sub><sup>2</sup> | |η<sub>p</sub><sup>2</sup> | ||

|- | |- | ||

| − | | | + | |Warm-up ||0.75 ||0.63 ||.483 ||.024 |

| − | |0.75 | ||

| − | |0.63 | ||

| − | |.483 | ||

| − | |.024 | ||

|- | |- | ||

| − | | | + | |Problem 2 || 1.09 || 0.32 ||.003 ||.341 |

| − | | 1.09 | ||

| − | | 0.32 | ||

| − | |.003 | ||

| − | |.341 | ||

|- | |- | ||

| − | | | + | |Problem 3 || 1.08 || 0.51 ||.059 ||.160 |

| − | | 1.08 | ||

| − | | 0.51 | ||

| − | |.059 | ||

| − | |.160 | ||

|- | |- | ||

| − | | | + | |Problem 4 || 0.67 || 0.29 ||.034 ||.196 |

| − | | 0.67 | ||

| − | | 0.29 | ||

| − | |.034 | ||

| − | |.196 | ||

|} | |} | ||

<br> | <br> | ||

| − | In addition to providing higher quality solutions, the jointly constructed explanation condition (''M'' = 985.71, ''SD'' = 45.60) also solved their problems more quickly than the individually constructed explanation condition (''M'' = 1097.75, ''SD'' = 51.45). Although the omnibus difference between experimental conditions was not statistically reliable, ''F''(1, 23) = 2.66, ''p'' = .12, η<sub>p</sub><sup>2</sup> = .10, the differences in solution times for the second and third problem were reliably lower for the JCE condition. This finding is particularly interesting because the experiment was capped at two hours; therefore, the dyads were able to complete the problem set more often than the individuals | + | In addition to providing higher quality solutions, the jointly constructed explanation condition (''M'' = 985.71, ''SD'' = 45.60) also solved their problems more quickly than the individually constructed explanation condition (''M'' = 1097.75, ''SD'' = 51.45). Although the omnibus difference between experimental conditions was not statistically reliable, ''F''(1, 23) = 2.66, ''p'' = .12, η<sub>p</sub><sup>2</sup> = .10, the differences in solution times for the second and third problem were reliably lower for the JCE condition. This finding is particularly interesting because the experiment was capped at two hours; therefore, the dyads were able to complete the problem set more often than the individuals, χ<sup>2</sup> = 4.81, ''p'' = .03. There were no reliable differences in terms of the amount of time explaining the steps of the worked-out examples. |

* '''Knowledge component (KC) by condition''' | * '''Knowledge component (KC) by condition''' | ||

Because not all of the individuals were able to complete the entire problem set, their data could not be included in an analysis of the knowledge components. A MANOVA assumes that each individual participates in all of the measures. However, this is not the case when the individuals did not complete the last problem. Therefore, this fine-grained analysis of learning will need to wait until the study can be replicated in the classroom, with a larger sample size. | Because not all of the individuals were able to complete the entire problem set, their data could not be included in an analysis of the knowledge components. A MANOVA assumes that each individual participates in all of the measures. However, this is not the case when the individuals did not complete the last problem. Therefore, this fine-grained analysis of learning will need to wait until the study can be replicated in the classroom, with a larger sample size. | ||

| + | |||

| + | In an effort to anticipate the replication, we used a data imputation method for estimating missing values for each student (SPSS v14.0: Transform/Replace missing values/Median of nearby points). This provided a complete dataset for all participants. The results are summarized in the table below. | ||

| + | |||

| + | <tt> | ||

| + | {| border="1" cellspacing="0" cellpadding="5" align="center" | ||

| + | |- | ||

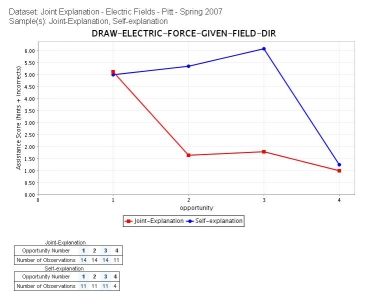

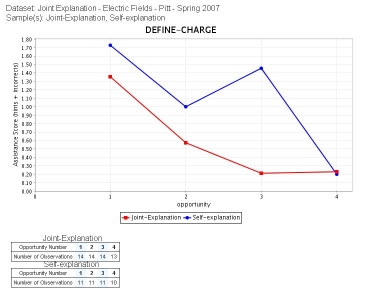

| + | |MAG || <center>[[Image:mag_learning_curves.JPG]]</center> || EFIELD || <center>[[Image:efield_learning_curves.JPG]]</center> | ||

| + | |- | ||

| + | |FORCE || <center>[[Image:force_learning_curves.JPG]]</center> ||CHARGE || <center>[[Image:charge_learning_curves.JPG]]</center> | ||

| + | |- | ||

| + | |} | ||

| + | </tt> | ||

| + | <center>'''Table 2'''. Learning curves for each knowledge component.</center> | ||

| + | <br> | ||

=== Explanation === | === Explanation === | ||

| − | This study is part of the [[Interactive_Communication|Interactive Communication cluster]], and it hypothesizes that the | + | This study is part of the [[Interactive_Communication|Interactive Communication cluster]], and it hypothesizes that the [[prompting]] singletons and dyads should increase the probability that they traverse useful [[learning events]]. However, it is unclear if the act of communicating with a partner should increase learning, if the type of statements (i.e., explanations) are held constant. A strong-sense version of the Interactive Communication hypothesis would suggest that interacting with a peer is beneficial for learning because dyads can learn from their partner by assimilating [[knowledge component]]s articulated by the partner or via corrective comments that help to refine vague or incorrect [[knowledge component]]s. |

| − | + | Both [[self-explanation]] and joint-explanation should lead to deeper [[Knowledge Construction Dialogues]] or monolgues because they are likely to include integrative statements that connect information with prior knowledge, connect information with or previously stated material, or infer new knowledge. However, it may be more likely that deeper knowledge construction occurs during dialogue because the communicative partner provides a social cue to avoid glossing over the material. | |


Latest revision as of 14:38, 10 October 2008


The Effects of Interaction on Robust Learning

Robert Hausmann and Kurt VanLehn

Summary Table

| Field | Value |
|---|---|
| PIs | Robert G.M. Hausmann & Kurt VanLehn |
| Other Contributors | Robert N. Shelby (USNA), Brett van de Sande (Pitt) |
| Study Start Date | Sept. 1, 2006 |
| Study End Date | Aug. 31, 2007 |
| LearnLab Site | none (in vitro study) |
| LearnLab Course | Physics |
| Number of Students | N = 39 |
| Total Participant Hours | 78 hrs. |
| DataShop | Loaded: 11/02/07 |

Abstract

It is widely assumed that an interactive learning resource produces larger learning gains than non-interactive sources. This assumption, however, may not be completely accurate. For instance, research on human tutoring suggests that, under very particular circumstances, human tutoring (interactive) is just as effective as reading a textbook (non-interactive) (VanLehn et al., 2007). This raises the question: under which conditions should we expect strong learning gains from interactive learning situations?

The current project addresses this question by contrasting interactive learning (jointly constructing explanations) with non-interactive learning (individually constructing explanations). Students were prompted either to self-explain, in the singleton condition, or to jointly construct explanations, in the dyad condition.

Background and Significance

Several studies have shown that collaborative learning produces larger learning gains than studying the same material alone. This finding has been replicated across many configurations of students and several domains. Once the effect was established, the field moved into a more interesting phase: accurately describing the interactions themselves and their impact on student learning (Dillenbourg, 1999). One active topic in collaborative-learning research is the "co-construction" of new knowledge. Because co-construction has been defined in many different ways, the present study limits the scope of co-constructed ideas to jointly constructed explanations.

Evidence supporting jointly constructed explanations is sparse, but it can be found in a study by McGregor and Chi (2002). They found that collaborative peers not only jointly construct ideas but also reuse those ideas in a later problem-solving session. One limitation of their study was that it did not measure the impact of jointly constructed ideas on robust learning. In a related study, Hausmann, Chi, and Roy (2004) found correlational evidence for learning from co-construction. To provide more stringent evidence for the impact of jointly constructed explanations, the present study manipulates the types of conversations dyads have by prompting for jointly constructed explanations, and measures the effect on robust learning.

Glossary

- Jointly constructed explanation
- Prompting

Research question

How is robust learning affected by self-explanation vs. jointly constructed explanations?

Independent variables

Only one independent variable, with two levels, was used:

- Explanation-construction: individually constructed explanations vs. jointly constructed explanations

Prompting for an explanation was intended to increase the probability that the individual or dyad would traverse a useful learning-event path.

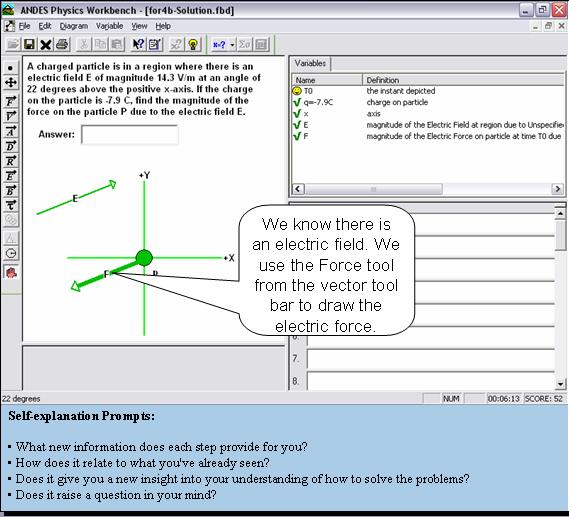

Figure 1. An example from the Individual Self-explanation condition

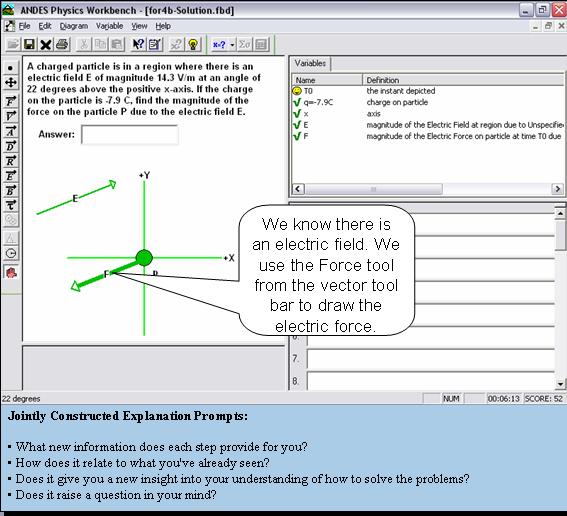

Figure 2. An example from the Joint Self-explanation condition

Hypothesis

The Interactive Hypothesis: collaborative peers will learn more than individual learners because they benefit from negotiating meaning with a peer, appropriating part of the peer's perspective, building and maintaining common ground, and articulating their knowledge and clarifying it when the peer misunderstands. In terms of the Interactive Communication cluster, the hypothesis states that the dyads will learn more than the individuals even when the number of knowledge components covered is held constant.

This experiment implements the following instructional principle: Prompted Self-explanation.

Dependent variables

- Near transfer, immediate: electrodynamics problems solved in Andes during the laboratory period (2 hrs.).

Results

Laboratory Experiment

We conducted a laboratory (in vitro, not in vivo) experiment during the Spring 2007 semester. The sample consisted of undergraduate volunteers enrolled in the second semester of physics at the University of Pittsburgh. Unfortunately, the sample size was small because our pool of participants was extremely limited.

Procedure

Participants were randomly assigned to condition. The first activity trained participants in their respective explanation activities. They read the instructions for the experiment, presented on a webpage, followed by the prompts that would be used after each step of the example. Finally, they read an example of a hypothetical self-explanation or joint explanation.

After reading the instructions, participants watched an introductory video on the Andes physics tutor. They then solved a simple, single-principle electrodynamics problem (the warm-up problem). Once they finished, they watched a video of an isomorphic problem being solved. Note that this procedure differs slightly from earlier studies in which examples were presented before problems were solved (e.g., Sweller & Cooper, 1985, Experiment 2). The videos were decomposed into steps, and students were prompted to explain each step. This cycle of solving problems and explaining examples repeated until either 4 problems were solved or 2 hours had elapsed. The first problem served as a warm-up exercise, and the problems became progressively more complex.
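The session's stopping rule (at most 4 problems, or 2 hours, whichever comes first) can be sketched as follows. This is a minimal illustration under stated assumptions, not the software used in the study: the function name is hypothetical, each duration is assumed to cover one problem plus the explained example that follows it, and a problem that would run past the cap is assumed not to count as completed.

```python
from typing import Sequence

# Hypothetical sketch of the session stopping rule described above:
# at most 4 problems, and the session ends once the 2-hour cap is reached.
MAX_PROBLEMS = 4
CAP_MINUTES = 120.0


def problems_completed(durations_min: Sequence[float],
                       cap_min: float = CAP_MINUTES,
                       max_problems: int = MAX_PROBLEMS) -> int:
    """Count problems finished before the time cap.

    Each entry of durations_min is assumed (hypothetically) to be the minutes
    spent on one problem plus the explained example that follows it.
    """
    elapsed = 0.0
    completed = 0
    for duration in durations_min[:max_problems]:
        if elapsed + duration > cap_min:
            break  # the cap falls inside this problem, so it is not counted
        elapsed += duration
        completed += 1
    return completed


if __name__ == "__main__":
    # Illustrative, made-up timings (not data from the study).
    print(problems_completed([20, 30, 40, 40]))  # 130 min in total, so only 3 fit
    print(problems_completed([20, 25, 30, 35]))  # 110 min in total, so all 4 fit
```

Under a rule like this, faster problem solving translates directly into more problems completed within the session.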

As in our first experiment, we used normalized assistance scores, defined as the sum of all errors and requests for help on a problem divided by the number of entries made in solving that problem. Thus, a lower assistance score indicates that the student derived a solution with fewer mistakes and less help, demonstrating better performance and understanding.
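Written as a formula, the normalized assistance score for one problem is (errors + help requests) / entries. The sketch below computes it; the `ProblemLog` record and its field names are hypothetical and are not meant to reflect the actual Andes or DataShop log format.

```python
from dataclasses import dataclass


@dataclass
class ProblemLog:
    """Hypothetical per-problem summary of one student's (or dyad's) activity."""
    errors: int         # incorrect entries on the problem
    help_requests: int  # requests for help on the problem
    entries: int        # total entries made while solving the problem


def normalized_assistance(log: ProblemLog) -> float:
    """(errors + help requests) / entries; lower means less assistance needed."""
    if log.entries <= 0:
        raise ValueError("a problem must have at least one entry")
    return (log.errors + log.help_requests) / log.entries


if __name__ == "__main__":
    # Made-up example: 3 errors and 2 help requests over 20 entries.
    print(normalized_assistance(ProblemLog(errors=3, help_requests=2, entries=20)))  # 0.25
```

Because errors and help requests are not capped by the number of entries, the score can exceed 1, as the ICE means of 1.09 and 1.08 on Problems 2 and 3 do.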

The results from the laboratory study were as follows:

- Differences between conditions

The jointly constructed explanation (JCE) condition (M = .45, SD = .33) demonstrated lower assistance scores than the individually constructed explanation (ICE) condition (M = 1.00, SD = .67). The difference between experimental conditions was statistically reliable and of high practical significance, F(1, 23) = 7.33, p = .01, ηₚ² = .24.
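Partial eta squared can be recovered from a reported F statistic and its degrees of freedom through the standard identity ηₚ² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity as a consistency check on the values reported in this section; it is our illustration, not part of the authors' analysis.

```python
def partial_eta_squared(f_stat: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared, SS_effect / (SS_effect + SS_error), from an F ratio.

    Uses the identity eta_p^2 = F * df_effect / (F * df_effect + df_error).
    """
    numerator = f_stat * df_effect
    return numerator / (numerator + df_error)


if __name__ == "__main__":
    # Assistance scores: F(1, 23) = 7.33, reported as .24.
    print(round(partial_eta_squared(7.33, 1, 23), 2))  # 0.24
    # Solution times: F(1, 23) = 2.66, reported as .10.
    print(round(partial_eta_squared(2.66, 1, 23), 2))  # 0.1
```

Both reported effect sizes are consistent with their F statistics and degrees of freedom.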

- Problem by condition

The pattern observed at the level of conditions replicated at the level of individual problems. That is, when problem was used as a within-subjects factor in a repeated-measures analysis of variance (MANOVA), the JCE condition demonstrated lower normalized assistance scores on every problem except the first, warm-up problem (see the table below).

| Problem | ICE (n = 9) | JCE (n = 14) | p | ηₚ² |
|---|---|---|---|---|
| Warm-up | 0.75 | 0.63 | .483 | .024 |
| Problem 2 | 1.09 | 0.32 | .003 | .341 |
| Problem 3 | 1.08 | 0.51 | .059 | .160 |
| Problem 4 | 0.67 | 0.29 | .034 | .196 |

In addition to producing higher-quality solutions, the JCE condition (M = 985.71, SD = 45.60) also solved its problems more quickly than the ICE condition (M = 1097.75, SD = 51.45). Although the omnibus difference between experimental conditions was not statistically reliable, F(1, 23) = 2.66, p = .12, ηₚ² = .10, solution times for the second and third problems were reliably shorter for the JCE condition. This finding is particularly important because the session was capped at two hours; as a result, the dyads completed the problem set more often than the individuals, χ² = 4.81, p = .03. There were no reliable differences in the amount of time spent explaining the steps of the worked-out examples.

- Knowledge component (KC) by condition

Because not all of the individuals completed the entire problem set, their data could not be included in an analysis of the knowledge components: a MANOVA requires every participant to contribute a score on every measure, which is not the case for individuals who did not complete the last problem. Therefore, this fine-grained analysis of learning will have to wait until the study can be replicated in the classroom with a larger sample.

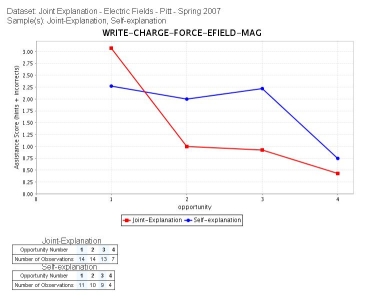

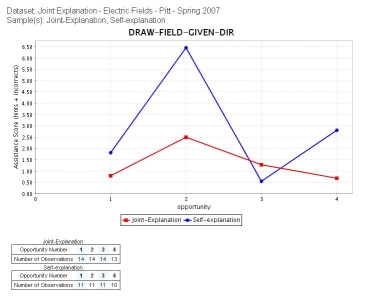

To anticipate the replication, we estimated each student's missing values with a data imputation method (SPSS v14.0: Transform/Replace missing values/Median of nearby points). This yielded a complete dataset for all participants. The results are summarized in the table below.

|  |  |  |  |
|---|---|---|---|
| MAG |  | EFIELD |  |
| FORCE |  | CHARGE |  |

Explanation

This study is part of the Interactive Communication cluster. It hypothesizes that prompting singletons and dyads should increase the probability that they traverse useful learning events. However, it is unclear whether the act of communicating with a partner should increase learning when the type of statement (i.e., explanation) is held constant. A strong version of the Interactive Communication hypothesis would predict that interacting with a peer benefits learning because dyads can learn from their partner, either by assimilating knowledge components the partner articulates or through corrective comments that help refine vague or incorrect knowledge components.

Both self-explanation and joint explanation should lead to deeper Knowledge Construction Dialogues (or monologues) because they are likely to include integrative statements that connect information with prior knowledge or with previously stated material, or that infer new knowledge. However, deeper knowledge construction may be more likely during dialogue, because the communicative partner provides a social cue not to gloss over the material.

Further Information

Annotated bibliography

- Hausmann, R. G. M., van de Sande, B., & VanLehn, K. (2008, July). Are self-explaining and coached problem solving more effective when done by pairs of students than alone? Poster presented at the 30th meeting of the Cognitive Science Society, Washington, DC.

- Hausmann, R. G. M., van de Sande, B., & VanLehn, K. (2008, June). Shall we explain? Augmenting learning from intelligent tutoring systems and peer collaboration. Paper presented at the 9th International Conference on Intelligent Tutoring Systems, Montréal, Canada.

- Hausmann, R. G. M., van de Sande, B., van de Sande, C., & VanLehn, K. (2008, June). Productive dialog during collaborative problem solving. Paper presented at the 2008 International Conference for the Learning Sciences, Utrecht, Netherlands.

- Hausmann, R. G. M., van de Sande, B., & VanLehn, K. (2008, May). Trialog: How peer collaboration helps remediate errors in an ITS. Paper presented at the 21st International FLAIRS Conference, Coconut Grove, FL.

- Presentation to the PSLC Advisory Board: January 2008

- Conference proceeding submitted to CogSci 2008

- Presented at a PSLC lunch: June 12, 2006

- Presented to the Physics LearnLab: April 4, 2007

References

- Dillenbourg, P. (1999). What do you mean by "collaborative learning"? In P. Dillenbourg (Ed.), Collaborative learning: Cognitive and computational approaches (pp. 1-19). Oxford: Elsevier.

- Hausmann, R. G. M., & Chi, M. T. H. (2002). Can a computer interface support self-explaining? Cognitive Technology, 7(1), 4-14.

- Hausmann, R. G. M., Chi, M. T. H., & Roy, M. (2004). Learning from collaborative problem solving: An analysis of three hypothesized mechanisms. In K. D. Forbus, D. Gentner, & T. Regier (Eds.), 26th Annual Conference of the Cognitive Science Society (pp. 547-552). Mahwah, NJ: Lawrence Erlbaum.

- McGregor, M., & Chi, M. T. H. (2002). Collaborative interactions: The process of joint production and individual reuse of novel ideas. In W. D. Gray & C. D. Schunn (Eds.), 24th Annual Conference of the Cognitive Science Society. Mahwah, NJ: Lawrence Erlbaum.

- Sweller, J., & Cooper, G. A. (1985). The use of worked examples as a substitute for problem solving in learning algebra. Cognition and Instruction, 2(1), 59-89.

- VanLehn, K., Graesser, A. C., Jackson, G. T., Jordan, P., Olney, A., & Rosé, C. P. (2007). When are tutorial dialogues more effective than reading? Cognitive Science, 31(1), 3-62.

Connections

Future plans

Our future plans for June to August 2007:

- Transcribe the interactions and code them for explanations, either constructed jointly or individually.