Difference between revisions of "Hausmann Diss"

(→Independent variables) |

|||

| (64 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

== Elaborative and critical dialog: Two potentially effective problem-solving and learning interactions == | == Elaborative and critical dialog: Two potentially effective problem-solving and learning interactions == | ||

''Robert G.M. Hausmann and Michelene T.H. Chi'' | ''Robert G.M. Hausmann and Michelene T.H. Chi'' | ||

| + | |||

| + | === Summary Table === | ||

| + | {| border="1" cellspacing="0" cellpadding="5" style="text-align: left;" | ||

| + | | '''PIs''' || Robert G.M. Hausmann & Michelene T.H. Chi | ||

| + | |- | ||

| + | | '''Study Start Date''' || Sept. 20, 2004 | ||

| + | |- | ||

| + | | '''Study End Date''' || April 28, 2005 | ||

| + | |- | ||

| + | | '''LearnLab Site''' || none | ||

| + | |- | ||

| + | | '''LearnLab Course''' || none | ||

| + | |- | ||

| + | | '''Number of Students''' || ''N'' = 136 | ||

| + | |- | ||

| + | | '''Total Participant Hours''' || 68 hrs. | ||

| + | |- | ||

| + | | '''DataShop''' || no | ||

| + | |} | ||

| + | <br> | ||

=== Abstract === | === Abstract === | ||

| − | |||

| − | + | Recent research on peer dialog suggests that some dialog patterns are more strongly correlated with learning than others. A peer dialog, which is a subordinate category of interactive communication, occurs when two novices work together to collaboratively learn a set of [[knowledge component]]s, solve a problem, or both. Two aspects of peer dialog that have been shown to be correlated with learning are elaboration and constructive criticism. Elaboration can be defined as a conditionally relevant contribution that significantly develops another person’s idea. Constructive criticism is defined as either a request for justification or an evaluation of an idea. The primary goal for this project was to move beyond correlating dialog patterns with outcomes by training the participants to interact in specific ways. | |

| − | The results indicated that the | + | Participants were randomly assigned to one of four conditions: elaborative dyads, critical dyads, control dyads, and individuals. They were asked to solve a design problem, which was to optimize the design of a pre-existing bridge structure. Participants iteratively edited their design, analyzed its cost and effectiveness, and discussed their analyses to formulate their next modification. This process continued for thirty minutes, after which a post-test measuring both text-explicit and deep knowledge was administered. |

| + | |||

| + | The results indicated that the generated the same number of critical statements as control dyads; therefore, the critical condition was collapsed into the control condition. Alternatively, the elaborative condition generated better designs and learned more deep knowledge than the control condition. The elaborations led to shorter negotiations about what design modification to try next, so more designs were tried. These students thus sampled more of the underlying design space. This may also explain their increased learning because more appropriate [[learning events]] occurred. The problem-solving and learning outcomes also suggest that training individuals to elaborate may have been easier than asking them to produce evaluative statements. | ||

| + | |||

| + | === Background and Significance === | ||

| + | |||

| + | Past research on collaborative problem solving and learning has painted a fairly consistent picture of both its costs and benefits. For instance, [[collaboration]] seems to be an effective educational intervention; however, not all collaborative dialogs lead to positive outcomes. Instead, only certain dialog patterns tend to result in strong learning gains. For example, generating explanations tends to be associated with understanding (Chi, Bassok, Lewis, Reimann, & Glaser, 1989) while paraphrasing does not (Hausmann & Chi, 2002). Therefore, one of the goals of the learning sciences is to identify dialog patterns that tend to produce strong learning gains (Dillenbourg, Baker, Blaye, & O'Malley, 1995). One such candidate is elaborative dialogs (Brown & Palincsar, 1989). Elaboration is a likely candidate because it has been shown to support individual learning. Why is it the case that elaboration is an effective problem solving interaction? Critical interactions are another useful collaborative dialog. For example, the argumentation literature has shown that argumentation and counter-argument are related to a deep understanding of a domain (Leitao, 2003). However, an open question is how elaborative and critical interactions compare. | ||

=== Glossary === | === Glossary === | ||

| − | + | * '''[[Elaborative interaction]]''' | |

| − | * Elaborative | + | * '''[[Critical interaction]]''' |

| − | * Critical | + | See [[:Category:Hausmann_Diss|Hausmann_Diss Glossary]] |

| − | |||

=== Research question === | === Research question === | ||

| + | * Can students be trained to collaborate in specific ways? If so, what is the effect of collaborative training on problem-solving performance and learning? | ||

| + | * Do elaborative or critical interactions lead to better problem solving and/or learning than unscripted interactions? | ||

| + | * Why do elaborative dialogs lead to efficient problem solving and deep learning? | ||

=== Independent variables === | === Independent variables === | ||

| + | |||

| + | * Collaboration training: elaboration vs. critical vs. control vs. individual | ||

| + | |||

| + | |||

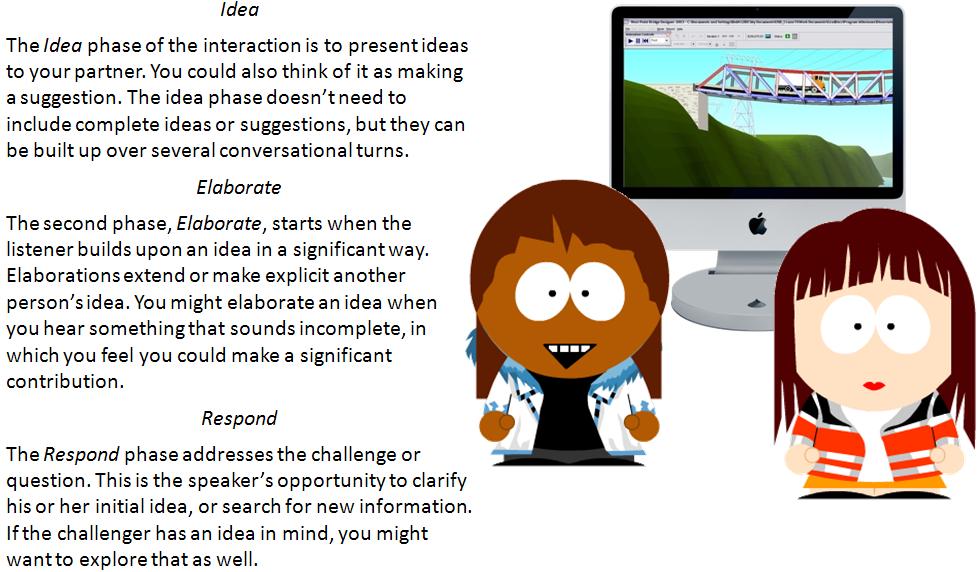

| + | <b>Figure 1</b>. An example from the Elaboration condition<Br><Br> | ||

| + | {| border="1" | ||

| + | | [[Image:IER.JPG]]<Br> | ||

| + | |} | ||

| + | |||

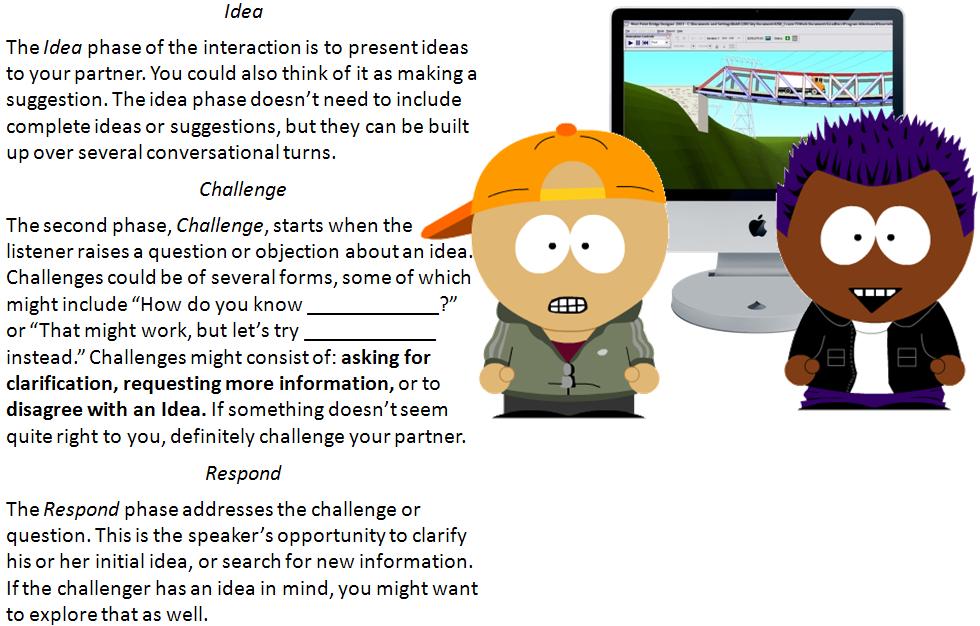

| + | <b>Figure 2</b>. An example from the Critical condition<Br><Br> | ||

| + | {| border="1" | ||

| + | | [[Image:ICR.JPG]]<Br> | ||

| + | |} | ||

| + | |||

| + | <b>Figure 3</b>. An example from the Control Paraphrase condition<Br><Br> | ||

| + | {| border="1" | ||

| + | | [[Image:Diss_Control.JPG]]<Br> | ||

| + | |} | ||

| + | |||

| + | <b>Figure 4</b>. An example from the Individual Paraphrase condition<Br><Br> | ||

| + | {| border="1" | ||

| + | | [[Image:IND.JPG]]<Br> | ||

| + | |} | ||

=== Hypothesis === | === Hypothesis === | ||

| + | |||

| + | The Interactive Communication cluster assumes that different types of interactions lead to different types of learning. | ||

| + | |||

| + | Elaborative dialog is hypothesized to enhance learning and problem solving by increasing the specification of another person’s message. To illustrate the hypothesis, consider an example from the domain in which this study was conducted: [http://bridgecontest.usma.edu/ bridge design]. Suppose one member of the dyad suggests they decrease the cross-sectional diameter of the members. The second member takes up this suggestion and extends it by proposing to only reduce the diameter of the vertical members. The verb "to change" requires the assignment of three variables: 1. An object to be modified, 2. The old property, and 3. The new property. Therefore, problem solving can be more efficient if the dyad elaborates the ideas by filling open variables. In contrast to elaboration, one member of the dyad could ask for clarification of the first member's idea (i.e., to request that the individual fill his or her own unfilled variable assignments). If the dyad is efficient, then they will be able to expose themselves to a wider array of knowledge components embedded in the simulated environment, thereby enhancing their learning from [[collaboration]]. | ||

| + | |||

| + | Critical dialogs are hypothesized to enhance problem solving by avoiding the space of designs that are ineffective, thereby saving time testing fewer bridges. The process of evaluation may also increase learning because the knowledge components used in this task may be more highly specified for particular applications. For instance, the participants are told that shorter bars are stronger under compression. Until they have seen this knowledge component in an actual design, they might not appreciate its importance. Thus, the knowledge component is reified under a concrete application. | ||

| + | |||

| + | This experiment implements the following instructional principle: [[Collaboration scripts]] | ||

=== Dependent variables === | === Dependent variables === | ||

| − | * ''Near transfer, immediate'': | + | ==== Learning ==== |

| + | |||

| + | * ''[[Normal post-test]], Near transfer, immediate'': Text-explicit learning was defined as the acquisition of information explicitly stated in the text. Text-explicit learning was measured by administering identical pre- and post-tests. Standardized gain scores were used to measure learning. | ||

| − | * '' | + | * ''Far [[transfer]], immediate'': Deep, inferential learning was defined as concepts that were not explicitly stated, and thus needed to be inferred from reading the text or interacting with the simulation. Inferential learning was also measured by administering identical pre- and post-tests, using standardized gain scores. |

| − | + | ==== Problem Solving ==== | |

| − | * '' | + | * ''Iterations'': the number of bridges tested. Measuring the number of designs tested served as a proxy variable for productivity. That is, if the pair is able to make a suggestion and agree quickly, then they will be able to test more designs, signalling higher levels of productivity. |

| + | |||

| + | * ''Optimization score'': the summation of the stress-to-strength ratios for each member, which was then divided by the total number of members: | ||

| + | |||

| + | <math>\frac{\sum_{n=1}^i}{\frac{stress_{i}}{strength_{i}}}{n}</math> | ||

| + | |||

| + | where n = the number of members per bridge, stress is the amount of force loaded on the i-th member of the bridge, and strength is the maximum loading the i-th member can withstand before failure. The optimization score represents the average load per member; thus, higher values indicate better optimized designs. | ||

| + | |||

| + | * ''Savings score'': the amount of money saved was calculated by subtracting the price of their final bridge from the starting price. The savings was measured because it was the top-level goal given to the participants. | ||

| + | |||

| + | ==== Communication ==== | ||

| + | * ''Elaborative statements'': the number of elaboration, providing a justification, or providing an implication for a statement were counted and summed and divided by the total number of statements for each group to derive a ''elaborative'' score. | ||

| + | * ''Critical statements'': the number of counter-suggestions, clarification questions, requests for justification, and evaluation statements were counted and summed and divided by the total number of statements for each group to derive a ''critical'' score. | ||

| + | |||

| + | === Findings === | ||

| + | '''Learning''' | ||

| + | |||

| + | * For ''shallow'' measures of learning, that is, the [[normal post-test]] measures, there were no differences between conditions ''F'' (2, 133) < 1. See the text-explicit column in the table below. | ||

| + | * For ''deep'' measures of [[transfer]] of learning (see the inferential column), there was a main effect of condition, reflecting a higher score for the elaborative dyads (''M'' = 11.18, ''SD'' = 18.81) than the control dyads (''M'' = 3.31, ''SD'' = 15.33), ''F'' (2, 133) = 3.08, ''p'' < .05. | ||

| + | |||

| + | <tt> | ||

| + | {| border="1" cellpadding="5" cellspacing="0" align="center" | ||

| + | | rowspan="2"| | ||

| + | ! colspan="2" | Text-explicit | ||

| + | ! colspan="2" | Inferential | ||

| + | |- | ||

| + | | M | ||

| + | | SD | ||

| + | | M | ||

| + | | SD | ||

| + | |- | ||

| + | | Individuals||0.51||0.32||0.05||0.16 | ||

| + | |- | ||

| + | | Control Dyads||0.59||0.27||0.03||0.19 | ||

| + | |- | ||

| + | | Critical Dyads||0.52||0.23||0.02||0.20 | ||

| + | |- | ||

| + | | Elaborative Dyads||0.53||0.41||0.12||0.21 | ||

| + | |- | ||

| + | |} | ||

| + | </tt> | ||

| + | |||

| + | '''Problem solving''' | ||

| + | |||

| + | * There was a marginal effect of condition on optimization score, ''F'' (2, 75) = 2.60, ''p'' = .08. Post-hoc analyses revealed a reliable difference between the elaborative dyads and control dyads, ''d'' = .61, but no difference between the individuals. | ||

| + | * Elaborating a partner’s ideas and suggestions increased the dyads’ ability to optimize their designs. | ||

| + | * On the other hand, there was no effect of condition on savings, suggesting all conditions constructed equally priced bridges, ''F'' (2, 75) < 1. | ||

| + | |||

| + | <tt> | ||

| + | {| border="1" cellpadding="5" cellspacing="0" align="center" | ||

| + | | rowspan="2"| | ||

| + | ! colspan="2" | Iterations | ||

| + | ! colspan="2" | Savings | ||

| + | ! colspan="2" | Optimization Score | ||

| + | |- | ||

| + | | M | ||

| + | | SD | ||

| + | | M | ||

| + | | SD | ||

| + | | M | ||

| + | | SD | ||

| + | |- | ||

| + | |Individuals||49.55||23.36||50283.47||23073.10||0.56||0.13 | ||

| + | |- | ||

| + | |Control Dyads||39.65||8.73||49974.52||20718.92||0.57||0.11 | ||

| + | |- | ||

| + | |Critical Dyads||33.80||16.66||42415.56||24537.62||0.53||0.11 | ||

| + | |- | ||

| + | |Elaborative Dyads||41.00||18.62||55541.21||26621.19||0.63||0.15 | ||

| + | |- | ||

| + | |} | ||

| + | </tt> | ||

| + | |||

| + | '''Communication''' | ||

| + | |||

| + | * The elaborative dyads asked fewer ''clarification questions'' than the control condition. | ||

| + | * The elaborative condition explicitly mentioned and used the ''simulation feedback'' more than the control condition. | ||

| + | * The elaborative dyads were marginally more likely to be classified as using a ''positive problem-solving strategy'' than the control dyads. | ||

=== Explanation === | === Explanation === | ||

| − | === Annotated bibliography === | + | Elaboration may facilitate the rapid assignment of variables to unfilled slots. The results suggest that elaboration may have been effective in filling unassigned variables because the elaborative dyads asked fewer clarification questions than the control condition. Additionally, the results indicate that the elaborative dyads were better able to use the feedback from the simulation in deciding where to implement the changes. |

| − | * Presentation to the NSF Site Visitors, May, 2005 | + | |

| + | Combining these two results, making faster variable assignments and better use of the simulation’s feedback, an exchange between two dyads in the elaboration condition might be interpreted in the following way. One person suggests that they modify the member properties by switching solid members to hollow. The second person may elaborate the suggestion by looking at the feedback and making a recommendation. If the second person makes explicit how she made her recommendation, then the use of the feedback is now available to the dyad for future use. The finding that the elaborative dyads were more likely to be classified as using positive [[strategies]] supports this interpretation. If the dyad is able to cover more of the design space, they will more likely be exposed to more knowledge components thereby learning deeper knowledge. Furthermore, the partner in the group can serve as an additional source of knowledge components. Members of a dyad are exposed to the knowledge embedded in the simulation, as well as the information shared by the partner. | ||

| + | |||

| + | === Further Information === | ||

| + | ==== Annotated bibliography ==== | ||

| + | * Submitted to the ''Journal of Educational Psychology'' on March 19, 2008. | ||

| + | * Presented at the Festschrift for Lauren Resnick, May, 2005 [http://www.pitt.edu/AFShome/c/h/chi/public/html/TalkAndDialogue] | ||

| + | * Presentation to the NSF Site Visitors, May, 2005 | ||

* Presented at CogSci2006, July, 2006 | * Presented at CogSci2006, July, 2006 | ||

| + | * Some results were presented to the Intelligent Tutoring in Serious Games workshop, Aug. 2006 [http://projects.ict.usc.edu/itgs/talks/Hausmann_Generative%20Dialogue%20Patterns.ppt] | ||

| + | |||

| + | ==== References ==== | ||

| + | # Hausmann, R. G. M. (2006). Why do elaborative dialogs lead to effective problem solving and deep learning? In R. Sun & N. Miyake (Eds.), [http://www.learnlab.org/uploads/mypslc/publications/pos491-hausmann.pdf 28th Annual Meeting of the Cognitive Science Society] (pp. 1465-1469). Vancouver, B.C.: Sheridan Printing. | ||

| + | # Hausmann, R. G. M. (2005). [http://etd.library.pitt.edu/ETD/available/etd-08172005-144859 Elaborative and critical dialog: Two potentially effective problem-solving and learning interactions.] Unpublished Dissertation, University Of Pittsburgh, Pittsburgh, PA. | ||

| + | |||

| + | ==== Connections ==== | ||

| + | * [[Rummel Scripted Collaborative Problem Solving | Collaborative Extensions to the Cognitive Tutor Algebra: Scripted Collaborative Problem Solving]] | ||

| + | * [[Using Elaborated Explanations to Support Geometry Learning (Aleven & Butcher) | Using Elaborated Explanations to Support Geometry Learning]] | ||

| − | === | + | ==== Future plans ==== |

| − | + | * Replicate with stronger manipulation of critical interactions. | |

Latest revision as of 17:02, 10 October 2008


Elaborative and critical dialog: Two potentially effective problem-solving and learning interactions

Robert G.M. Hausmann and Michelene T.H. Chi

Summary Table

| PIs | Robert G.M. Hausmann & Michelene T.H. Chi |
|---|---|
| Study Start Date | Sept. 20, 2004 |
| Study End Date | April 28, 2005 |
| LearnLab Site | none |
| LearnLab Course | none |
| Number of Students | N = 136 |
| Total Participant Hours | 68 hrs. |
| DataShop | no |

Abstract

Recent research on peer dialog suggests that some dialog patterns are more strongly correlated with learning than others. A peer dialog, a subcategory of interactive communication, occurs when two novices work together to learn a set of knowledge components, solve a problem, or both. Two aspects of peer dialog that have been shown to correlate with learning are elaboration and constructive criticism. Elaboration can be defined as a conditionally relevant contribution that significantly develops another person’s idea. Constructive criticism is defined as either a request for justification or an evaluation of an idea. The primary goal of this project was to move beyond correlating dialog patterns with outcomes by training participants to interact in specific ways.

Participants were randomly assigned to one of four conditions: elaborative dyads, critical dyads, control dyads, and individuals. They were asked to solve a design problem, which was to optimize the design of a pre-existing bridge structure. Participants iteratively edited their design, analyzed its cost and effectiveness, and discussed their analyses to formulate their next modification. This process continued for thirty minutes, after which a post-test measuring both text-explicit and deep knowledge was administered.

The results indicated that the critical dyads generated the same number of critical statements as the control dyads; therefore, the critical condition was collapsed into the control condition. In contrast, the elaborative condition generated better designs and acquired more deep knowledge than the control condition. The elaborations led to shorter negotiations about which design modification to try next, so more designs were tried, and these students thus sampled more of the underlying design space. This may also explain their increased learning, because more appropriate learning events occurred. The problem-solving and learning outcomes also suggest that training individuals to elaborate may have been easier than asking them to produce evaluative statements.

Background and Significance

Past research on collaborative problem solving and learning has painted a fairly consistent picture of both its costs and its benefits. For instance, collaboration seems to be an effective educational intervention; however, not all collaborative dialogs lead to positive outcomes. Instead, only certain dialog patterns tend to produce strong learning gains. For example, generating explanations tends to be associated with understanding (Chi, Bassok, Lewis, Reimann, & Glaser, 1989), while paraphrasing does not (Hausmann & Chi, 2002). Therefore, one goal of the learning sciences is to identify the dialog patterns that tend to produce strong learning gains (Dillenbourg, Baker, Blaye, & O'Malley, 1995). One such candidate is elaborative dialog (Brown & Palincsar, 1989), which is a likely candidate because elaboration has been shown to support individual learning. Why elaboration is an effective problem-solving interaction, however, is less well understood. Critical interactions are another potentially useful form of collaborative dialog; for example, the argumentation literature has shown that argumentation and counter-argument are related to a deep understanding of a domain (Leitao, 2003). However, how elaborative and critical interactions compare remains an open question.

Glossary

- Elaborative interaction

- Critical interaction

Research question

- Can students be trained to collaborate in specific ways? If so, what is the effect of collaborative training on problem-solving performance and learning?

- Do elaborative or critical interactions lead to better problem solving and/or learning than unscripted interactions?

- Why do elaborative dialogs lead to efficient problem solving and deep learning?

Independent variables

- Collaboration training: elaboration vs. critical vs. control vs. individual

Figure 1. An example from the Elaboration condition

Figure 2. An example from the Critical condition

Figure 3. An example from the Control Paraphrase condition

Figure 4. An example from the Individual Paraphrase condition

Hypothesis

The Interactive Communication cluster assumes that different types of interactions lead to different types of learning.

Elaborative dialog is hypothesized to enhance learning and problem solving by increasing the specification of another person’s message. To illustrate the hypothesis, consider an example from the domain in which this study was conducted: bridge design (http://bridgecontest.usma.edu/). Suppose one member of the dyad suggests that they decrease the cross-sectional diameter of the bridge members. The second member takes up this suggestion and extends it by proposing to reduce the diameter of only the vertical members. The verb "to change" requires the assignment of three variables: (1) the object to be modified, (2) the old property, and (3) the new property. Problem solving can therefore be more efficient if the dyad elaborates ideas by filling these open variables. The alternative to elaboration is for one member of the dyad to ask for clarification of the other member's idea (i.e., to request that the speaker fill his or her own unassigned variables), which requires an additional exchange before the modification can be tested. If the dyad is efficient, its members will be exposed to a wider array of the knowledge components embedded in the simulated environment, thereby enhancing their learning from collaboration.
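The slot-filling account above can be made concrete with a small sketch. The names `ChangeProposal`, `elaborate`, and `clarification_question` are hypothetical and are not part of the study's materials; the sketch simply treats a proposed change as a three-slot frame and, as a simplification, treats the first speaker's vague "the members" as an unfilled object slot.

```python
from dataclasses import dataclass, replace
from typing import List, Optional

SLOTS = ("target", "old_property", "new_property")


@dataclass(frozen=True)
class ChangeProposal:
    """Hypothetical frame for the verb "to change".

    A modification is only testable once all three slots are filled:
    the object to be modified, its old property, and its new property.
    """
    target: Optional[str] = None        # 1. object to be modified
    old_property: Optional[str] = None  # 2. old property
    new_property: Optional[str] = None  # 3. new property

    def open_slots(self) -> List[str]:
        """Return the names of slots that are still unassigned."""
        return [name for name in SLOTS if getattr(self, name) is None]

    def is_testable(self) -> bool:
        """True once every slot has a value."""
        return not self.open_slots()


def elaborate(proposal: ChangeProposal, **fills: str) -> ChangeProposal:
    """Elaborative move: the partner fills open slots directly.

    Slots that are already assigned are left unchanged.
    """
    open_now = proposal.open_slots()
    return replace(proposal, **{k: v for k, v in fills.items() if k in open_now})


def clarification_question(proposal: ChangeProposal) -> List[str]:
    """Clarifying move: the partner asks the original speaker to fill the
    open slots; the proposal itself is not changed."""
    return proposal.open_slots()


# Speaker A: "Let's decrease the cross-sectional diameter."
first = ChangeProposal(new_property="smaller cross-sectional diameter")
print(clarification_question(first))  # ['target', 'old_property']

# Speaker B elaborates instead of asking: only the vertical members.
second = elaborate(first, target="vertical members",
                   old_property="current diameter")
print(second.is_testable())  # True
```

In this toy model, an elaborative move brings the proposal to a testable state within the partner's own turn, whereas a clarification question only hands the list of open slots back to the original speaker.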

Critical dialogs are hypothesized to enhance problem solving by steering the dyad away from regions of the design space that are ineffective, thereby saving the time that would otherwise be spent testing poor bridges. The process of evaluation may also increase learning because, through evaluation, the knowledge components used in this task may become more highly specified for particular applications. For instance, the participants are told that shorter bars are stronger under compression, but until they have seen this knowledge component at work in an actual design, they might not appreciate its importance. Evaluating a design thus reifies the knowledge component in a concrete application.

This experiment implements the following instructional principle: Collaboration scripts

Dependent variables

Learning

- Normal post-test, Near transfer, immediate: Text-explicit learning was defined as the acquisition of information explicitly stated in the text. Text-explicit learning was measured by administering identical pre- and post-tests. Standardized gain scores were used to measure learning.

- Far transfer, immediate: Deep, inferential learning was defined as the acquisition of concepts that were not explicitly stated and therefore had to be inferred from reading the text or interacting with the simulation. Inferential learning was also measured by administering identical pre- and post-tests and computing standardized gain scores.

Problem Solving

- Iterations: the number of bridges tested. The number of designs tested served as a proxy for productivity: if the pair can make a suggestion and agree on it quickly, they can test more designs, signaling higher productivity.

- Optimization score: the sum of the stress-to-strength ratios of all members, divided by the total number of members:

<math>\frac{1}{n}\sum_{i=1}^{n}\frac{stress_{i}}{strength_{i}}</math>

where n is the number of members per bridge, stress_i is the force loaded on the i-th member, and strength_i is the maximum load the i-th member can withstand before failure. The optimization score is therefore the average fraction of its capacity that each member carries; higher values indicate that members are used closer to their capacity, i.e., a better-optimized design.

- Savings score: the amount of money saved, calculated by subtracting the price of the final bridge from the starting price. Savings were measured because saving money was the top-level goal given to the participants.
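The two computed problem-solving measures can be sketched as follows (iterations is simply a count of tested designs). This is a minimal illustration, assuming the simulation reports each member's stress as a non-negative load magnitude together with its strength; the `Member` type and the example prices are hypothetical, not taken from the study.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Member:
    """One bridge member (hypothetical record of the simulation's output)."""
    stress: float    # load on the member, assumed a non-negative magnitude
    strength: float  # maximum load the member withstands before failure


def optimization_score(members: Sequence[Member]) -> float:
    """Average stress-to-strength ratio: (1/n) * sum_i stress_i / strength_i."""
    if not members:
        raise ValueError("a bridge needs at least one member")
    return sum(m.stress / m.strength for m in members) / len(members)


def savings(starting_price: float, final_price: float) -> float:
    """Money saved: the starting price minus the price of the final bridge."""
    return starting_price - final_price


bridge = [Member(25, 100), Member(50, 100), Member(75, 100), Member(100, 200)]
print(optimization_score(bridge))     # 0.5
print(savings(400_000.0, 350_000.0))  # 50000.0
```

Averaging the ratios, rather than summing them, keeps scores comparable across bridges with different numbers of members.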

Communication

- Elaborative statements: statements that elaborated, provided a justification, or provided an implication for a statement were counted, summed, and divided by the total number of statements for each group to derive an elaborative score.

- Critical statements: counter-suggestions, clarification questions, requests for justification, and evaluation statements were counted, summed, and divided by the total number of statements for each group to derive a critical score.
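Both communication measures are proportions of a group's coded statements. The following is a minimal sketch, assuming each statement has already been assigned a single code; the code labels below are hypothetical stand-ins for the study's coding scheme.

```python
from collections import Counter
from typing import AbstractSet, Iterable

# Hypothetical labels standing in for the study's coding categories.
ELABORATIVE_CODES = frozenset({"elaboration", "provides-justification",
                               "provides-implication"})
CRITICAL_CODES = frozenset({"counter-suggestion", "clarification-question",
                            "requests-justification", "evaluation"})


def proportion_score(codes: Iterable[str], target_codes: AbstractSet[str]) -> float:
    """Share of a group's statements whose code is in target_codes.

    Each element of `codes` is the single code assigned to one statement.
    """
    counts = Counter(codes)
    total = sum(counts.values())
    if total == 0:
        return 0.0  # no statements: report 0 rather than divide by zero
    return sum(counts[c] for c in target_codes) / total


group = ["elaboration", "paraphrase", "evaluation", "provides-justification",
         "clarification-question", "agreement", "elaboration", "other"]
print(proportion_score(group, ELABORATIVE_CODES))  # 0.375
print(proportion_score(group, CRITICAL_CODES))     # 0.25
```

Returning 0.0 for a group with no statements is a choice made for this sketch; an analysis might instead exclude such groups.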

Findings

Learning

- For shallow measures of learning, that is, the normal post-test measures, there were no differences between conditions, F (2, 133) < 1. See the text-explicit column in the table below.

- For deep measures of transfer of learning (see the inferential column), there was a main effect of condition, reflecting a higher score for the elaborative dyads (M = 11.18, SD = 18.81) than the control dyads (M = 3.31, SD = 15.33), F (2, 133) = 3.08, p < .05.

| Condition | Text-explicit M | Text-explicit SD | Inferential M | Inferential SD |
|---|---|---|---|---|
| Individuals | 0.51 | 0.32 | 0.05 | 0.16 |
| Control Dyads | 0.59 | 0.27 | 0.03 | 0.19 |
| Critical Dyads | 0.52 | 0.23 | 0.02 | 0.20 |
| Elaborative Dyads | 0.53 | 0.41 | 0.12 | 0.21 |

Problem solving

- There was a marginal effect of condition on optimization score, F (2, 75) = 2.60, p = .08. Post-hoc analyses revealed a reliable difference between the elaborative dyads and the control dyads, d = .61, but no reliable difference involving the individuals.

- Elaborating a partner’s ideas and suggestions increased the dyads’ ability to optimize their designs.

- On the other hand, there was no effect of condition on savings, F (2, 75) < 1, suggesting that all conditions constructed similarly priced bridges.

| Condition | Iterations M | Iterations SD | Savings M | Savings SD | Optimization Score M | Optimization Score SD |
|---|---|---|---|---|---|---|
| Individuals | 49.55 | 23.36 | 50283.47 | 23073.10 | 0.56 | 0.13 |
| Control Dyads | 39.65 | 8.73 | 49974.52 | 20718.92 | 0.57 | 0.11 |
| Critical Dyads | 33.80 | 16.66 | 42415.56 | 24537.62 | 0.53 | 0.11 |
| Elaborative Dyads | 41.00 | 18.62 | 55541.21 | 26621.19 | 0.63 | 0.15 |

Communication

- The elaborative dyads asked fewer clarification questions than the control condition.

- The elaborative condition explicitly mentioned and used the simulation feedback more than the control condition.

- The elaborative dyads were marginally more likely to be classified as using a positive problem-solving strategy than the control dyads.

Explanation

Elaboration may facilitate the rapid assignment of variables to unfilled slots. The results suggest that elaboration may have been effective in filling unassigned variables because the elaborative dyads asked fewer clarification questions than the control condition. Additionally, the results indicate that the elaborative dyads were better able to use the feedback from the simulation in deciding where to implement the changes.

Combining these two results (faster variable assignment and better use of the simulation’s feedback), an exchange between the two members of a dyad in the elaboration condition might be interpreted as follows. One person suggests that they modify the member properties by switching solid members to hollow ones. The second person may elaborate the suggestion by consulting the feedback and making a recommendation. If the second person makes explicit how she arrived at her recommendation, that use of the feedback becomes available to the dyad for future use. The finding that the elaborative dyads were more likely to be classified as using positive strategies supports this interpretation. If the dyad covers more of the design space, its members are more likely to be exposed to more knowledge components and thereby to acquire deeper knowledge. Furthermore, the partner can serve as an additional source of knowledge components: members of a dyad are exposed both to the knowledge embedded in the simulation and to the information shared by the partner.

Further Information

Annotated bibliography

- Submitted to the Journal of Educational Psychology on March 19, 2008.

- Presented at the Festschrift for Lauren Resnick, May, 2005 (http://www.pitt.edu/AFShome/c/h/chi/public/html/TalkAndDialogue)

- Presentation to the NSF Site Visitors, May, 2005

- Presented at CogSci2006, July, 2006

- Some results were presented to the Intelligent Tutoring in Serious Games workshop, Aug. 2006 (http://projects.ict.usc.edu/itgs/talks/Hausmann_Generative%20Dialogue%20Patterns.ppt)

References

- Hausmann, R. G. M. (2006). Why do elaborative dialogs lead to effective problem solving and deep learning? In R. Sun & N. Miyake (Eds.), 28th Annual Meeting of the Cognitive Science Society (pp. 1465-1469). Vancouver, B.C.: Sheridan Printing.

- Hausmann, R. G. M. (2005). Elaborative and critical dialog: Two potentially effective problem-solving and learning interactions. Unpublished doctoral dissertation, University of Pittsburgh, Pittsburgh, PA.

Connections

- Collaborative Extensions to the Cognitive Tutor Algebra: Scripted Collaborative Problem Solving

- Using Elaborated Explanations to Support Geometry Learning

Future plans

- Replicate with stronger manipulation of critical interactions.