Application of SimStudent for Error Analysis

Latest revision as of 13:30, 29 August 2011


Towards a theory of learning errors

Personnel

- PI: Noboru Matsuda

- Key Faculty: William W. Cohen, Kenneth R. Koedinger

Abstract

The purpose of this project is to study how students fail to learn correct knowledge components when studying from examples, and how they then make (typical) errors by applying the resulting incorrect knowledge components when solving problems later. We use a computational model of learning called SimStudent (http://www.SimStudent.org), which learns cognitive skills inductively from examples, either by passively reviewing worked-out examples or by actively engaging in tutored problem solving.

We are particularly interested in studying how differences in prior knowledge affect the nature and rate of learning. We hypothesize that when students rely on shallow, domain-general features (which we call "weak" features) as opposed to deep, more domain-specific features ("strong" features), they are more likely to make induction errors.

To test this hypothesis, we control SimStudent's prior knowledge and study how and when erroneous skills are learned by analyzing learning outcomes (both the process of learning and the performance on the post-test).

Overview of SimStudent

The fundamental technology underlying SimStudent is Inductive Logic Programming (Muggleton, 1999), applied to programming by demonstration (Cypher, 1993). Prior to learning, SimStudent is given a set of feature predicates and operators as prior knowledge.

A feature predicate is a Boolean function that tests for the existence of a certain feature. For example, isPolynomial("3x+1") returns true, but isConstantTerm("3x") returns false. An operator, on the other hand, is a more generic function that manipulates the various forms of objects involved in a target task. For example, addTerm("3x", "2x") returns "5x" and getCoefficient("-4y") returns "-4".
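The distinction between feature predicates and operators can be illustrated with a minimal Python sketch. This is not SimStudent's actual implementation: the snake_case function names mirror the examples above, while the term format (an optional sign, an optional integer, and at most one lowercase variable letter) and the helper parse_term are assumptions made only for illustration.

```python
import re

# Assumed, simplified term format: optional sign, optional integer,
# optional single lowercase variable (e.g. "3x", "-4y", "+1", "x").
_TERM = re.compile(r"([+-]?\d*)([a-z]?)")


def parse_term(term):
    """Hypothetical helper: split a term into (integer coefficient, variable)."""
    match = _TERM.fullmatch(term)
    if match is None or term in ("", "+", "-"):
        raise ValueError(f"not a simple term: {term!r}")
    coef_str, var = match.groups()
    if coef_str in ("", "+"):
        coef = 1
    elif coef_str == "-":
        coef = -1
    else:
        coef = int(coef_str)
    return coef, var


def is_constant_term(term):
    """Feature predicate: true if the term has no variable part."""
    try:
        return parse_term(term)[1] == ""
    except ValueError:
        return False


def is_polynomial(expr):
    """Feature predicate: true if expr is a sum of simple terms."""
    parts = re.findall(r"[+-]?[^+-]+", expr)
    if not parts or "".join(parts) != expr:
        return False
    try:
        for part in parts:
            parse_term(part)
    except ValueError:
        return False
    return True


def get_coefficient(term):
    """Operator: return the coefficient of a term as a string."""
    return str(parse_term(term)[0])


def add_term(left, right):
    """Operator: add two like terms and return the resulting term."""
    coef_l, var_l = parse_term(left)
    coef_r, var_r = parse_term(right)
    if var_l != var_r:
        raise ValueError("terms are not like terms")
    total = coef_l + coef_r
    if total == 0:
        return "0"
    if var_l == "":
        return str(total)
    if total == 1:
        return var_l
    if total == -1:
        return "-" + var_l
    return f"{total}{var_l}"
```

Predicates return only true or false about a given expression, whereas operators compute new objects that a learned rule can use in its action.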

To learn cognitive skills, SimStudent generalizes examples of individual skill applications. Two types of examples must be given to SimStudent: (1) positive examples that show when to apply a particular skill, and (2) negative examples that show when not to apply a particular skill.

Positive examples are acquired from (1) steps demonstrated in worked-out examples, (2) steps demonstrated as a hint during tutoring, and (3) steps performed correctly by SimStudent itself during tutoring. In each case, the context of the skill application (i.e., the problem status) is stored as a positive example for that particular skill.

Negative examples are acquired when either (1) a positive example is generated, or (2) SimStudent makes an error during tutoring. When a positive example is generated for a certain skill, say S, that example also becomes a negative example for all skills other than S. Such an example is called an implicit negative example. An implicit negative example becomes a positive example if the corresponding skill is later applied in the specified situation.
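The bookkeeping of positive, explicit negative, and implicit negative examples described above can be sketched as follows. The class ExampleStore, its method names, and the use of plain strings for problem states are all hypothetical; the sketch only illustrates the rules stated in the text, not SimStudent's internal data structures.

```python
from collections import defaultdict


class ExampleStore:
    """Hypothetical store of examples, keyed by skill name."""

    def __init__(self, skills):
        self.skills = set(skills)
        self.positive = defaultdict(set)
        self.negative = defaultdict(set)           # errors made during tutoring
        self.implicit_negative = defaultdict(set)  # derived from other skills' positives

    def add_positive(self, skill, state):
        """Record a correct application of `skill` in problem state `state`."""
        self.positive[skill].add(state)
        # An implicit negative for this skill is promoted to a positive.
        self.implicit_negative[skill].discard(state)
        # The same state becomes an implicit negative for every other skill,
        # unless that skill already has it as a positive example.
        for other in self.skills - {skill}:
            if state not in self.positive[other]:
                self.implicit_negative[other].add(state)

    def add_error(self, skill, state):
        """Record an incorrect application of `skill` as an explicit negative."""
        self.negative[skill].add(state)
```

For instance, after add_positive("multiply", "x/3=5") the state "x/3=5" is an implicit negative for every other skill; a later add_positive("add", "x/3=5") would move it into the positive set for "add".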

Given a set of positive and negative examples for a skill, SimStudent generates a hypothesis (in the form of a production rule) representing when and how to apply the skill. The hypothesis is generated so that it applies to all of the positive examples and to none of the negative examples.
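The consistency requirement (cover every positive example, no negative example) can be made concrete with a deliberately simple sketch. It searches conjunctions of feature predicates by brute force, which is a stand-in for illustration and not the Inductive Logic Programming procedure SimStudent actually uses. The function find_consistent_condition and the predicates has_number and has_denominator are hypothetical.

```python
from itertools import combinations


def lhs(equation):
    """Left-hand side of an equation string such as 'x/3=5'."""
    return equation.split("=")[0]


def has_number(equation):
    """Hypothetical weak predicate: some digit appears on the left-hand side."""
    return any(ch.isdigit() for ch in lhs(equation))


def has_denominator(equation):
    """Hypothetical strong predicate: the left-hand side is written as a fraction."""
    return "/" in lhs(equation)


def find_consistent_condition(predicates, positives, negatives):
    """Return the smallest conjunction of predicate names that holds for
    all positive examples and for no negative example, or None."""
    names = sorted(predicates)
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            def holds(example, combo=combo):
                return all(predicates[name](example) for name in combo)
            if all(holds(p) for p in positives) and not any(holds(n) for n in negatives):
                return combo
    return None


strong = {"has_number": has_number, "has_denominator": has_denominator}
weak = {"has_number": has_number}
condition_strong = find_consistent_condition(strong, ["x/3=5"], ["4x=2"])
condition_weak = find_consistent_condition(weak, ["x/3=5"], ["4x=2"])
```

In this toy setting, the richer predicate set can separate x/3=5 from 4x=2, while the weak set alone cannot, so no consistent condition exists until more discriminating knowledge is available.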

Background and Significance

A number of models of student errors have been proposed so far (Brown & Burton, 1978; Langley & Ohlsson, 1984; Sleeman, Kelly, Martinak, Ward, & Moore, 1989; Weber, 1996; Young & O'Shea, 1981). Our effort builds on this past work by exploring how differences in prior knowledge affect the nature of the incorrect skills acquired and the errors derived from them. We are particularly interested in errors that are made by applying incorrect skills, and our computational model explains the process of learning such incorrect skills as incorrect induction from examples.

We hypothesize that incorrect generalizations are more likely when students have weaker, more general prior knowledge for encoding incoming information. This knowledge is typically perceptually grounded, in contrast to deeper or more abstract encoding knowledge. An example of such perceptually grounded prior knowledge is recognizing the 3 in x/3 simply as a number instead of as a denominator. Such an interpretation might lead students to learn an inappropriate generalization such as "multiply both sides by a number in the left-hand side of the equation" after observing x/3=5 become x=15. If this generalization is applied to an equation like 4x=2, it produces the error of multiplying both sides by 4, yielding 16x=8.

We call this type of perceptually grounded prior knowledge "weak" prior knowledge, in a sense similar to Newell and Simon's (1972) weak reasoning methods. Weak knowledge can apply across domains and can yield successful results prior to domain-specific instruction. However, in contrast to "strong" domain-specific knowledge, weak knowledge is more likely to lead to incorrect conclusions.

In general, a particular example can be modeled with both weak and strong operators. For example, suppose the step x/3=5 is demonstrated as "multiply by 3." This step can be explained by a strong operator getDenominator(x/3), which returns the denominator of a given fraction term, followed by multiplying both sides by that number. On the other hand, the same step can be explained by a weak operator getNumberStr(x/3), which returns the left-most number in a given expression. In this context, the operator getNumberStr() is considered to be weaker than the operator getDenominator(), because a production rule with getNumberStr() explains a broader range of errors. For example, imagine how we could model the error schema for "multiply by A." This error schema can be modeled with getNumberStr() and multiply(): get a number and multiply both sides by that number. Without the weak operator, we would need different (disjunctive) production rules to model the same error schema for different problem schemata: getNumerator() for A/v=C and getCoefficient() for Av=C.
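The difference in coverage between the two operators can be seen in a short sketch. The Python names get_denominator and get_number_str follow the operators named above, but the regular-expression implementations are assumptions for illustration, not SimStudent's code.

```python
import re


def get_denominator(term):
    """Strong operator (sketch): the denominator of a fraction term such as
    'x/3', or None if the term is not a fraction with a numeric denominator."""
    match = re.fullmatch(r"[^/]+/(\d+)", term)
    return match.group(1) if match else None


def get_number_str(term):
    """Weak operator (sketch): the left-most number in the expression,
    or None if the expression contains no digits."""
    match = re.search(r"\d+", term)
    return match.group(0) if match else None


# Both operators explain the demonstrated step on x/3=5 ("multiply by 3") ...
demonstrated = (get_denominator("x/3"), get_number_str("x/3"))

# ... but only the weak operator also fires on 4x and 4/x, which is how a rule
# built on it reproduces the "multiply by A" error across problem schemata.
weak_matches = [get_number_str(t) for t in ("4x", "4/x")]
strong_matches = [get_denominator(t) for t in ("4x", "4/x")]
```

A rule built on the strong operator simply does not apply to 4x=2, whereas a rule built on the weak operator applies and proposes multiplying both sides by 4.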

Human Students Error Analysis

Research Question

How do differences in prior knowledge affect the type and rate of learning errors? In particular, does "weak" prior knowledge foster more induction errors than "strong" prior knowledge, and if so, to what extent does learning with "weak" prior knowledge account for the errors that (human) students commonly make?

Hypothesis

Since "weak" prior knowledge applies to a broader range of contexts than "strong" prior knowledge, SimStudent, when given "weak" prior knowledge, is expected to learn overly general rules that produce more human-like errors.

Study Variables

Independent Variable

Prior knowledge: implemented as "operators" and "feature predicates" for SimStudent.

Dependent Variables

Step score: For a quantitative assessment, we computed a step score for each step in the test problems. SimStudent is allowed to show all possible rule applications on the step. The step score is 0 if none of these rule applications is correct; otherwise, it is the ratio of the number of correct rule applications to the number of all rule applications.
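As a minimal sketch of this definition, the step score can be computed from a list of flags, one per rule application shown on the step, each marking whether that application was correct. The function name step_score and the list-of-booleans representation are assumptions for illustration.

```python
def step_score(applications_correct):
    """Step score for one step.

    `applications_correct` holds one boolean per rule application that
    SimStudent shows on the step (True if that application is correct).
    """
    n_correct = sum(1 for ok in applications_correct if ok)
    if n_correct == 0:
        # Covers both "no rule fired" and "every rule application was wrong".
        return 0.0
    return n_correct / len(applications_correct)
```

For example, a step on which one of three rule applications is correct scores 1/3, so rules that over-apply lower the score even when a correct application is also available.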

Error prediction: For a qualitative assessment, we are particularly interested in the errors made by applying learned rules, as well as in the accuracy of prediction. Given a step S performed by a human student at an intermediate state N, SimStudent is asked to compute a conflict set on N. Each rule application Ri (i = 1, …, n) in the conflict set is coded as follows:

- True Positive: Ri yields the same step as S, and S is a correct step.

- False Positive: Ri yields a correct step that is not the same as S (S may be incorrect).

- False Negative: Ri yields an incorrect step that is not the same as S (S may be correct).

- True Negative: Ri yields the same step as S and S is an incorrect step.

Error prediction is computed as True Negative / (True Negative + False Negative) to understand how well SimStudent predicted human-like errors.
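The coding scheme and the error prediction measure above can be sketched as follows. The function names, the string labels "TP", "FP", "FN", and "TN", and the choice to return None when no incorrect rule application exists are assumptions made for this illustration.

```python
from collections import Counter


def code_rule_application(rule_step, rule_step_is_correct,
                          student_step, student_step_is_correct):
    """Code one rule application Ri against the human student's step S."""
    if rule_step == student_step:
        # Same step as the student: correct -> TP, incorrect -> TN.
        return "TP" if student_step_is_correct else "TN"
    # Different step from the student: correct -> FP, incorrect -> FN.
    return "FP" if rule_step_is_correct else "FN"


def error_prediction(codes):
    """True Negative / (True Negative + False Negative), or None if undefined."""
    counts = Counter(codes)
    denominator = counts["TN"] + counts["FN"]
    return counts["TN"] / denominator if denominator else None


# Illustrative (made-up) conflict set for the state 4x=2, where the human
# student incorrectly multiplied both sides by 4.
student_step, student_ok = "multiply by 4", False
conflict_set = [("multiply by 4", False), ("divide by 4", True), ("multiply by 2", False)]
codes = [code_rule_application(step, ok, student_step, student_ok)
         for step, ok in conflict_set]
```

Here the three rule applications are coded TN, FP, and FN respectively, so the error prediction for this single step is 0.5.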

Findings

Learning Curve

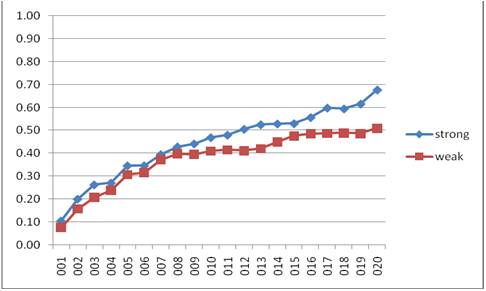

Figure 1 shows the average step score, aggregated across the test problems, for each prior-knowledge condition. The X-axis shows the number of training iterations.

The Weak-PK and Strong-PK conditions had similar success rates on the test problems after the first 8 training problems. After that, the performance of the two conditions began to diverge. On the final test after 20 training problems, the Strong-PK condition was 82% correct while the Weak-PK condition was 66% correct, a large and statistically significant difference (t = 4.00, p < .001).

A simple fit of power-law functions to the learning curves (converting success rate to log-odds) showed that the slope (or rate) of the Weak-PK learning curve (.78) is smaller (or slower) than that of the Strong-PK learning curve (.82). We then subtracted the two functions in their log-log form and verified in a linear regression analysis that the coefficient of the number of training problems (which predicts the difference in rate) is significantly greater than 0 (p < .05).
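One way to read this fitting procedure is sketched below, under the assumption that the odds of success follow a power law in the number of training problems, odds = a * n^b, so that log(odds) is linear in log(n) and the slope b can be estimated by ordinary least squares. The function fit_power_law and the data are hypothetical; the numbers are synthetic and are not the study's results.

```python
import math


def fit_power_law(num_problems, success_rates):
    """Fit odds = a * n**b by least squares on log(odds) versus log(n).

    Returns (a, b). Success rates must lie strictly between 0 and 1.
    """
    xs = [math.log(n) for n in num_problems]
    ys = [math.log(p / (1.0 - p)) for p in success_rates]  # log-odds
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope


# Synthetic curve generated from known parameters (a = 0.5, b = 0.8),
# used only to check that the fit recovers them.
ns = [1, 2, 4, 8, 16]
odds = [0.5 * n ** 0.8 for n in ns]
rates = [o / (1.0 + o) for o in odds]
a_hat, b_hat = fit_power_law(ns, rates)
```

Under this reading, comparing two conditions amounts to comparing their fitted slopes, which is what the regression on the difference of the two log-log functions tests.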

Figure 1: Average step score after each of the 20 training problems for SimStudents with either strong or weak prior knowledge.

Error Prediction

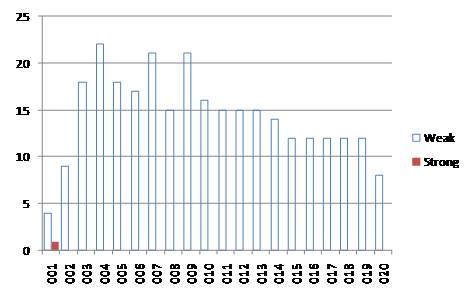

Figure 2 shows the number of true negative predictions made on the test problems for each of the training iterations.

Surprisingly, the Weak-PK condition made as many as 22 human-like errors on the 11 test problems. The Strong-PK condition, on the other hand, made hardly any human-like errors.

Figure 2: Number of True Negative predictions, that is, errors made by both SimStudent and human students on the same step in the test problems.

Publications

- Matsuda, N., Lee, A., Cohen, W. W., & Koedinger, K. R. (2009; to appear). A Computational Model of How Learner Errors Arise from Weak Prior Knowledge. In Conference of the Cognitive Science Society.

References

- Booth, J. L., & Koedinger, K. R. (2008). Key misconceptions in algebraic problem solving. In B. C. Love, K. McRae & V. M. Sloutsky (Eds.), Proceedings of the 30th Annual Conference of the Cognitive Science Society (pp. 571-576). Austin, TX: Cognitive Science Society.

- Muggleton, S. (1999). Inductive Logic Programming: Issues, results and the challenge of Learning Language in Logic. Artificial Intelligence, 114(1-2), 283-296.

- Cypher, A. (Ed.). (1993). Watch what I do: Programming by Demonstration. Cambridge, MA: MIT Press.