Klahr - TED

Latest revision as of 19:07, 9 September 2011

Project Overview

TED (Training in Experimental Design)

Co-PI: Dr. David Klahr, Carnegie Mellon University, Department of Psychology

Co-PI: Dr. Stephanie Siler, Carnegie Mellon University, Department of Psychology

Abstract

The aim of this project on Training in Experimental Design (TED) is to develop a computer-based intelligent tutoring system to improve science instruction in late elementary through middle school grades. The proposed intervention focuses on the conceptual understanding and procedural skills of designing and interpreting scientific experiments. The primary support for the project has come from the Institute of Education Sciences over the past 6 years. The specific aspect of TED that is supported by the PSLC's cognitive thrust involves a professional development component, in which the TED researchers work with a teacher in the Pittsburgh Public Schools' new Science and Technology Academy to develop effective implementations of TED and to create plausible comparisons between TED's method and content and those of "normal" teacher-delivered instruction on experimental design. Although the long-term aim of the TED project is to transform TED-1 from a non-adaptive "straight line" instructional delivery system into an intelligent adaptive tutor (TED-2), the current PSLC project involves empirical studies comparing human-delivered instruction with TED-1, which delivers a non-adaptive lesson plan.

Background and Significance

A thorough understanding of the “Control of Variables Strategy” (CVS) is essential for doing and understanding experimental science, whether it is school children studying the effect of sunlight on plant growth or consumers attempting to assess reports of the latest drug efficacy study. Fundamental as CVS is to the scientific enterprise, it tends to be taught in a shallow manner. Typically, only its procedures are explicitly taught, while the conceptual basis for why those procedures are necessary and sufficient for causal inference is seldom addressed. Brief statements about experimental design procedures, without subsequent instruction about the rationale for such designs, appear to be the norm in science textbooks, though there are notable exceptions (e.g., Hsu, 2002). Even when students are provided with rich interactive contexts in which to design experiments (Kali & Linn, 2008; Kali, Linn, & Roseman, 2008), the focus for any particular module is on using experimentation to advance domain knowledge, rather than on CVS per se as a domain-general acquisition.

The consequences of meager levels of CVS instruction are clear. A substantial body of research evidence shows that, absent explicit instruction, CVS is not easily learned. For example, Kuhn, Garcia-Mila, Zohar and Andersen’s (1995) classic study used a variety of scientific discovery tasks in which participants explored the effects of several variables, and demonstrated that even after 20 sessions spread over 10 weeks, fewer than 25% of 4th graders’ inferences were valid. Other studies of children’s understanding of evidence generation and interpretation (e.g., Amsel & Brock, 1996; Chen & Klahr, 1999; Bullock & Ziegler, 1996; Dean & Kuhn, 2007; Klahr, 2000; Klahr & Nigam, 2004; Schauble, Klopfer, & Raghavan, 1991; Strand-Cary & Klahr, 2008) reveal their fragile initial grasp of CVS and the difficulty that they have in learning it.

Over the past three years, our project has developed computerized instruction (“TED” for “Training in Experimental Design”) based on the method of “direct” CVS instruction found effective by Klahr and colleagues (e.g., Chen & Klahr, 1999; Klahr & Nigam, 2004; Strand-Cary & Klahr, 2008). We recently compared student learning and transfer from TED-delivered instruction to the same instruction delivered by human tutors using physical materials and found no outcome differences. However, “direct” CVS instruction has never been compared to other lessons aimed at teaching CVS; thus, we wanted to know how TED-delivered instruction compared to a teacher-delivered lesson on CVS in a school curriculum. Findings that TED-delivered instruction leads to higher transfer rates than the control lesson would be practical justification for incorporating TED within the science curriculum.

Furthermore, in prior analyses, we found that students’ complete causal explanations of CVS given during the “direct” instruction were significantly correlated with transfer performance. In a complete causal explanation, students explained that an unconfounded experiment was “good” because only the target variable could cause any differences in outcomes, or that an experiment was not good because variables other than the target variable could also cause differences in outcomes, so that it is not possible to know whether the target variable was causal. Thus, we were interested in whether the addition of more directive questions in TED, asking students to identify the potential causal factors in experiments, would lead to improved transfer performance. Increases in transfer rates would support the hypothesis that understanding the determinacy/indeterminacy of outcomes is a key knowledge component for CVS transfer.

Glossary

- CVS: control of variables strategy. The procedures for designing simple, unconfounded experiments by varying only one thing at a time and keeping all others constant.

- Target variable: the factor whose values are varied in order to determine whether or not that factor is causal with respect to the outcome.

- Direct Instruction vs Discovery Learning: two important and contentious constructs that are extraordinarily difficult to define unless clear operational descriptions are provided that fully convey the instructional context for each. See Tobias & Duffy (2009) and Strand-Cary & Klahr (2008) for a full discussion.
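The CVS and target-variable definitions above can be illustrated with a minimal Python sketch. It treats an experiment as a comparison of two setups and checks whether only the target variable differs between them. The function names and the ramp variables (`steepness`, `surface`, `ball`) are hypothetical, chosen for illustration; they are not taken from the TED tutor itself.

```python
def varied_variables(setup_a, setup_b):
    """Return the names of variables whose values differ between two setups."""
    return {name for name in setup_a if setup_a[name] != setup_b[name]}


def potential_confounds(setup_a, setup_b, target):
    """Variables other than the target that also differ (possible rival causes)."""
    return varied_variables(setup_a, setup_b) - {target}


def is_unconfounded(setup_a, setup_b, target):
    """True when the target variable is the only thing that differs."""
    return varied_variables(setup_a, setup_b) == {target}


# Hypothetical ramp setups (illustrative values only).
ramp_a = {"steepness": "high", "surface": "smooth", "ball": "rubber"}
ramp_b_good = {"steepness": "low", "surface": "smooth", "ball": "rubber"}
ramp_b_bad = {"steepness": "low", "surface": "rough", "ball": "rubber"}

print(is_unconfounded(ramp_a, ramp_b_good, "steepness"))
print(sorted(potential_confounds(ramp_a, ramp_b_bad, "steepness")))
```

In the second comparison, `surface` differs along with the target, so any difference in outcome cannot be attributed to `steepness` alone.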

Research Questions

How does the TED tutor—even in its “non-intelligent” state—compare with an alternative lesson on CVS delivered by a live teacher in terms of CVS transfer rates, student enjoyment of the lesson, and of science more generally?

Does making a hypothesized “key” knowledge component more explicit by asking targeted questions lead to improved transfer performance? That is, does also asking students to identify the possible causal factors in a confounded experiment (i.e., the reason why it is necessary to control variables) promote far transfer beyond simply telling students?

Independent Variables

The study compared three conditions in which two classes of 8th-grade students at a local school, both taught by the same teacher, received instruction in CVS in their regular science classrooms. The first independent variable manipulated was the instructional lesson; in one classroom, students received a teacher-delivered CVS lesson from the CPO science textbook (Condition 1), and in the other classroom students received TED-delivered CVS instruction (Conditions 2.1 and 2.2).

- (Condition 1) The control condition, in which students participated in a whole-class teacher-directed lesson with a laboratory component, as aligned with their science textbook and curriculum.

The second independent variable, manipulated within the two TED conditions, is the addition of causal questions.

- (Condition 2.1) “Explanation only” (EO) TED condition, in which students used a computerized tutor to cover the same content, i.e., to learn how and why to create controlled experiments.

- (Condition 2.2) “Added Questions” (AQ) TED condition, in which students used a computerized tutor that had been slightly modified to include an additional question during each exercise that asked them to identify which variables, if any, could affect experimental results.

Dependent Variables

- Ramps test (TED conditions only), in which students design four experiments, each to test a different variable. Because both the domain and task demands are the same as those of instruction, this is considered a measure of near transfer.

- “Story” test (all conditions), in which students design 3 experiments and evaluate 3 experiments in three different contexts (designing rockets, baking cookies, and selling drinks). Because this assessment covers problems that have similar task demands, but with formatting and domains that differ from the content of the lesson, we consider this to be an assessment of far-transfer.

- “Standardized question” test (all conditions), composed of four CVS problems gleaned from age-appropriate standardized tests (TIMSS and NAEP). Because the items on this assessment differed from instruction in both domain and task demands, we consider this to be an assessment of distant transfer.

Study Procedure

Table 1. Study Procedure.

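| | Control | TED |
| --- | --- | --- |
| Story Pre-Test | (One day before intervention) (computerized; 6 questions: 3 design; 3 evaluate + explanations) | (same as Control) |
| Intervention | Day 1: Intro to lesson (including statement of CVS logic); intro to experiment (experimental question, hypothesis); ran (hands-on) ramps experiment; discussed results/confounds (CVS logic). Day 2: Re-run controlled experiment | Day 1: Video intro to lesson; ramps intro and pretest; explicit instruction (EO or AQ), experiment #1. Day 2: Explicit instruction (experiments 2 & 3); video summary; ramps posttest |
| Immediate Story Post-Test | (Day 2) (computerized; 6 questions: 3 design; 3 evaluate + explanations) | (same as Control) |
| Immediate Standardized posttest | (Day 2) (paper/pencil; 4 questions) | (same as Control) |
| (Three weeks later…after some intervening class instruction that touched on CVS.) | | |
| Motivational survey | (paper/pencil: Students were asked how much they enjoyed different portions of instruction and if the lesson affected their interest in science) | (same as Control) |
| Story follow-up | (paper/pencil) | (same as Control) |
| Standardized follow-up | (paper/pencil) | (same as Control) |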

Findings

TED: Explanation only vs. Additional Questions comparisons.

Immediate near-transfer performance

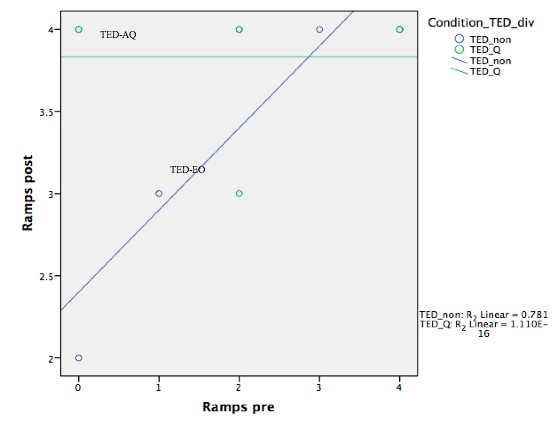

Ramps posttest (near-transfer) performance: Comparing TED-AQ students to TED-EO students, there was a significant ramps pretest-by-condition interaction (Figure 1 below): ramps pretest score was significantly related to ramps posttest score only for students in the EO condition. Thus, the added question aided near-transfer performance, especially for the lowest-scoring students.

Figure 1. Ramps post by pre and condition.

Immediate far-transfer performance

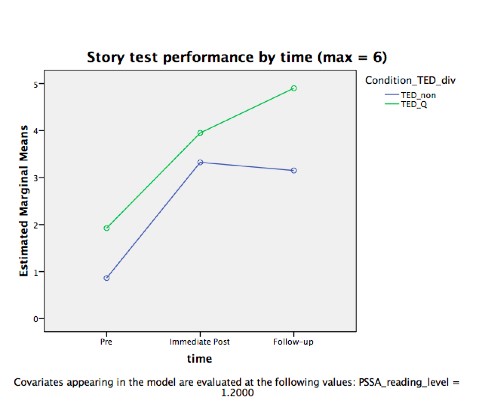

As shown in Figure 2 (and Table 2), TED students who were asked the additional question did not significantly out-perform students who were not asked this question on the immediate Story posttest, F(1, 8) = 0.16, p = .70. Similarly, there was no difference in performance on the immediate standardized posttest, F(1, 8) = 1.64, p = .24.
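As a rough consistency check on F statistics of this form, the following Python sketch recomputes a p-value from an F value with one numerator degree of freedom. It relies on the standard identity that F(1, df) equals the square of a t statistic with df degrees of freedom, and integrates the t density numerically with Simpson's rule. The function names are illustrative and the sketch is not the analysis software used in the study.

```python
import math


def t_pdf(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def p_from_f(f_value, df_error, steps=2000):
    """Two-sided p-value for F(1, df_error), using F = t**2.

    Integrates the t density from 0 to sqrt(F) with Simpson's rule
    (steps must be even) and returns the probability outside [-t, t].
    """
    t = math.sqrt(f_value)
    h = t / steps
    total = t_pdf(0.0, df_error) + t_pdf(t, df_error)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df_error)
    central_half = total * h / 3  # area between 0 and t
    return 1 - 2 * central_half


# Values reported in the paragraph above: (F, error df, reported p).
for f_value, df_error, reported in [(0.16, 8, 0.70), (1.64, 8, 0.24)]:
    print(f"F(1, {df_error}) = {f_value}: p = {p_from_f(f_value, df_error):.3f} (reported {reported})")
```

The same function can be applied to any F statistic with one numerator degree of freedom; statistics with more numerator degrees of freedom would need the full F distribution instead.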

Table 2. Means (and standard deviations) by test type and time.

| Condition | Immediate: Story | Immediate: Standardized | Follow-Up: Story | Follow-Up: Standardized |
| --- | --- | --- | --- | --- |
| Additional Question | 4.17 (1.33) | 2.00 (0.89) | 5.17 (1.60) | 3.40 (1.14) |
| Explanation only | 3.00 (2.45) | 2.40 (0.89) | 2.75 (2.75) | 2.80 (1.64) |

Follow-up performance

On the follow-up Story posttest, though students in the question condition tended to score higher than those in the explanation-only condition (Table 2), this difference did not reach significance, F(1, 7) = 3.44, p = .11. Story pretest and reading level (where below basic = 0; basic = 1; proficient = 2; advanced = 3) were included in the ANCOVA. As in the immediate standardized posttest, there was no difference between conditions in performance on the follow-up standardized posttest, F(1, 7) = 0.02, p = .90.

Immediate to follow-up gains

However, the added-question students showed marginally higher Story test gains from the immediate to follow-up posttest, F(1, 10) = 4.55, p = .06 (ANCOVA, factoring out Story posttest; reading level was not a significant source of variance and was removed from the model). The corresponding repeated-measures ANOVA gave F(1, 11) = 5.17, p = .04. Students who answered the additional questions gained significantly between the immediate and follow-up posttests, whereas the performance of students in the explanation-only condition did not differ from immediate to follow-up posttest.
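To relate these gains to the raw means in Table 2, here is a small Python sketch that subtracts each condition's immediate Story mean from its follow-up Story mean. This is only a difference of group means; it would match the mean individual gain only if the same students took both tests, which is an assumption here.

```python
# Story test means from Table 2: (immediate, follow-up).
story_means = {
    "Additional Question": (4.17, 5.17),
    "Explanation only": (3.00, 2.75),
}

# Difference of group means, rounded to two decimals.
gains = {
    condition: round(follow_up - immediate, 2)
    for condition, (immediate, follow_up) in story_means.items()
}

for condition, gain in gains.items():
    print(f"{condition}: {gain:+.2f}")
```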

Figure 2. Story performance by time and condition.

Control vs. TED

Next, we discuss comparisons of TED to Control instruction. First, it is important to note that instruction in the Control condition took significantly longer (by 40 minutes) than in the TED conditions. Furthermore, students in the TED conditions took significantly less time than students in the Control condition to complete the immediate Story posttest (6.88 and 9.85 minutes, respectively, p < .01).

Immediate performance

Story posttest performance: Students in the TED conditions scored significantly higher on the immediate Story posttest (Table 3 and shown in Figure 3) than students in the Control condition, F(1, 22) = 4.78, p = .04, factoring out Story pretest and reading level, both of which were significantly correlated with Story posttest score.

Table 3. Means (and standard errors) for immediate posttests.

| Condition | Story Post | Adjusted Story Post<sup>a</sup> | Standardized | Adjusted Standardized<sup>b</sup> |
| --- | --- | --- | --- | --- |
| Control | 2.53 (2.23) | 2.58 (0.45) | 2.57 (1.22) | 2.60 (0.27) |
| TED | 3.50 (2.10) | 4.08 (0.51) | 2.18 (0.87) | 2.14 (0.31) |

<sup>a</sup> adjusted for Story pretest, reading level

<sup>b</sup> adjusted for Story pretest (reading level was not significantly related to standardized test)

However, there were no differences on the four standardized items (Table 3), F(1, 22) = 1.25, p = .28, again factoring out both Story pretest and reading level.

Table 4. Means (and standard errors) for follow-up posttests.

| Condition | Story | Adjusted Story<sup>a</sup> | Standardized | Adjusted Standardized<sup>b</sup> |
| --- | --- | --- | --- | --- |
| Control | 3.21 (2.12) | 3.13 (0.49) | 2.71 (0.99) | 2.74 (0.27) |
| TED | 4.20 (2.35) | 4.32 (0.58) | 3.00 (1.41) | 2.96 (0.34) |

<sup>a</sup> adjusted for Story pretest, reading level

<sup>b</sup> adjusted for Story pretest (reading level was not significantly related to measure)

Follow-up performance

On the Story follow-up paper-pencil test taken three weeks after instruction, though TED students tended to score higher than Control students (Table 4), this difference was no longer significant, F(1, 20) = 2.44, p = .13. Both Control and TED-AQ students (though not TED-EO students) gained significantly between the immediate and follow-up Story test. These gains may be due to teacher-reported intervening incidental in-class discussions of CVS in subsequent lessons on scientific method.

As with the immediate test, on the standardized follow-up test, there was no difference between conditions (Table 4), F(1, 20) = 0.25, p = .62.

Motivational Survey

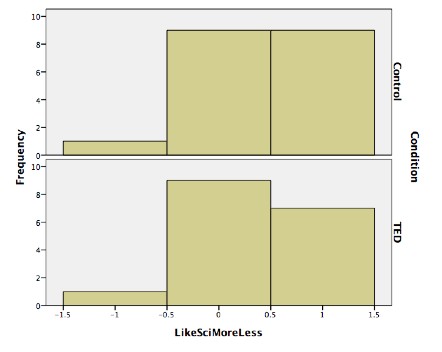

Finally, there was no difference between the Control and TED conditions in students’ reports of the effect of the lesson on how much they like science (Figure 3).

Figure 3. Frequency of responses to “How would you say doing this lesson made you feel about science (check one)?”

Explanation

In summary, comparing the Control group with the TED groups, students in the TED conditions scored significantly higher on the immediate far-transfer Story posttest, but there were no differences on the distant-transfer “standardized” immediate or follow-up posttests. Though students in the TED conditions continued to outperform their Control counterparts on the follow-up Story posttest, this difference was no longer significant. It should be reiterated, however, that students in the TED conditions spent 40 minutes less completing their lesson than students in the Control condition, thus achieving greater or equal scores on the posttests while spending much less time covering the content. Thus, the TED-delivered instruction was more efficient than Control instruction. Furthermore, there were no differences in students’ reports of the impact of the lesson on their liking of science, even though students in the Control condition performed experiments using expensive ramps apparatuses. Thus, TED instruction fared well against a solid comparison lesson on CVS.

Furthermore, within the TED condition, students given the additional questions requiring them to identify all potential causal variables showed higher near-transfer performance on the ramps posttest. Though they did not demonstrate better performance on the immediate Story posttest, they gained significantly more from the Story posttest to the Story follow-up test. These results support the hypothesis that understanding the determinacy or indeterminacy of experimental designs supports both initial learning of and transfer performance for CVS. However, these results should be replicated before drawing firm conclusions.

References Cited

Amsel, E., & Brock, S. (1996). The development of evidence evaluation skills. Cognitive Development, 11, 523-550.

Bullock, M. & Ziegler, A. (1996). Thinking scientifically: conceptual or procedural problem? Second International Baltic Psychology Conference, Tallinn, Estonia.

Dean, D., & Kuhn, D. (2007). Direct instruction vs. discovery: The long view. Science Education, 91, 384-397.

Hsu, T. (2002). Integrated Physics and Chemistry. Peabody, MA: CPO Science.

Kali, Y. & Linn, M. C. (2008). Technology-Enhanced Support Strategies for Inquiry Learning. In J. M. Spector, M. D. Merrill, J. J. G. Van Merriënboer, & M. P. Driscoll (Eds.), Handbook of Research on Educational Communications and Technology (3rd Edition, pp. 145-161). New York: Lawrence Erlbaum Associates.

Kali, Y., Linn, M. C., & Roseman, J. E. (Eds.). (2008). Designing Coherent Science Education. New York: Teachers College Press.

Kuhn, D., Garcia-Mila, M., Zohar, A., & Andersen, C. (1995). Strategies of knowledge acquisition. Monographs of the Society for Research in Child Development, 60(4), Serial no. 245.

Klahr, D. (2000). Exploring Science: The Cognition and Development of Discovery Processes. Cambridge, MA: MIT Press.

Klahr, D. & Nigam, M. (2004) The equivalence of learning paths in early science instruction: effects of direct instruction and discovery learning. Psychological Science, 15, 661-667.

Pottenger & Young (1992). The local environment. Curriculum and Development Group: Honolulu.

Strand-Cary, M. & Klahr, D. (2008). Developing Elementary Science Skills: Instructional Effectiveness and Path Independence. Cognitive Development, 23, 488–511.

Schauble, L., Klopfer, L., & Raghavan, K. (1991). Students’ transition from an engineering model to a science model of experimentation. Journal of Research in Science Teaching, 18(9), 859-882.

Bibliography

The current TED project is the outgrowth of several years of empirical and theoretical work in our lab on different instructional approaches, different instructional media (physical vs virtual instructional materials), different theoretical perspectives on transfer, and the impact of different SES levels on the effectiveness of different types of instruction. Below we list some of our publications related to this project, as well as other papers, some extending and replicating our work (Lorch, et al) and some critiquing our approaches and interpretations (Kuhn, and Dean & Kuhn).

Chen, Z. & Klahr, D. (1999) All Other Things being Equal: Children's Acquisition of the Control of Variables Strategy. Child Development, 70 (5), 1098-1120.

Chen, Z. & Klahr, D., (2008) Remote Transfer of Scientific Reasoning and Problem-Solving Strategies in Children. In R. V. Kail (Ed.) Advances in Child Development and Behavior, Vol. 36. (pp. 419 – 470) Amsterdam: Elsevier.

Dean, D., & Kuhn, D. (2007). Direct instruction vs. discovery: The long view. Science Education, 91, 384 – 397.

Klahr, D. (2009) “To every thing there is a season, and a time to every purpose under the heavens”: What about Direct Instruction? In S. Tobias and T. M. Duffy (Eds.) Constructivist Theory Applied to Instruction: Success or Failure? Taylor and Francis.

Klahr, D. & Li, J. (2005) Cognitive Research and Elementary Science Instruction: From the Laboratory, to the Classroom, and Back. Journal of Science Education and Technology, 4, 217-238.

Klahr, D. & Nigam, M. (2004) The equivalence of learning paths in early science instruction: effects of direct instruction and discovery learning. Psychological Science, 15, 661-667.

Klahr, D., Triona, L. M., & Williams, C. (2007) Hands On What? The Relative Effectiveness of Physical vs. Virtual Materials in an Engineering Design Project by Middle School Children. Journal of Research in Science Teaching , 44, 183-203.

Klahr, D., Triona, L., Strand-Cary, M., & Siler, S. (2008) Virtual vs. Physical Materials in Early Science Instruction: Transitioning to an Autonomous Tutor for Experimental Design. In Jörg Zumbach, Neil Schwartz, Tina Seufert and Liesbeth Kester (Eds) Beyond Knowledge: The Legacy of Competence Meaningful Computer-based Learning Environments. SpringerLink.

Kuhn, D. (2007). Is direct instruction an answer to the right question? Educational Psychologist, 42(2), 109-113.

Li, J. & Klahr, D. (2006). The Psychology of Scientific Thinking: Implications for Science Teaching and Learning. In J. Rhoton & P. Shane (Eds.) Teaching Science in the 21st Century. National Science Teachers Association and National Science Education Leadership Association: NSTA Press.

Li, J., Klahr, D. & Siler, S. (2006). What Lies Beneath the Science Achievement Gap? The Challenges of Aligning Science Instruction with Standards and Tests. Science Educator, 15, 1-12.

Lorch, R.F., Jr., Calderhead, W.J., Dunlap, E.E., Hodell, E.C., Freer, B.D., & Lorch, E.P. (2008). Teaching the control of variables strategy in fourth grade. Presented at the Annual Meeting of the American Educational Research Association, New York, March 23-28.

Lorch, E.P., Freer, B.D., Hodell, E.C., Dunlap, E.E., Calderhead, W.J., & Lorch, R.F., Jr. (2008). Thinking aloud interferes with application of the control of variables strategy. Presented at the Annual Meeting of the American Educational Research Association, New York, March 23-28.

Strand-Cary, M. & Klahr, D. (2008). Developing Elementary Science Skills: Instructional Effectiveness and Path Independence. Cognitive Development, 23, 488–511.

Toth, E. E., Klahr, D., & Chen, Z. (2000) Bridging Research and Practice: a Cognitively-Based Classroom Intervention for Teaching Experimentation Skills to Elementary School Children. Cognition & Instruction, 18 (4), 423-459.

Triona, L. & Klahr, D. (2008) Hands-on science: Does it matter what the student's hands are on in 'hands-on science'? The Science Education Review.

Triona, L. M. & Klahr, D. (2003) Point and Click or Grab and Heft: Comparing the influence of physical and virtual instructional materials on elementary school students’ ability to design experiments. Cognition & Instruction, 21, 149-173.