
Latest revision as of 15:26, 14 February 2011


Project Title: Generalizing Self-Explanation

Report prepared for PSLC Site Visit, January 2011

PSLC Research Thrust(s): Cognitive Factors

PSLC Learnlab Course(s): English as a Second Language

| PI: Ruth Wylie, Department: HCII, Organization: CMU | PI: Ken Koedinger, Department: HCII, Organization: CMU | PI: Teruko Mitamura, Department: HCII, Organization: CMU |

Others who have contributed 160 hours or more:

PSLC Undergraduate Intern: Melissa Sheng, Rice University

| Study Name/Number | Pilot Study: Modalities of Self-Explanation | Study 1: Practice vs. Self-Explanation Only | Study 2: Analogy, Explanation, and Practice | Study 3: Worked Examples, Explanation, and Practice |

| Study Date | Fall 2008 | Spring 2009 | Fall 2009 | Spring 2010 |

| LearnLab Site | University of Pittsburgh, ELI | University of Pittsburgh, ELI | University of Pittsburgh, ELI | University of Pittsburgh, ELI |

| LearnLab Course | ESL | ESL | ESL | ESL |

| Number of Students | 63 | 118 | 99 | 97 |

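| DataShop | Pre/Post Test Score Data: Yes; Paper or Online Tests: Online; Scanned Paper Tests: n/a; Blank Tests: uploaded soon; Answer Keys: uploaded soon | Pre/Post Test Score Data: Yes; Paper or Online Tests: Online; Scanned Paper Tests: n/a; Blank Tests: uploaded soon; Answer Keys: uploaded soon | Pre/Post Test Score Data: Yes; Paper or Online Tests: Online; Scanned Paper Tests: n/a; Blank Tests: uploaded soon; Answer Keys: uploaded soon | Pre/Post Test Score Data: Yes; Paper or Online Tests: Online; Scanned Paper Tests: n/a; Blank Tests: uploaded soon; Answer Keys: uploaded soon |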

| Study Name/Number | Study 4: Adaptive Self-Explanation Tutor |

| Study Date | Fall 2010 |

| LearnLab Site | University of Pittsburgh, ELI |

| LearnLab Course | ESL |

| Number of Students | 131 |

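| DataShop | Pre/Post Test Score Data: Yes; Paper or Online Tests: Online; Scanned Paper Tests: n/a; Blank Tests: uploaded soon; Answer Keys: uploaded soon |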

Project Plan Abstract

Prompting students to self-explain during problem solving has proven to be an effective instructional strategy across many domains. However, despite being called a “domain general” strategy, very little work has been done in areas outside of math and science. Thus, it remains an open question whether the self-explanation effect will hold in new and different domains like second language grammar learning. Through a series of classroom studies, we compare the effects of four tutoring systems: two that facilitate deep processing (prompted self-explanation and analogical comparison) and two that increase the rate of processing (example study and practice). Results show that while deep processing in general, and self-explanation specifically, is an effective strategy, it may not be an efficient approach compared to practice alone. These results suggest that the benefits of self-explanation over traditional practice may not be truly domain independent.

Achievements

Findings

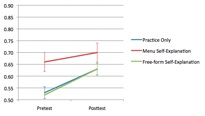

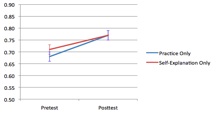

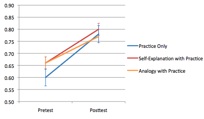

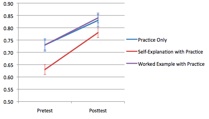

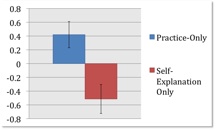

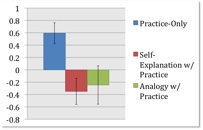

We have conducted four in-vivo studies with plans to do a fifth study during Fall 2010. The first study compared two different forms of self-explanation to an ecologically valid control condition of practice only. We looked at both menu-based self-explanations (students chose the rule from a provided menu) and free-form responses (students typed their explanation into an empty text box). The second study compared self-explanation only to practice only in order to understand the effect that self-explanation alone had on student learning. Studies 3 and 4 introduced new instructional manipulations with the goal of increasing both learning and efficiency. Study 3 used analogical comparisons in an attempt to reduce the metalinguistic demands placed on students, and Study 4 used example study in an attempt to reduce the amount of time students spent working with the tutor. The main finding from this series of studies is that while prompting students to self-explain does lead to significant learning gains, students who self-explain perform no better than students in a traditional practice condition. Furthermore, self-explanation requires significantly more time, which makes it less efficient than practice. These results differ from those found in previous self-explanation studies, which were conducted largely in math and science domains.

| Fig. 1. Study 1 Learning Gains | Fig. 2. Study 2 Learning Gains |

| Fig. 3. Study 3 Learning Gains | Fig. 4. Study 4 Learning Gains |

Figures 1-4: Across all four studies, students in all conditions show significant, but equal, learning gains. Students improve when prompted to self-explain, either alone (Study 2) or paired with tutored practice (Studies 1, 3, and 4), but there is no difference in learning between students who are prompted to self-explain and those who are not.

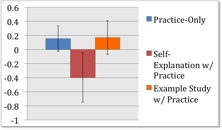

In addition to learning gain analysis, we have also compared efficiency scores across conditions for Studies 2, 3, and 4. Efficiency scores combine learning gains and time to complete instruction into a single measure. These results reveal a similar pattern: practice is much more efficient than self-explanation for learning the English article system.

| Fig. 5. Study 2 Efficiency Analysis | Fig. 6. Study 3 Efficiency Analysis |

| Fig. 7. Study 4 Efficiency Analysis |

Figures 5-7: Efficiency Score Analysis. Across all studies, students in the practice-only condition were much more efficient than those in the self-explanation condition.
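The report does not give the formula behind its efficiency scores. As a rough illustration only, the sketch below assumes one common way of combining the two quantities: standardize learning gain and instructional time across all students, then take their difference scaled by the square root of 2. The function names, the formula choice, and the sample records are all hypothetical and are not taken from the studies.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values to mean 0 and population standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # No variation: every value sits exactly at the mean.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def efficiency_scores(gains, minutes):
    """Return one efficiency score per student.

    Assumed formula (not from the report): E = (z_gain - z_time) / sqrt(2).
    A larger gain raises the score; more time on task lowers it.
    """
    if len(gains) != len(minutes):
        raise ValueError("gains and minutes must have the same length")
    z_gain = z_scores(gains)
    z_time = z_scores(minutes)
    return [(g - t) / sqrt(2) for g, t in zip(z_gain, z_time)]


def mean_efficiency_by_condition(records):
    """records: list of (condition, gain, minutes). Returns {condition: mean score}."""
    scores = efficiency_scores([r[1] for r in records], [r[2] for r in records])
    by_condition = {}
    for (condition, _, _), score in zip(records, scores):
        by_condition.setdefault(condition, []).append(score)
    return {condition: mean(vals) for condition, vals in by_condition.items()}


# Made-up illustrative data: equal gains, but self-explanation takes longer.
records = [
    ("practice", 0.20, 15.0),
    ("practice", 0.25, 18.0),
    ("self-explanation", 0.20, 30.0),
    ("self-explanation", 0.25, 33.0),
]
result = mean_efficiency_by_condition(records)
```

With equal gains and longer time on task, the hypothetical self-explanation group ends up with the lower mean score, which is the pattern the efficiency figures describe. Standardizing over the pooled sample rather than within each condition is a design choice that keeps the two conditions on a common scale.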

These studies address a key PSLC goal of identifying under what conditions instructional strategies lead to robust learning. Our results to date suggest that self-explanation may not be the best form of instruction for all domains. In addition to planning our final study, our current work investigates the effects of self-explanation prompts on robust learning measures like transfer and long-term retention.

Studies

Pilot Study: Free-form versus menu-based self-explanations

- Date: Fall 2008

- LearnLab Site and Courses: English LearnLab, Grammar

- Number of Students: 65 students

- Total Participant Hours for the study: ~50-65 hours

- Data in the Data Shop: Yes
  - [Dataset: IWT Self-Explanation Study 0 (pilot) (Fall 2008) (tutors only)](https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=307)
  - Pre/Post Test Score Data: Yes
  - Paper or Online Tests: Online
  - Scanned Paper Tests: N/A
  - Blank Tests: No
  - Answer Keys: No

Study 1: Self-Explanation Only versus Practice Only

- Date: Spring 2009

- LearnLab Site and Courses: English LearnLab, Grammar

- Number of Students: 118 students

- Total Participant Hours for the study: ~100-120 hours

- Data in the Data Shop: Yes
  - [Dataset: IWT Self-Explanation Study 1 (Spring 2009) (tests only)](https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=312)
  - [Dataset: IWT Self-Explanation Study 1 (Spring 2009) (tutors only)](https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=313)
  - Pre/Post Test Score Data: Yes
  - Paper or Online Tests: Online
  - Scanned Paper Tests: N/A
  - Blank Tests: No
  - Answer Keys: No

Study 2: Analogy, Self-Explanation, and Practice

- Date: Fall 2009

- LearnLab Site and Courses: English LearnLab, Grammar

- Number of Students: 99 students

- Total Participant Hours for the study: ~75-100 hours

- Data in the Data Shop: Yes
  - [Dataset: IWT Self-Explanation Study 2 (Fall 2009) (tests only)](https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=328)
  - [Dataset: IWT Self-Explanation Study 2 (Fall 2009) (tutors only)](https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=372)
  - Pre/Post Test Score Data: Yes
  - Paper or Online Tests: Online
  - Scanned Paper Tests: N/A
  - Blank Tests: No
  - Answer Keys: No

Study 3: Example Study, Self-Explanation, and Practice

- Date: Spring 2010

- LearnLab Site and Courses: English LearnLab, Grammar

- Number of Students: 93 students

- Total Participant Hours for the study: ~80-95 hours

- Data in the Data Shop: Yes
  - [Dataset: IWT Self-Explanation Study 3 (Spring 2010) (tests only)](https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=393)
  - [Dataset: IWT Self-Explanation Study 3 (Spring 2010) (tutors only)](https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=394)
  - Pre/Post Test Score Data: Yes
  - Paper or Online Tests: Online
  - Scanned Paper Tests: N/A
  - Blank Tests: No
  - Answer Keys: No

Study 4: Adaptive Self-Explanation vs. Practice-Only

- Date: Fall 2010

- LearnLab Site and Courses: English LearnLab, Grammar

- Number of Students: 142 students total (tutor data for 131 students)

- Total Participant Hours for the study: ~100 hours

- Data in the Data Shop: Yes

Publications

Wylie, R., Koedinger, K., and Mitamura, T. (submitted) Testing the Generality and Efficiency of Self-Explanation in Second Language Learning.

Wylie, R., Sheng, M., Mitamura, T., and Koedinger, K. (submitted) Effects of Adapted Self-Explanation on Robust Learning of Second Language Grammar.

Wylie, R., Koedinger, K., and Mitamura, T. (2010) Extending the Self-Explanation Effect to Second Language Grammar Learning. International Conference of the Learning Sciences. Chicago, Illinois. June 29-July 2, 2010.

Wylie, R., Koedinger, K., and Mitamura, T. (2010) Analogies, Explanation, and Practice: Examining how task types affect second language grammar learning. Tenth International Conference on Intelligent Tutoring Systems. Pittsburgh, Pennsylvania. June 14-18, 2010.

Wylie, R., Koedinger, K., and Mitamura, T. (2009) Is Self-Explanation Always Better? The Effects of Adding Self-Explanation Prompts to an English Grammar Tutor. Cognitive Science. Amsterdam, The Netherlands. July 29 – August 1, 2009.

Future Plans

- Data Analysis for Study 5

- Cross-study data analysis

- Robust Learning Analysis
  - Learning gains by Knowledge Component
  - Long-term Retention Measure
  - Transfer Measure (student writing samples)

- Paper on Efficiency Scores