Difference between revisions of "Plateau study"

(→References) |

(→Dependent variables) |

||

| Line 60: | Line 60: | ||

=== Dependent variables === | === Dependent variables === | ||

* [[Normal post-test]] | * [[Normal post-test]] | ||

| + | |||

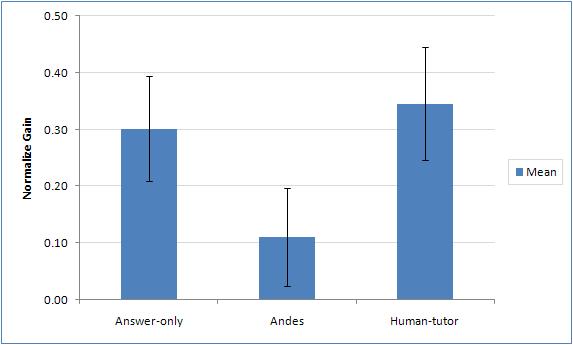

| + | At this point, we have only analyzed the results from the multiple choice test. To measure the change in students' qualitative understanding, we used the following measure: gain = (post - pre)/(100% - pre). | ||

| + | |||

** ''Qualitative Assessment'': | ** ''Qualitative Assessment'': | ||

| − | ** ''Quantiative Assessment'': | + | |

| + | [[Image:mc_normgain.JPG]] | ||

| + | |||

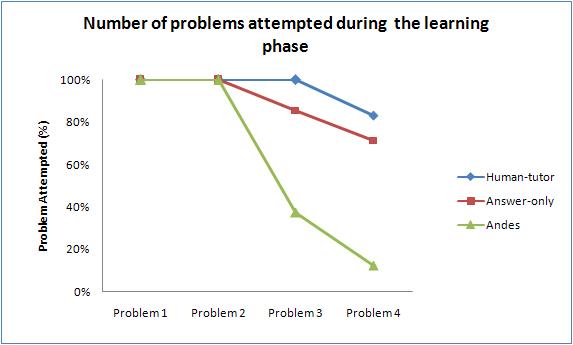

| + | Instead of a plateau, the results look more like an "interaction valley." We were curious why the pattern of results showed the Andes condition performing marginally worse than the Human tutoring condition. We hypothesized that the interface of the Andes interface takes more time to learn than the Answer-only version of Andes. To test this hypothesis, we looked at the number of students, in each condition, that attempted to solve each of the four problems during the learning phase of the experiment. The learning phase was when the experimental manipulation took place. Thus, we would expect that the Andes condition would have attempted fewer problems if they are taking longer to learn the interface. The figure below summarizes the percentage of students who attempted each problem. | ||

| + | |||

| + | [[Image:mc_attempts.JPG]] | ||

| + | |||

| + | It is extremely clear that the Andes condition was unable to solve the same number of problems as the other two conditions. | ||

| + | |||

| + | ** ''Quantiative Assessment'': | ||

=== Results === | === Results === | ||

Revision as of 18:37, 14 April 2008

The Interaction Plateau: A comparison between human tutoring, Andes, and computer-aided instruction

Robert G.M. Hausmann, Brett van de Sande, & Kurt VanLehn

Summary Table

| PIs | Robert G.M. Hausmann (Pitt), Brett van de Sande (Pitt), & Kurt VanLehn (Pitt) |
| Other Contributors | Tim Nokes (Pitt) |
| Study Start Date | Feb. 21, 2007 |
| Study End Date | March 07, 2008 |
| LearnLab Site | University of Pittsburgh (Pitt) |
| LearnLab Course | Physics |
| Number of Students | N = 21 |
| Total Participant Hours | 42 hrs. |
| DataShop | Anticipated: June 1, 2008 |

Abstract

This study will test the hypothesis that, as the degree of interaction increases, the learning gains first increase then level off. The empirical pattern is called the interaction plateau. We will test the interaction plateau by comparing human tutoring (high interaction) to the Andes intelligent tutoring system (medium interaction) to solving problems with feedback on correct answers (low interaction). We anticipate finding High = Medium > Low for learning gains from these 3 types of physics instruction.

Background and Significance

The goal of this research is to test the interaction plateau hypothesis by comparing human physics tutors to two other forms of physics instruction. The first type of instruction is coached problem solving, via an intelligent tutoring system for physics called Andes. Andes has been shown to produce large learning gains when compared to normal classroom instruction (VanLehn et al., 2005). The second type of instruction is computer-aided instruction (CAI), which has been shown to be only moderately effective. In the present study, the feedback and guidance provided by the full-blown version of Andes will be removed in the CAI condition so that the students are responsible for all of the problem-solving steps.

A literature review summarizes the background knowledge: VanLehn, K. (in prep.) The interaction plateau: Is highly interactive, one-on-one, natural language tutoring as effective as simpler forms of instruction?

Reif and Scott (1999) found an interaction plateau when they compared human tutoring, a computer tutor, and low-interaction problem solving. All students in their experiment were in the same physics class; the experiment varied only the way that the students did their homework. One group of 15 students did their physics homework problems individually in a six-person room where “two tutors were kept quite busy providing individual help” (ibid., p. 826). Another 15 students did their homework on a computer tutor that had them either solve a problem or study a solution. When solving a problem, students got immediate feedback and hints on each step. When studying a solution, they were shown steps and asked to determine which one(s) were incorrect. This forced them to derive the steps. Thus, this computer tutor counts as step-based instruction. The remaining 15 students merely did their homework as usual, relying on the textbook, their friends, and the course TAs for help. The human tutors and the computer tutor produced learning gains that were not reliably different from each other, and yet both were reliably larger than the gains from the low-interaction instruction provided by normal homework (d = 1.31 for human tutoring; d = 1.01 for step-based computer tutoring).
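The effect sizes quoted above (d = 1.31 and d = 1.01) are standardized mean differences. As a minimal sketch of how such a value can be computed, the Python function below implements Cohen's d with a pooled standard deviation. Whether Reif and Scott used exactly this pooled form is an assumption, not something stated here, and the function name `cohens_d` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference between two groups of scores.

    Assumption: the pooled-standard-deviation form of Cohen's d.
    The studies cited in the text may have used a different variant.
    """
    n1, n2 = len(treatment), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = (
        (n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)
    ) / (n1 + n2 - 2)
    if pooled_var == 0:
        raise ValueError("effect size is undefined when both groups have zero variance")
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)
```

Under this definition, d = 1.0 means that the treatment group's mean lies one pooled standard deviation above the comparison group's mean.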

In a series of experiments, VanLehn et al. (2007) taught students to reason out answers to conceptual physics questions such as: “As the Earth orbits the Sun, the Sun exerts a gravitational force on it. Does the Earth also exert a force on the Sun? Why or why not?” In all conditions of the experiment, students first studied a short textbook, then solved several training problems. For each problem, the students wrote a short essay-length answer, were tutored on its flaws, and then read a correct, well-written essay. Students were expected to apply a certain set of concepts in their essays; these comprised the correct steps. The treatments differed in how they tutored students when the essay lacked a step or had an incorrect step. There were four experimental treatments: (1) human tutors who communicated with students via a text-based interface; (2) Why2-Atlas and (3) Why2-AutoTutor, both of which were natural language computer tutors designed to approach human tutoring; and (4) a simple step-based computer tutor that “tutored” a missing or incorrect step by merely displaying text that explained what the correct step was. A control condition had students merely read passages from a textbook without answering conceptual questions. The first three treatments all count as natural tutoring, so according to the interaction plateau, they should all have the same learning gains as the simple step-based tutoring system. All four experimental conditions should score higher than the control condition, as it is classified as read-only studying of text. The four experimental conditions were not reliably different, and they all scored higher than the read-only studying condition by approximately d = 1.0. Thus, the results of experiments 1 and 2 support the interaction plateau.

In a series of experiments, Evens and Michael (2006) tutored medical students in cardiovascular physiology. All students were first taught the basics of the baroreceptor reflex, which controls human blood pressure. They were then given a training problem wherein an artificial pacemaker malfunctions and students must fill out a spreadsheet whose rows denoted physiological variables (e.g., heart rate, the blood volume per stroke of the heart, etc.) and whose columns denoted time periods. Each cell was filled with a +, - or 0 to indicate that the variable was increasing, decreasing or constant. Each such entry was a step. The authors first developed a step-based tutoring system, CIRCSIM, that presented a short text passage for each incorrectly entered step. They then developed a sophisticated natural language tutoring system, CIRCSIM-tutor, which replaced the text passages with human-like typed dialogue intended to remedy not just the step but the concepts behind the step as well. They also used a read-only studying condition with an experimenter-written text, and they included conditions with expert human tutors interacting in typed text with students. The treatments that count as natural tutoring (the expert human tutors and CIRCSIM-tutor) tied with each other and with the step-based computer tutor (CIRCSIM). The only conditions whose learning gains were significantly different were the read-only text studying treatments. This pattern is consistent with the interaction plateau.

There are two important reasons for conducting this study. The first is theoretical and the second is applied.

1. Theoretical: To our knowledge, no single study has directly compared human tutoring, an intelligent tutoring system, and computer-aided instruction.

2. Applied: Testing the interaction plateau hypothesis will help us better understand both how to construct educational software and how to train human tutors.

Glossary

Research question

How is robust learning affected by the amount of interaction the student has with the learning environment?

Independent variables

This study used one independent variable, Interactivity, with three levels. The first level offered the most restricted amount of interaction. The students interacted with the learning environment by typing their answers into a text box, which was embedded in an Answer-only version of Andes. The answer box gave correct/incorrect feedback on the answer and a limited range of hints (e.g., "You forgot the units on your answer."). The second level of interactivity was the full-blown version of Andes. Students received correct/incorrect feedback on each step, and they were free to request hints on each step. Finally, the highest level of interactivity was one-on-one human tutoring. Tutors were recruited from the Physics program at the University of Pittsburgh, and they were either graduate students or faculty.

Hypothesis

This experiment implements the following instructional principle: Interaction plateau

Dependent variables

At this point, we have only analyzed the results from the multiple choice test. To measure the change in students' qualitative understanding, we used the following measure: gain = (post - pre)/(100% - pre).
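As a minimal sketch, the gain measure above can be written as a small Python function. The function name `normalized_gain`, the `max_score` parameter, and the choice to return `None` for a perfect pretest are assumptions made for illustration; the text does not say how a pretest score of 100% was handled.

```python
def normalized_gain(pre, post, max_score=100.0):
    """Return (post - pre) / (max_score - pre).

    Scores are percentages by default, matching the formula in the text.
    Assumption: a student who already scored max_score on the pretest
    has no room to improve, so the gain is reported as None.
    """
    if not (0 <= pre <= max_score and 0 <= post <= max_score):
        raise ValueError("scores must lie between 0 and max_score")
    if pre == max_score:
        return None  # denominator would be zero
    return (post - pre) / (max_score - pre)
```

For example, a student who moves from 40% on the pretest to 70% on the post-test has closed half of the available gap, so the gain is 0.5. A student whose score drops receives a negative gain.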

- Qualitative Assessment:

[Figure: mc_normgain.JPG]

Instead of a plateau, the results look more like an "interaction valley." We were curious why the pattern of results showed the Andes condition performing marginally worse than the Human tutoring condition. We hypothesized that the full Andes interface takes more time to learn than the Answer-only version of Andes. To test this hypothesis, we looked at the number of students in each condition who attempted to solve each of the four problems during the learning phase of the experiment, which is when the experimental manipulation took place. If students in the Andes condition took longer to learn the interface, we would expect them to have attempted fewer problems. The figure below summarizes the percentage of students who attempted each problem.

[Figure: mc_attempts.JPG]

The figure shows clearly that students in the Andes condition did not get through as many problems as students in the other two conditions.
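The attempt analysis described above amounts to counting, for each condition, what share of students attempted each problem. A minimal Python sketch follows; the function name `attempt_percentages` and the record layout (one `(condition, attempted_problem_ids)` pair per student) are hypothetical, since the format of the study's logs is not described here.

```python
from collections import defaultdict


def attempt_percentages(records, problems):
    """Percentage of students per condition who attempted each problem.

    records:  iterable of (condition, set_of_attempted_problem_ids),
              one entry per student (hypothetical layout).
    problems: the problem ids to report on, e.g. [1, 2, 3, 4].
    """
    n_students = defaultdict(int)
    n_attempts = defaultdict(lambda: defaultdict(int))
    for condition, attempted in records:
        n_students[condition] += 1
        for problem in attempted:
            n_attempts[condition][problem] += 1
    # Convert raw counts to percentages of the students in each condition.
    return {
        condition: {p: 100.0 * n_attempts[condition][p] / n for p in problems}
        for condition, n in n_students.items()
    }
```

Called with one record per student and `problems=[1, 2, 3, 4]`, the function returns a nested dictionary that can be plotted directly as one bar group per problem.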

- Quantitative Assessment:

Results

Procedure

The participants were asked to complete a series of tasks. After granting informed consent, participants were administered a pretest, which consisted of 13 multiple-choice conceptual physics questions and two quantitative problems. Each quantitative problem was decomposed into three sub-problems. Participants were given a total of 20 minutes to complete the pretest.

After completing the pretest, participants were then given a short text to study. They were not given any instructions as to how to study the text. That is, the undergraduate student volunteers studied the short text using the same learning strategies that they use when studying their text for class, either to solve homework problems or take an exam. They were given 15 minutes to study the text.

Next, the experimental intervention took place. Students assigned to the Answer-only condition were given an "answer booklet" in which they were encouraged to write their solution to each problem. The problems were presented both as a problem statement in the Andes tutoring system and on paper. Students assigned to the Andes condition were given the exact same problems. The main difference between these conditions was the help and feedback that students received. The Answer-only condition received feedback only on the final numerical answer, in the form of red/green flag feedback. If the units were omitted, the system prompted the student to include the correct units. In contrast, the Andes condition received flag feedback on each problem-solving step and could request hints on each step.

Students assigned to the human tutoring condition were given the same problems to solve. The tutor and student worked face-to-face, using a dry-erase board to display their work. The interaction was audio- and video-taped for later analysis. The experimental intervention lasted 45 minutes.

After the problem-solving phase, all of the participants completed a post-test. The post-test consisted of the same 13 multiple-choice problems. However, the quantitative problems were not identical to those on the pretest. Instead, they drew upon the same concepts and principles, but the surface form of the problems was different. Participants were given 20 minutes to solve the post-test problems.
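The timed phases of the procedure can be tallied with a short Python sketch. The phase durations come from the description above, and the per-participant figure uses the numbers in the summary table (42 participant hours, N = 21). The names `PHASES`, `total_minutes`, and `hours_per_participant` are hypothetical.

```python
# Timed phases of one session, in minutes, as described in the procedure.
PHASES = [
    ("pretest", 20),
    ("study text", 15),
    ("experimental intervention", 45),
    ("post-test", 20),
]


def total_minutes(phases):
    """Sum the durations of the timed phases."""
    return sum(minutes for _, minutes in phases)


def hours_per_participant(total_hours, n_students):
    """Average session length implied by the summary table."""
    return total_hours / n_students
```

The timed phases add up to 100 minutes, while the summary table implies 42 / 21 = 2 hours per participant. The text does not itemize the remaining time.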

Explanation

Further Information

Annotated bibliography

References

- VanLehn, K. (in prep.) The interaction plateau: Is highly interactive, one-on-one, natural language tutoring as effective as simpler forms of instruction?

- Reif, F., & Scott, L. A. (1999). Teaching scientific thinking skills: Students and computers coaching each other. American Journal of Physics, 67(9), 819-831.

- VanLehn, K., Graesser, A. C., Jackson, G. T., Jordan, P., Olney, A., & Rose, C. P. (2007). When are tutorial dialogues more effective than reading? Cognitive Science, 31(1), 3-62.

- Evens, M., & Michael, J. (2006). One-on-one Tutoring By Humans and Machines. Mahwah, NJ: Erlbaum.