REAP Comparison to Classroom Instruction (Fall 2006)


[[ABSTRACT]]

THIS WIKI ENTRY IS UNDER CONSTRUCTION.

This paper focuses on the long-term retention and production of vocabulary in an intensive English program (IEP). The study compares the learning of words through a computer-assisted language learning (CALL) program with an 'ecological' control of standard classroom practice, and it seeks to elucidate the strengths of both approaches. The paper draws on the practical frameworks of Coxhead (2001) and Nation (2005) and the theoretical perspective of Laufer & Hulstijn (2001). The project collected data from two separate classes of ESL learners; hence, the study was run twice with different participants. The vocabulary instruction occurred in an intermediate-level reading class (intermediate = TOEFL 450 or iBT TOEFL 45; average MTELP score 58). In the CALL condition, all learners spent 40 minutes per week for 9 weeks reading texts containing words from the Academic Word List. In fall 2006, topic interest was manipulated in the CALL condition; in spring 2007, all of the students received texts in line with their interests. In the classroom condition, a subset of the learners' normal in-class vocabulary instruction was tracked. Pre-test, post-test, and delayed post-test data were collected for both the CALL and the in-class conditions. In addition, all of the students' writing assignments during this period were collected online. Each student's texts in this database of written output were analyzed to determine which words seen during computer training and regular reading class had transferred to spontaneous output in compositions for their writing class.

Results indicate that although CALL practice led to recognition one semester later, only the words practiced during regular reading-class vocabulary instruction transferred to spontaneous writing. We attribute this transfer effect to the deeper processing from spoken and written output that occurred during regular vocabulary instruction. The data also showed that production of words seen only in the CALL condition suffered from more word-recognition errors (‘clang’ associations) and morphological form errors (Schmitt & Meara, 1997). We conclude that some negative views on output practice (Folse, 2006; Mason & Krashen, 2004) must be modified to accommodate these data. As a result of this comparison, we are amending REAP to create more interactive production opportunities.

[[INTRODUCTION]]

In fall 2006 and spring 2007, we studied vocabulary learning with REAP [http://reap.cs.cmu.edu/] and with regular in-class vocabulary instruction. This research compared REAP with the vocabulary instruction that usually occurs in classrooms. In that sense, it is not a tightly controlled study, but it is ecologically valid because it reflects what actually happens in classes.

It is important to note that the REAP treatments in fall 2006 and spring 2007 were slightly different. In fall 2006, participants were introduced to personalization of the texts they read: some students received texts on topics that interested them, while others received random texts that contained their focus words. Thus, in fall 2006 all of the students had their focus words highlighted, but not all students always received texts that were of interest to them. Details are available on the personalization study page [http://learnlab.org/research/wiki/index.php/REAP_Study_on_Personalization_of_Readings_by_Topic_%28Fall_2006%29]. In spring 2007, all students were able to select their topics of interest, and their focus words were again highlighted. (For a comparison of highlighted versus non-highlighted words, see the Level 5 study [http://www.learnlab.org/research/wiki/index.php/REAP_Study_on_Focusing_of_Attention_%28Spring_2007%29].)

In the classroom condition, the Reading 4 curriculum supervisor chose a list of Academic Word List vocabulary [http://language.massey.ac.nz/staff/awl/] items that had been excluded from the tests the students took to establish their focus word lists. The list included 58 items that, in her view, the students should know.

[[BRIEF LITERATURE REVIEW]]

Recent research in vocabulary acquisition has suggested that time spent on written output practice may not be well spent (Folse, 2006). Rather than having learners use new words to create new meaningful texts during practice, Folse (2006) suggests that fill-in-the-blank exercises are more efficient. Moreover, Krashen and colleagues argue that form-focused vocabulary instruction is not efficient (Mason & Krashen, 2004).

In contrast, Hulstijn and Laufer (2001) (H&L) have proposed a model that emphasizes output practice, which they call the 'involvement load hypothesis' for vocabulary acquisition. The hypothesis holds that deeper processing leads to better learning. However, 'depth of processing' during learning has not been operationally defined. Hulstijn and Laufer (2001) propose a definition with three components, each assigned a processing load weight from 0 to 2. Although H&L do not fully flesh out their model, the weights for each component can be inferred. The first component is 'need', which they controversially label 'non-cognitive': no need is weighted 0, an externally imposed need 1, and a self-generated need 2. The second component is 'search': no search is weighted 0, information provided by the teacher or class 1, and looking a word up oneself in a dictionary or online 2. The third component is 'evaluation': a non-linguistic response such as choosing from a multiple-choice list (i.e., no output at all) is weighted 0, an activity such as fill-in-the-blank 1, and free production such as in-class writing 2.
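The scoring scheme just described amounts to a lookup followed by a sum. The Python sketch below encodes the weights as inferred above; the class and level names are our own illustrative labels rather than H&L's terminology, and the total is assumed to be a simple unweighted sum of the three components (range 0-6).

```python
from enum import IntEnum


# Component weights as inferred above (0 = absent, 1 = moderate, 2 = strong).
# The level names are hypothetical labels chosen for this sketch.
class Need(IntEnum):
    NONE = 0
    EXTERNALLY_IMPOSED = 1
    SELF_GENERATED = 2


class Search(IntEnum):
    NONE = 0
    TEACHER_OR_CLASS_PROVIDED = 1
    SELF_LOOKUP = 2  # dictionary or online look-up by the learner


class Evaluation(IntEnum):
    NON_LINGUISTIC = 0  # e.g. choosing from a multiple-choice list
    FILL_IN_THE_BLANK = 1
    FREE_PRODUCTION = 2  # e.g. in-class writing


def involvement_load(need: Need, search: Search, evaluation: Evaluation) -> int:
    """Total involvement load: the unweighted sum of the three component weights."""
    return int(need) + int(search) + int(evaluation)


# A self-selected word, looked up by the learner, then used in free writing.
print(involvement_load(Need.SELF_GENERATED, Search.SELF_LOOKUP, Evaluation.FREE_PRODUCTION))  # 6
```

Using `IntEnum` keeps each level readable while still allowing the weights to be added as integers.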

The problem with both Folse (2006) and H&L is that they selected a very limited number of words for in-class study (15 and 10, respectively). In that sense, they are 'bench laboratory studies' conducted in a classroom, and they lack the validity of a study in which students know they will be accountable for the learning outcomes. Moreover, both studies showed surprisingly low retention on the post-tests. For example, H&L reported average delayed post-test gains of only 1.6 or 1.7 out of 10 words for reading and fill-in-the-blank practice, and of only 2.6 or 3.7 out of 10 for production.

However, learners need to master many more than 10-15 words to comprehend and produce academic discourse effectively. Recent proposals suggest that learners need to know between 5,000 and 9,000 words to function in an academic environment in English (Nation, 2006, p. 60). What is needed is a much closer focus on how time can be used effectively to achieve a gain of 50-150 word families over time. This goal has been the focus of REAP. However, the time on task for each word in REAP is limited, and we have observed limited gains.

Learning a lexical entry involves more than a form-meaning correspondence; lexical items are highly complex [http://www.learnlab.org/research/papers.php]. While reading practice in REAP may be sufficient for learning form-meaning links in Levelt's model, the acquisition of syntax and morphology may require deeper processing. In PSLC terminology, robust learning [http://www.learnlab.org/research/wiki/index.php/Robust_learning] requires learning all of the knowledge components [http://www.learnlab.org/research/wiki/index.php/Knowledge_component] in the lexeme and the lemma.

[[RESEARCH QUESTIONS]]

1. How does REAP vocabulary learning differ from the 'ecological control' of normal in-class instruction?

2. How do the learning outcomes differ in the immediate number of words learned and in the number of words the learners transfer to other contexts?

3. If there are differences, what might their source be in terms of Hulstijn and Laufer's involvement load hypothesis?

4. Can 'deeper processing' through writing be ‘skipped’ by using a CALL program?

5. What can teachers and computer scientists learn from each other?

The two treatments, REAP and in-class instruction, are summarized in the following table. From the point of view of the involvement load hypothesis, the major differences are as follows. First, in REAP the need is created by the student through his or her self-generated word list, whereas in class the teacher decides on the words to be learned. Second, in terms of search, the student is responsible for looking up words in the computer program, whereas in class the teacher assigns group activities for discovering words. Finally, in terms of evaluation, REAP requires only a non-linguistic response to a multiple-choice question, whereas in-class activities require evaluation in which meaningful output is created.

The hypothesis, then, is that in-class learning gains will be far greater than those from REAP; the question is how much better the in-class activities will be. We estimate involvement load scores for REAP and the in-class activities in the methodology section.

[[METHODOLOGY]]

[[Participants]]


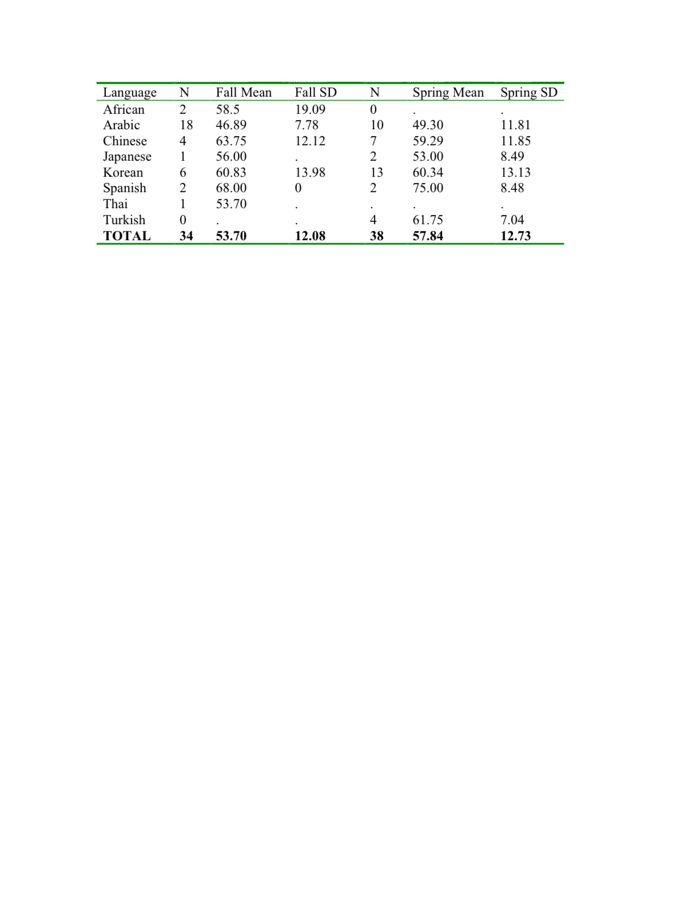

The participants in both semesters are typical of the heterogeneous first-language (L1) classes in Intensive English Programs in the United States. Recently, the English Language Institute (ELI) at the University of Pittsburgh has had larger numbers of Arabic-speaking and Korean-speaking learners. Overall, the Arabic-speaking students score lower on proficiency tests than the Korean speakers. Nevertheless, the Arabic speakers are placed in Level 4 classes because they have adequately passed Level 3.

[[Image:REAPMTELP0607.jpg|700px]]

The variation in MTELP scores is important; we return to this point in the discussion section.

[[Procedure]]

The table below summarizes the differences between REAP and the in-class treatment of vocabulary. The REAP method is described elsewhere.

For vocabulary practice in the classroom and as homework, more detail is needed. ELI teachers focus a great deal on form, meaning, and collocation through a variety of receptive and productive exercises.

1. For homework or in class, students are given a list of 7-9 “focus words”. They find each word in the reading and copy the sentence that contains it. They determine the word's part of speech in context and then find the corresponding definition in an ESL dictionary. Students follow the same procedure with 3-5 words of their own choice. Finally, students choose 3 words from their total list and write an original sentence with each one.

2. Teachers may use a variety of in-class exercises to practice the words, including games in pairs or groups, word-definition matching, and guided speaking activities requiring the focus words.

3. Vocabulary tests on the words from two readings are given as these readings are finished (one test every 1½-2 weeks). The tests consist of cloze items with a word bank and of questions that use the words. In the latter type of question, students must show in their answer that they understand the focus word, for example, by using a synonym.

Example: What skills are [[essential]] if you want to become a lawyer?

According to the in-class teachers, these activities took perhaps 1.25 hours of class time, in addition to homework. Hence, the in-class and homework activities represent more time on task than REAP. H&L accept this factor as inevitable in this kind of research, because production simply takes longer.

In terms of Hulstijn and Laufer's involvement load hypothesis, we can estimate the depth of processing of each treatment quantitatively, as follows.

{| border="1"
|-
| '''Condition''' || NEED || SEARCH || EVALUATION || TOTAL LOAD
|-
| '''REAP''' || 2 || 2 || 0 || 4
|-
| '''IN CLASS''' || 1 || 1 || 2 || 4
|}

Hence, both sets of activities have the same total involvement load. However, REAP's load is concentrated in need and search, whereas the in-class load is spread across need and search, plus a very strong evaluation component. Hulstijn and Laufer (2001) make no predictions about how the distribution of the load across components affects learning, but common sense suggests that involvement needs to be spread across the different components. For this reason, and based on H&L's results, we anticipate that learners will retain the in-class words much better.
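To make this point concrete, the Python sketch below reproduces the totals in the involvement load table and shows that an unweighted sum hides where the load falls. The dictionary layout and the `evaluation_share` measure are illustrative assumptions of ours, not part of H&L's model; the weights themselves are those in the table.

```python
# Component weights for each condition, taken from the involvement load table.
loads = {
    "REAP": {"need": 2, "search": 2, "evaluation": 0},
    "IN CLASS": {"need": 1, "search": 1, "evaluation": 2},
}


def total_load(components: dict) -> int:
    """Unweighted involvement load: the sum of the component weights."""
    return sum(components.values())


def evaluation_share(components: dict) -> float:
    """Fraction of the total load contributed by the evaluation (output) component.

    This is a hypothetical summary measure used only for illustration.
    """
    total = total_load(components)
    return components["evaluation"] / total if total else 0.0


for condition, components in loads.items():
    print(condition, total_load(components), evaluation_share(components))
```

Both conditions total 4, but none of REAP's load comes from evaluation, while half of the in-class load does; this distributional difference is what the discussion below turns on.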

[[RESULTS]]


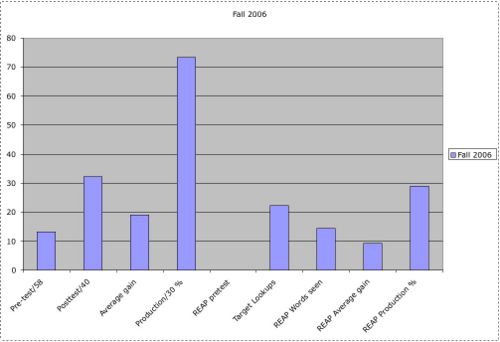

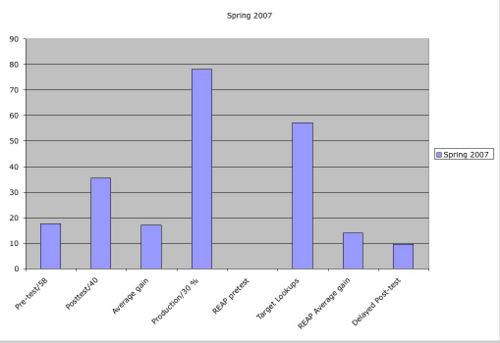

The descriptive results are provided below; we discuss the classroom results first. As the pretest scores show, the supervisor chose some words that the students already knew: in fall 2006, students knew an average of 13.17 of the 58 words, and in spring 2007 an average of 17.6. On the post-test, a fill-in-the-blank test of 40 words, they scored 32.17 and 35.57, respectively. Hence, students learned an average of roughly 18-19 words per semester in the classroom (32.17 - 13.17 = 19.00 in fall; 35.57 - 17.6 = 17.97 in spring). In the written production task at the end of term, students scored 73% and 78% on a test of 30 words.

{| border="1"
|-
| '''Term''' || Fall 2006 || Spring 2007
|-
| '''Results''' || [[Image:Fall2006.jpg|500px]] || [[Image:spring2007.jpg|500px]]
|}

For the REAP sessions, recall that in fall 2006 the students were divided into those who received texts of interest and those who did not. These students' pretest scores were, of course, 0. Target look-ups were relatively low, averaging only 22.27 per student over the semester. Even under experimental conditions that did not favour learning for some students, the average REAP gain on the cloze test was 9.24 words. However, the dramatic difference between in-class and REAP learning lies in the production test: in fall 2006, students scored only 29% on REAP words, compared with 73% on in-class words.

A fairer comparison is possible for spring 2007, when all the learners received texts that matched their interests. Notably, target look-ups in REAP increased to an average of 57 per student. In addition, the class composition was more balanced, with fewer Arabic-speaking students, who were known to have 'gamed' the system (Juffs et al., in review). In spring, the average REAP cloze gain increased from 9.24 to 14.00 words. This result is only about 4 words fewer than the in-class cloze gain of about 18 words. Bear in mind also that time on task was much higher for in-class and homework vocabulary learning. Given that difference in time on task, we can conclude that in spring REAP was at least as good as in-class work for recognition.
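The gain comparisons in the two paragraphs above are simple differences of group means. The Python sketch below recomputes them from the reported figures. It assumes, as the text's own subtraction does, that the in-class pretest (58 words) and post-test (40 words) scores can be compared directly; the names are illustrative only.

```python
# Mean cloze scores and gains (in words) as reported in the results text.
in_class = {
    "Fall 2006": {"pretest": 13.17, "posttest": 32.17},
    "Spring 2007": {"pretest": 17.60, "posttest": 35.57},
}
reap_gain = {"Fall 2006": 9.24, "Spring 2007": 14.00}  # REAP pretest scores were 0


def gain(pretest: float, posttest: float) -> float:
    """Mean gain as a simple difference of group means, rounded to two decimals.

    Assumes pretest and post-test counts are directly comparable, although the
    pretest covered 58 words and the post-test 40.
    """
    return round(posttest - pretest, 2)


for term in ("Fall 2006", "Spring 2007"):
    class_gain = gain(**in_class[term])
    gap = round(class_gain - reap_gain[term], 2)
    print(f"{term}: in-class {class_gain}, REAP {reap_gain[term]}, difference {gap}")

reap_change = round(reap_gain["Spring 2007"] - reap_gain["Fall 2006"], 2)
print(f"REAP gain rose by {reap_change} words from fall to spring")
```

On these figures the in-class advantage shrinks from about 9.8 words in fall to about 4 words in spring, while the REAP gain itself rises by 4.76 words.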

STILL NEED PRODUCTION DATA FOR SPRING 2007.

[[DISCUSSION]]

[[Robust learning measures]]

[[Learning]]

These results support our claim that processing load cannot be established on a simple numerical basis: recall that, according to Hulstijn and Laufer's weights, REAP and the in-class activities carry the same total involvement load. For robust learning of all the knowledge components of the lexeme/lemma, output production (evaluation) is necessary. Written output practice, combined with involvement in class, may produce more learning than CALL programs that provide exposure only, even if students are ‘internally motivated’ by self-selected lists and self-motivated in their search behaviors. Although evaluation (writing) is time-consuming, students need to use these words in order to master them.

This may seem like an obvious statement. However, given continued suggestions in the literature that fill-in-the-blank activities (Folse, 2006) and simply listening (Mason & Krashen, 2004) are 'more efficient' for lexical learning, we suggest that, for robust learning, evaluation in H&L's model is a vital part of mastering all of the knowledge components that make up the lexeme/lemma.

[[Transfer]] [http://www.learnlab.org/research/wiki/index.php/Transfer]

We also examined the transfer of words from REAP and in-class instruction in the online database of students' writing. In fall 2006, students produced 10 uses of 8 different focus words they had seen in REAP in free production in their writing classes. These words are listed below by BNC frequency band:

Focus words seen in REAP: 10 uses

BNC-1,000 [ fams 2 : types 2 : tokens 2 ] assume, produce

BNC-2,000 [ fams 1 : types 1 : tokens 1 ] distinction

BNC-3,000 [ fams 1 : types 1 : tokens 1 ] conceive

BNC-4,000 [ fams 1 : types 1 : tokens 1 ] abandon

BNC-5,000 [ fams 1 : types 1 : tokens 1 ] derive

BNC-6,000 [ fams 2 : types 2 : tokens 2 ] cite (x3), prohibit

In contrast, students used words from their in-class vocabulary list 840 times. Even allowing for the words they already knew (an average of 14 out of 58), this represents a large difference in transfer between the REAP and in-class treatments.
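The text does not specify how uses of focus words were identified in the writing database. Purely as an illustration, the Python sketch below counts occurrences of each focus word in a set of student texts. It assumes a hand-built list of accepted word forms per focus word; the function name, the form lists, and the two sample sentences are hypothetical and are neither the study's data nor its procedure.

```python
import re
from collections import Counter


def count_focus_word_uses(texts, focus_forms):
    """Count how often each focus word appears in a collection of student texts.

    focus_forms maps a focus word to the word forms accepted as uses of it
    (a hypothetical, hand-built list; no derivational analysis is attempted).
    """
    form_to_word = {
        form.lower(): word for word, forms in focus_forms.items() for form in forms
    }
    counts = Counter()
    for text in texts:
        # Crude tokenization: lowercase alphabetic runs only.
        for token in re.findall(r"[a-z]+", text.lower()):
            if token in form_to_word:
                counts[form_to_word[token]] += 1
    return counts


# Hypothetical sample texts, for illustration only.
essays = [
    "Many scholars cite this study. Others cited it too.",
    "The law prohibits smoking, and we assume it works.",
]
forms = {
    "cite": ["cite", "cites", "cited", "citing"],
    "prohibit": ["prohibit", "prohibits", "prohibited", "prohibiting"],
    "assume": ["assume", "assumes", "assumed", "assuming"],
}
uses = count_focus_word_uses(essays, forms)
print(uses)
print(sum(uses.values()))
```

A real analysis would also need to decide how to treat other members of a word family (for example, distinct alongside distinction) and to check matches by hand for unintended senses.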

A final note is in order regarding the MTELP. As with any set of learners in an IEP, learners in the same class may enter with different overall proficiency scores. This cannot be avoided, because almost all learners have had some exposure to English before arriving in the US. As Stanovich (1986) would predict, students who enter the class with higher proficiency also learn more, a phenomenon known as the Matthew effect. Correlations between MTELP scores and vocabulary test scores in fall 2006 are as follows:

MTELP predicts in-class scores:

Pretest: r = 0.52, p ≤ .01

Post-test: r = 0.59, p ≤ .01

Production: r = 0.58, p ≤ .01

MTELP predicts REAP scores:

Cloze: r = 0.53, p ≤ .01

Production: r = 0.59, p ≤ .01

MTELP scores were not related to the number of look-ups or to the number of texts the students read.

In spring 2007, MTELP scores again predicted performance, with the exception of the post-test. This finding is significant because here the Matthew effect is NOT seen: vocabulary gains during the class were not related to the students' overall proficiency. This result suggests that the classroom instruction was able to overcome this powerful effect.

MTELP predicts in-class scores:

Pretest: r = 0.63, p ≤ .05

Post-test: r = 0.36, p ≤ .06, n.s.

Production: r = 0.44, p ≤ .02

MTELP predicts REAP scores:

Cloze: r = 0.58, p ≤ .001
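The coefficients above are reported as r without the coefficient being named; assuming they are Pearson product-moment correlations, the Python sketch below shows how such a value is computed from paired MTELP and test scores, together with the t statistic on n - 2 degrees of freedom that is usually used to obtain the p value. The score lists are toy values invented for illustration and are not the study's data. In practice, `scipy.stats.pearsonr` (or `statistics.correlation` in Python 3.10+) would be used instead of hand-written code.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r, n):
    """t value for testing r against zero, with n - 2 degrees of freedom (|r| < 1)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Toy values for illustration only (not the study's data).
mtelp = [50, 55, 60, 65]
cloze = [10, 12, 14, 16]
print(pearson_r(mtelp, cloze))  # 1.0: a perfectly linear toy relationship
```

Converting t to a p value requires the t distribution's cumulative distribution function, which is why a statistics library is the sensible choice for real data.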

[[REFERENCES]]

Juffs, A., Friedline, B. F., Eskenazi, M., Wilson, L., & Heilman, M. (in review). Activity theory and computer-assisted learning of English vocabulary. Applied Linguistics.

Mason, B., & Krashen, S. (2004). Is form-focused vocabulary instruction worthwhile? RELC Journal, 35, 175-189.

Nation, I. S. P. (2006). How large a vocabulary is needed for reading and listening? Canadian Modern Language Review, 63, 59-82.

Paribakht, T. S., & Wesche, M. (1997). Vocabulary enhancement activities and reading for meaning in second language vocabulary acquisition. In J. Coady & T. Huckin (Eds.), Second language vocabulary acquisition (pp. 174-200). Cambridge: Cambridge University Press.

Stanovich, K. E. (1986). Matthew effects in reading: Some consequences of individual differences in the acquisition of literacy. Reading Research Quarterly, 21, 360-407.

Latest revision as of 20:30, 13 May 2008

Logistical Information

| Contributors | Alan Juffs, Lois Wilson, Maxine Eskenazi, Michael Heilman |

| Study Start Date | September 2006 |

| Study End Date | April 2007 |

| Learnlab Courses | English Language Institute Reading 4 (ESL LearnLab) |

| Number of Students | ~72 |

| Total Participant Hours (est.) | approximately 360 |

| Data in Datashop | no |

THIS WIKI ENTRY IS UNDER CONSTRUCTION.

This paper focuses on the long-term retention and production of vocabulary in an intensive English program (IEP). The study compares the learning of words through a computer assisted learning program with an 'ecological' control of standard classroom practice. The study seeks to elucidiate the strengths of both approaches. The paper draws on the practical framework of Coxhead (2001) and Nation (2005) and the theoretical perspectives of Laufer & Hulstijn (2001). The project collected data from two separate classes of ESL learners. Hence the study was run twice with different participants.The vocabulary instruction occurred in intermediate level reading class (intermediate =TOEFL 450 or iBT TOEFL 45, average MTELP score, 58). In the CALL condition, all learners spent 40 minutes per week for 9 weeks reading texts containing words from the Academic Word List. In fall 2006, topic interest was manipulated in the CALL condition. In spring 2007, all of the students received texts that were in line with their interests. In contrast, in classroom instruction, a subset of the learners' normal in-class vocabulary instruction was tracked. Pre-, post and delayed post-test data were collected for the CALL vocabulary learning and the in-class learning conditions. In addition, during this period, all of the students' writing assignments were collected on-line. From this database of written output, each student’s texts were analyzed to determine which words seen during computer training and regular reading class had transferred to their spontaneous output in compositions in their writing class. Results indicate that although the CALL practice led to recognition one semester later, only the words which were practiced during regular reading class vocabulary instruction transferred to their spontaneous writing. This transfer effect is attributed to the deeper processing from spoken and written output that occurred during the regular vocabulary instruction. 
The data also showed that the production of words seen in the CALL condition alone suffered from more errors in word recognition (‘clang’ associations) and morphological form errors (Schmitt & Meara, 1997). We conclude that these data suggest that some negative views on output practice by Folse (2006) and Mason and Krashen (2005) must be modified to accommodate these data. As a result of this comparision, we are amending REAP to create more interactive production opportunities.

In the fall of 2006 and the spring of 2007, vocabulary learning using REAP [1]and in regular class vocabulary instruction was studied. This research therefore focused on a comparison of REAP with the vocabulary instruction that usually occurs in classrooms. In that sense, it is not very tightly controlled study, but it is ecologically valid in the sense that the study reflects what actually happens in classes.

It is important to note that the REAP treatments in the fall of 2006 and spring 2007 were slightly different. In the fall of 2006, participants were introduced to the personalization of texts that they read. Some students received texts that they were interested by topic, while others received random texts that contained their focus words. In the fall of 2006, all of the students had their focus words highlighted, but not all students received texts that were of interest to them all the time. Details can be read here.[2] Students were all able to select their topics of interest in the spring of 2007 and also had their focus words highlighted. [See the study in level 5 for a comparison of highlighted versus non lighted words,[3] ]

In the classroom conditions, the Reading 4 curriculum supervisor decided on a list of Academic Word List vocabulary [4] items that had been excluded from the tests that the students took to establish their focus word lists. This list included 58 items that in her view the students should know.

Recent research in vocabulary acquisition has suggested that the time taken for written output practice may not be well spent (Folse, 2006). Instead of using new words to create new meaningful texts during practice, Folse (2006) has suggested that fill-in-the-blank type exercises are more efficient. Moreover, Krashen and colleagues suggest that form-focussed vocabulary instruction is not efficient (Mason & Krashen, 2004).

In contrast, Hulstijn and Laufer (2001) (H&L) have proposed a model that emphasizes output practice.They call this model the 'involvement load hypothesis' for vocabulary acquisition. The involvement load hypothesis suggests that deeper processing leads to better learning. However, definitions of 'depth of processing' during learning have not been made. Hulstijn and Laufer (2001) suggest a definition that contains three parts. Each part is assigned a processing load weight, from 0 to 2. Although H&L do not fully flesh out their model, one can infer the different processing weights for each component of processing. The first is 'need', which they controversially label 'non-cognitive'. A level of 'no need' would be '0'; an externally imposed need would be a weight of 1, and a self-generated need would be a '2'. The second component of processing is 'search'. No search would have a weight of '0', teacher or class provided information would be '1', and self-look up in a dictionary or on line would be '2'. Finally, there is the evaluation stage. A zero involvement would be a non-linguistic response such as choosing from a multiple choice list, i.e. no output at all. A level of '1' would be an activity such as 'fill-in-the-blank'. Finally, a level 2 load would be free production such as in class writing activities.

The problem with both Folse (2006) and H&L is that they selected for in class study a very limited number of words (15 and 10) respectively. In that sense, they are 'bench laboratory studies' conducted in a classroom and lack the validity of a study in which the students know they will be accountable for the learning outcomes. Moreover, the post-test results in both studies showed surprisingly low retention scores. For example, H&L showed that an average of delayed post-test gain for practice with reading and fill-in the blank of 1.6 or 1.7 out of 10, and production only 2.6 or 3.7 out of ten.

However, we know that learners need to master many more than 10-15 words to be able to effectively comprehend and produce academic discourse. Recent proposals have suggested that learners need to know between 5000 and 9000 words to function in an academic environment in English (Nation, 2006, p.60). What is needed is a much closer focus how time can be effectively used to make a 50-150 word (family) gain over time. This goal has been the focus in REAP. However, the time on task for each word in REAP is limited, and we have observed limited gains.

Learning a lexical entry is more than a form-meaning correspondence. Indeed, lexical items are highly complex.[[5]]. While reading practice in REAP may be sufficient for learning form-meaning links in Levelt's model, the acquisition of syntax and morphology may require deeper processing. In PSLC terminology, robust learning [6]requires learning all knowledge components [7] in the lexeme and lemma.

1. How does REAP vocabulary learning differ from the ecological control 'normal' in class instruction?

2. How do the learning outcomes differ in the immediate number of words learned, and in the number of words the learners transfer to other contexts?

3. If there are differences, what might the source of those differences be in terms of Hulstijn and Laufer's involvement load hypothesis.

4. Can 'deeper processing' through writing be ‘skipped’ by using a CALL program?

5. What can teachers and computer scientists learn from each other?

The two treatments in REAP and in class are summarized in the following table. The major differences from the point of view of the involvement load hypothesis are that in REAP the need is created by the student in his or her self-generated list, whereas in class the teacher decides on the words to be learned. Second, in terms of search, the student is responsible for looking up words in the computer program, whereas in class the teacher assigns group activities for discovery of words. Finally, in terms of 'evaluation', REAP only requires a non-linguistic response to a multiple choice question, whereas in class activities require evaluation where meaningful output is created.

The hypothesis then is that the in class learning gains will be far greater than for REAP. The question is how much better the in class activities will be. We explore the involvement load hypothesis scores for REAP and in class activities in the methodology section.

The participants in the two semesters are typical of the heterogeneous first language (L2) classes in Intensive English Programs in general in the United States. Recently, the ELI at the University of Pittsburgh has had larger numbers of Arabic-speaking and Korean speaking learners. Overall, the Arabic-speaking students score lower on overall proficiency tests than the Korean speakers. However, the Arabic speakers are placed in level 4 classes because they have passed level 3 adequately.

The variation in MTELP scores is important. This point will be raised in the discussion section.

The table below summarizes the differences between REAP and the in class treatment of vocabulary. The REAP method is described elsewhere.

The vocabulary practice in the classroom and as homework requires some more detail. The teachers in the ELI focus a great deal on form, meaning, and collocation through a variety of receptive and productive exercises.

1. For homework or in class, students are given a list of 7-9 “focus words”. They find each word in the reading and copy the sentence containing it. They determine the word's part of speech in context and then find the corresponding definition in an ESL dictionary. Students follow the same procedure with 3-5 words of their choice. Finally, students choose 3 words from their total list and write an original sentence with each.

2. Teachers may do a variety of vocabulary exercises to practice the words in class including games in pairs/groups, word-definition matching, guided speaking activities requiring the focus words, etc.

3. Vocabulary tests are given on words from two readings as these readings are finished (one test every 1½-2 weeks). Tests consist of a cloze section with a word bank and questions that use the focus words. In the latter type of question, students must show in their answer that they understand the focus word, for example, by using a synonym.

Ex: What skills are essential if you want to become a lawyer?

According to the in-class teachers, these activities took perhaps 1.25 hours of class time in addition to homework. Hence, in-class work and homework represent more time on task than REAP. H&L accept this difference as inevitable in this kind of research, because production simply takes longer.

In terms of Hulstijn and Laufer's involvement load hypothesis, we can estimate the depth of processing in each condition 'quantitatively'.

| Condition | NEED | SEARCH | EVALUATION | TOTAL LOAD |
| REAP | 2 | 2 | 0 | 4 |
| IN CLASS | 1 | 1 | 2 | 4 |

Hence, both sets of activities have the same involvement load. However, REAP's involvement load is concentrated in need and search, whereas the in-class activities spread it over need and search plus a very strong evaluation component. Hulstijn and Laufer (2001) make no predictions about where the involvement load will fall, but common sense dictates that involvement needs to be spread across the different factors. For this reason, and based on H&L's results, we anticipate that the learners in class will do much better in retaining their words.
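The involvement load total is a plain sum of the three component scores, which is why identical totals can hide very different distributions. A minimal Python sketch of that arithmetic, using the scores from the table; the dictionary layout and function names are illustrative and are not part of REAP.

```python
from typing import Dict

# Component scores from the involvement load table (need / search / evaluation).
LOADS: Dict[str, Dict[str, int]] = {
    "REAP": {"need": 2, "search": 2, "evaluation": 0},
    "IN CLASS": {"need": 1, "search": 1, "evaluation": 2},
}


def total_load(components: Dict[str, int]) -> int:
    """Total involvement load: the unweighted sum of the component scores."""
    return sum(components.values())


def share(components: Dict[str, int], name: str) -> float:
    """Fraction of the total load contributed by one component."""
    return components[name] / total_load(components)


for condition, comps in LOADS.items():
    shares = {k: round(share(comps, k), 2) for k in comps}
    print(condition, total_load(comps), shares)
```

Both conditions print a total of 4, but evaluation accounts for half of the in-class load and none of the REAP load, which is the asymmetry the argument turns on.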

The descriptive results are provided below, beginning with the classroom results. As can be observed, the supervisor chose some words that were already known to the students. On the pretest, students knew an average of 13.17 of 58 words in fall 2006 and an average of 17.6 of 58 words in spring 2007. On the post-test, a fill-in-the-blank test of 40 words, they scored 32.17 and 35.57 respectively. Hence, students learned an average of about 18 words in the classroom each semester. In the written production task at the end of term, students scored 73% and 78% on a test of 30 words.

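| Term | Fall 2006 | Spring 2007 |
| In-class pretest (out of 58 words) | 13.17 | 17.6 |
| In-class post-test (out of 40 words) | 32.17 | 35.57 |
| In-class production (30 words) | 73% | 78% |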
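The classroom gain estimate can be reproduced from the reported means. A minimal sketch, assuming (as the text does) that the gain is simply the post-test mean minus the pretest mean; note that the pretest covered 58 words and the post-test 40, so this is a rough estimate rather than a like-for-like comparison. Names are illustrative.

```python
# Mean in-class scores reported in the text.
IN_CLASS = {
    "Fall 2006": {"pretest": 13.17, "posttest": 32.17},
    "Spring 2007": {"pretest": 17.6, "posttest": 35.57},
}


def mean_gain(scores: dict) -> float:
    """Post-test mean minus pretest mean, rounded to two decimals.

    The two tests had different lengths (58 vs. 40 words), so the
    result only approximates the number of words learned.
    """
    return round(scores["posttest"] - scores["pretest"], 2)


for term, scores in IN_CLASS.items():
    print(term, mean_gain(scores))
```

This gives 19.0 words for fall 2006 and 17.97 for spring 2007, consistent with the figure of about 18 words per semester.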

For the REAP sessions, recall that in fall 2006 the students were divided into those who received texts matching their interests and those who did not. These students' pretest score was of course 0. Target look-ups were relatively low, averaging only 22.27 per student over the semester. The average REAP gain on the cloze test was 9.24 words, even under experimental conditions that did not favour learning for some students. However, the dramatic difference between the in-class and REAP conditions lies in the production test: in fall 2006, students scored only 29% on REAP words, compared with 73% on in-class words.

A fairer comparison is possible in spring 2007, when all the learners received texts that matched their interests. Of note here is that target look-ups in REAP increased to an average of 57 per student. In addition, the composition of the class was more balanced, with fewer Arabic-speaking students, who were known to have 'gamed' the system (Juffs et al., in review). In spring, the average REAP gain on the cloze test increased from 9.24 words to 14.00, only about 4 words fewer than the in-class cloze gain (17.97). Bear in mind also that time on task was much higher for in-class and homework vocabulary learning. For spring, therefore, we conclude that REAP was at least as good as in-class work for recognition.
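To make the semester-by-semester comparison explicit, the sketch below subtracts the REAP cloze gain from the in-class gain, with all values taken from the means reported in this section. It assumes, as the text does, that the in-class gain is post-test mean minus pretest mean despite the different test lengths (58 vs. 40 words); the variable and function names are illustrative.

```python
# Average cloze gains reported for REAP.
REAP_CLOZE_GAIN = {"Fall 2006": 9.24, "Spring 2007": 14.00}

# In-class gain estimated as post-test mean minus pretest mean.
IN_CLASS_GAIN = {
    "Fall 2006": 32.17 - 13.17,
    "Spring 2007": 35.57 - 17.6,
}


def shortfall(term: str) -> float:
    """How many fewer words REAP yielded than in-class work in a term."""
    return round(IN_CLASS_GAIN[term] - REAP_CLOZE_GAIN[term], 2)


for term in REAP_CLOZE_GAIN:
    print(term, shortfall(term))
```

The gap narrows from 9.76 words in fall 2006 to 3.97 words in spring 2007, when all learners read texts matching their interests.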

STILL NEED PRODUCTION DATA FOR SPRING 2007.

Robust learning measures.

These results support our claim that processing load cannot be established on a simple numerical basis. Recall that, according to Hulstijn and Laufer, output production (evaluation) is necessary for robust learning of all knowledge components of the lexeme/lemma. Written output practice, combined with involvement in class, may produce more learning than CALL programs that provide exposure only, even if students are ‘internally motivated’ by self-selected lists and self-motivated in their search behaviour. Although evaluation (writing) is time-consuming, students need to use these words in order to master them.

This may seem like an obvious statement. However, given continued suggestions in the literature that fill-in-the-blank activities (Folse, 2006) and simply listening (Mason & Krashen, 2004) are 'more efficient' for lexical learning, we suggest that for robust learning, evaluation in H&L's model is a vital part of mastering all of the knowledge components that make up the lexeme/lemma.

Transfer [8]

We also examined the transfer of words from REAP and in-class instruction in the online database of students' writing. In fall 2006, students produced 10 uses of words they had seen in REAP in free production in their writing classes. These words are listed below by BNC frequency band:

Focus words seen in REAP: 10 uses

| BNC band | Families | Types | Tokens | Words |
| BNC-1,000 | 2 | 2 | 2 | assume, produce |
| BNC-2,000 | 1 | 1 | 1 | distinction |
| BNC-3,000 | 1 | 1 | 1 | conceive |
| BNC-4,000 | 1 | 1 | 1 | abandon |
| BNC-5,000 | 1 | 1 | 1 | derive |
| BNC-6,000 | 2 | 2 | 2 | cite (x3), prohibit |

In contrast, students used words from their in-class vocabulary list 840 times. Even allowing for the words they already knew (an average of 14 out of 58), this represents a large difference in transfer between the REAP and in-class treatments.
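The REAP transfer list can be checked by tallying uses per word. A minimal sketch, assuming each listed word was used once except cite (x3): it recovers the 10 uses from 8 distinct words and gives the raw ratio to the 840 in-class uses. The ratio ignores differences in list size and prior knowledge, so it only indicates the scale of the gap; the names are illustrative.

```python
# Uses of REAP focus words in fall 2006 compositions, by BNC band.
# "cite" occurred three times; every other word once (assumption from "x3").
REAP_USES = {
    "BNC-1,000": {"assume": 1, "produce": 1},
    "BNC-2,000": {"distinction": 1},
    "BNC-3,000": {"conceive": 1},
    "BNC-4,000": {"abandon": 1},
    "BNC-5,000": {"derive": 1},
    "BNC-6,000": {"cite": 3, "prohibit": 1},
}

IN_CLASS_USES = 840  # reported uses of in-class list words


def total_uses(bands: dict) -> int:
    """All uses (tokens) across bands."""
    return sum(sum(words.values()) for words in bands.values())


def distinct_words(bands: dict) -> int:
    """Number of different REAP words that appeared at least once."""
    return sum(len(words) for words in bands.values())


total = total_uses(REAP_USES)
print(total, distinct_words(REAP_USES), IN_CLASS_USES / total)
```

The output is 10 uses of 8 distinct words, and the in-class words were used 84 times as often.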

A final note is in order regarding the MTELP. As with any group of learners in an IEP, learners in the same class may enter with different overall proficiency scores. This cannot be avoided, because almost all learners have had some exposure to English before arriving in the US. As Stanovich (1986) would predict, students who enter the class with higher proficiency also learn more, a pattern known as the Matthew effect. Correlations between MTELP scores and vocabulary test scores in fall 2006 are as follows:

| Fall 2006: MTELP predicts | r | p |
| In class: pretest | 0.52 | ≤ .01 |
| In class: post-test | 0.59 | ≤ .01 |
| In class: production | 0.58 | ≤ .01 |
| REAP: cloze | 0.53 | ≤ .01 |
| REAP: production | 0.59 | ≤ .01 |

The MTELP scores were not related to the number of look-ups or the number of texts that the students read.
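The section does not state how the correlations were computed. Assuming the usual Pearson product-moment coefficient, a minimal sketch follows; the function name and the r-squared printout are illustrative additions, and the function is checked only on toy data, not on the study's scores, which are not given here.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two paired samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Co-deviation and squared deviations around each mean.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a sample is constant")
    return sxy / math.sqrt(sxx * syy)


# Fall 2006 r values reported above; r squared is the proportion of
# variance in each score that is shared with MTELP.
REPORTED_R = {
    "in-class pretest": 0.52,
    "in-class post-test": 0.59,
    "in-class production": 0.58,
    "REAP cloze": 0.53,
    "REAP production": 0.59,
}

for measure, r in REPORTED_R.items():
    print(f"{measure}: r^2 = {r * r:.2f}")
```

Squaring the reported values suggests MTELP shares roughly 27-35% of the variance in each fall 2006 score. On Python 3.10 or later, `statistics.correlation(x, y)` returns the same coefficient.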

In spring 2007, MTELP scores again predict performance, with the exception of the post-test. This finding is important because here the Matthew effect is NOT seen: vocabulary gains during the class were not related to the students' overall proficiency. This result suggests that the classroom instruction was able to overcome this powerful effect.

| Spring 2007: MTELP predicts | r | p |
| In class: pretest | 0.63 | ≤ .05 |
| In class: post-test | 0.36 | ≤ .06 (ns) |
| In class: production | 0.44 | ≤ .02 |
| REAP: cloze | 0.58 | ≤ .001 |

Allum, P. (2002). CALL and the classroom: the case for comparative research. ReCALL, 14, 146-166.

Barcroft, J. (2004). Effects of sentence writing in second language lexical acquisition. Second Language Research, 20, 303-334.

Barcroft, J. (2006). Negative effects of forced output on vocabulary learning. Second Language Research, 22, 487-497.

Folse, K. S. (2006). The effect of type of written exercise on L2 vocabulary retention. TESOL Quarterly, 40, 273-293.

Hulstijn, J., & Laufer, B. (2001). Some empirical evidence for the involvement load hypothesis in vocabulary acquisition. Language Learning, 51, 539-558.

Juffs, A., Friedline, B. F., Eskenazi, M., Wilson, L., & Heilman, M. (in review). Activity theory and computer-assisted learning of English vocabulary. Applied Linguistics.

Mason, B., & Krashen, S. (2004). Is form-focused vocabulary instruction worthwhile? RELC Journal, 35, 175-189.

Nation, I. S. P. (2006). How large a vocabulary is needed for listening and reading? Canadian Modern Language Review, 63, 59-82.

Paribakht, T. S., & Wesche, M. (1997). Vocabulary enhancement activities and reading for meaning in second language vocabulary acquisition. In J. Coady & T. Huckin (Eds.), Second language vocabulary acquisition (pp. 174-200). Cambridge: Cambridge University Press.

Stanovich, K. E. (1986). Matthew effects in reading: Some consequences of individual differences in the acquisition of literacy. Reading Research Quarterly, 21, 360-407.