Learning Chinese pronunciation from a “talking head”

(→Findings) |

|||

| (6 intermediate revisions by one other user not shown) | |||

| Line 1: | Line 1: | ||

| − | + | ---- | |

| + | Summary Table | ||

| + | *Learning Chinese pronunciation from a “talking head” | ||

| + | *Researchers: Ying Liu, Dominic Massaro, Susan Dunlap, Suemei Wu, Trevor Chen, Derek Chan, Charles Perfetti | ||

| + | *PIs: Ying Liu, Dominic Massaro, Charles Perfetti, | ||

| + | *Others who have contributed 160 hours or more: | ||

| + | *Post-Docs: | ||

| + | *Graduate Students: Trevor Chen | ||

| + | *Study Start Date Sep 1, 2005 | ||

| + | *Study End Date Dec 31, 2006 | ||

| + | *LearnLab Site and Courses , CMU Chinese Online | ||

| + | *Number of Students: 20 | ||

| + | *Total Participant Hours for the study: 40 | ||

| + | *Data in the Data Shop: Yes | ||

| + | ---- | ||

| − | + | == Abstract == | |

| + | In this study, we compared the learning of Chinese pronunciation under three different online instruction methods: audio only, human “talking head”, and computer generated synthetic “talking head”. The learning took place through a web site developed specifically for students learning Chinese in the Chinese Learnlab[http://learnlab.org/learnlabs/chinese/]. Under both “talking head” conditions, the face of the speaker occupied 2/3 of the video screen. When student viewed the human “talking head”, major information came from the shape of the mouth and lip movement accompanied by audio sound. Whereas the synthetic “talking head” is transparent to reveal the internal articulators, which was accompanied by a slower than normal sound to match the “talking head” articulation. We predict [[multimedia sources]] can lead to [[robust learning]] when the [[cognitive load]] is within limit. | ||

| − | + | == Glossary == | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

Visual; audio; video | Visual; audio; video | ||

| − | + | == Research question == | |

Does visual input of a “talking head” enhance the learning of Chinese pronunciation? | Does visual input of a “talking head” enhance the learning of Chinese pronunciation? | ||

| − | + | == Background == | |

Multimedia technology has been used in second language learning for many years. The current available technology makes it possible to deliver not only text information, but also auditory and visual information through the Internet. It has been found that multiple-strategies and multiple modalities facilitate learning (Blum and Mitchell, 1998). For example, research in English showed that visual information on the vertical separation between the lips and the degree of lip spreading/rounding help the understanding of spoken language (Massaro and Cohen, 1990; Cohen and Massaro, 1994). So, does a visually presented “talking head” contains both auditory and visual information help Chinese character learning? Especially the robust learning of Chinese pronunciations which contain difficult consonants and tones? The method has not been tested by any well-designed experiment yet. However, based on a study in which we used a real person “talking head” to train true beginners on Chinese character, we believe it is a very effective learning method. Dr. Massaro’s research group is currently working on developing a animated 3D Chinese virtual speaker: Bao (Massaro, Ouni, Cohen, and Clark, In press). They found both the animated video (Baldi) and natural video were perceived better than voice only condition in a perceptual recognition experiment. The above two video conditions performed equally. We will do a comparison study between audio only, Bao and real person talking heads on our Chinese learners. | Multimedia technology has been used in second language learning for many years. The current available technology makes it possible to deliver not only text information, but also auditory and visual information through the Internet. It has been found that multiple-strategies and multiple modalities facilitate learning (Blum and Mitchell, 1998). 
For example, research in English showed that visual information on the vertical separation between the lips and the degree of lip spreading/rounding help the understanding of spoken language (Massaro and Cohen, 1990; Cohen and Massaro, 1994). So, does a visually presented “talking head” contains both auditory and visual information help Chinese character learning? Especially the robust learning of Chinese pronunciations which contain difficult consonants and tones? The method has not been tested by any well-designed experiment yet. However, based on a study in which we used a real person “talking head” to train true beginners on Chinese character, we believe it is a very effective learning method. Dr. Massaro’s research group is currently working on developing a animated 3D Chinese virtual speaker: Bao (Massaro, Ouni, Cohen, and Clark, In press). They found both the animated video (Baldi) and natural video were perceived better than voice only condition in a perceptual recognition experiment. The above two video conditions performed equally. We will do a comparison study between audio only, Bao and real person talking heads on our Chinese learners. | ||

| Line 23: | Line 32: | ||

| − | + | == Dependent variables == which are observable and typically measure competence, motivation, interaction, meta-learning, or some other pedagogically desirable outcome; | |

Accuracy of pronouncing Chinese syllables (initials and finals). | Accuracy of pronouncing Chinese syllables (initials and finals). | ||

| − | + | == Independent variables == | |

Three learning methods: audio only (control), human “talking head”, computer synthesized “talking head”. | Three learning methods: audio only (control), human “talking head”, computer synthesized “talking head”. | ||

Different Chinese syllables listed in Table 1. | Different Chinese syllables listed in Table 1. | ||

Table 1. The syllables are all tone-1 Mandarin words (pin-yin) except those with the tones indicated in parentheses. UC = unique consonants; NUC = Non-unique consonants; NUS = Non-unique syllables; US = unique syllables; UV = unique vowels | Table 1. The syllables are all tone-1 Mandarin words (pin-yin) except those with the tones indicated in parentheses. UC = unique consonants; NUC = Non-unique consonants; NUS = Non-unique syllables; US = unique syllables; UV = unique vowels | ||

| − | UC NUC NUS US UV | + | *UC NUC NUS US UV |

| − | ji Pi bao Ju Ge | + | *ji Pi bao Ju Ge |

| − | qie Nie dao qu He | + | *qie Nie dao qu He |

| − | xian Tian gao xu Ke | + | *xian Tian gao xu Ke |

| − | zhen Fen e(2) | + | *zhen Fen e(2) |

| − | chuan kuan U(3) | + | *chuan kuan U(3) |

| − | sha La | + | *sha La |

| − | + | == Hypothesis == | |

We predict that visual input can provide more robust learning of pronouncing Chinese sound when using appropriately. | We predict that visual input can provide more robust learning of pronouncing Chinese sound when using appropriately. | ||

| − | + | == Findings == | |

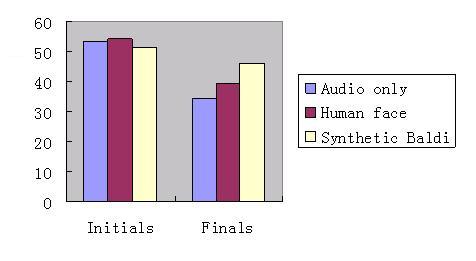

The analysis on finals showed significant condition effect (χ2 (2)=7.39, p=0.025). Further pairwise comparisons showed that synthetic Baldi is significantly better than audio only condition (χ2(1)=7.36, p=0.0067). Least square mean were listed in Table 2. | The analysis on finals showed significant condition effect (χ2 (2)=7.39, p=0.025). Further pairwise comparisons showed that synthetic Baldi is significantly better than audio only condition (χ2(1)=7.36, p=0.0067). Least square mean were listed in Table 2. | ||

| − | + | Figure. Least square mean percentages of improvement based on logistic model | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | [[Image:head1.jpg]] | |

| − | |||

| − | + | == Explanation == | |

It is difficult to learn to speak a language by just listening to it, especially for a second language learner at beginner’s level. Visual cues provide extra information for reach the goal of speaking “natively”. Imitation is best achieved by understanding how the organs produce the sound. Current findings support that Bao (Chinese Baldi) has significant advantage in teaching Chinese vowel pronunciation than audio alone. The human face falls between the above two methods, because it provides some useful facial information but the internal organs are not transparent. We conclude that visual speech provides significant benefit for learners to improve their pronunciation. | It is difficult to learn to speak a language by just listening to it, especially for a second language learner at beginner’s level. Visual cues provide extra information for reach the goal of speaking “natively”. Imitation is best achieved by understanding how the organs produce the sound. Current findings support that Bao (Chinese Baldi) has significant advantage in teaching Chinese vowel pronunciation than audio alone. The human face falls between the above two methods, because it provides some useful facial information but the internal organs are not transparent. We conclude that visual speech provides significant benefit for learners to improve their pronunciation. | ||

| − | As a node under coordinative learning cluster, coordination of visual and audio inputs is the cognitive process leads to more robust learning. | + | As a node under [[coordinative learning]] cluster, [[coordination]] of visual and audio inputs is the cognitive process leads to more [[robust learning]]. |

| − | |||

| − | |||

| + | == Descendents == | ||

None. | None. | ||

| − | + | == Further information == | |

| − | |||

Massaro, D. W., Liu, Y., Chen, T. H., & Perfetti, C. A. (2006). A Multilingual Embodied Conversational Agent for Tutoring Speech and Language Learning. Proceedings of the Ninth International Conference on Spoken Language Processing (Interspeech 2006 - ICSLP, September, Pittsburgh, PA), 825-828.Universität Bonn, Bonn, Germany. | Massaro, D. W., Liu, Y., Chen, T. H., & Perfetti, C. A. (2006). A Multilingual Embodied Conversational Agent for Tutoring Speech and Language Learning. Proceedings of the Ninth International Conference on Spoken Language Processing (Interspeech 2006 - ICSLP, September, Pittsburgh, PA), 825-828.Universität Bonn, Bonn, Germany. | ||

| − | |||

| − | |||

Latest revision as of 15:02, 12 March 2008

Summary Table

- Learning Chinese pronunciation from a “talking head”

- Researchers: Ying Liu, Dominic Massaro, Susan Dunlap, Suemei Wu, Trevor Chen, Derek Chan, Charles Perfetti

- PIs: Ying Liu, Dominic Massaro, Charles Perfetti

- Others who have contributed 160 hours or more:

- Post-Docs:

- Graduate Students: Trevor Chen

- Study Start Date: Sep 1, 2005

- Study End Date: Dec 31, 2006

- LearnLab Site and Courses: CMU Chinese Online

- Number of Students: 20

- Total Participant Hours for the study: 40

- Data in the Data Shop: Yes


Abstract

In this study, we compared the learning of Chinese pronunciation under three online instruction methods: audio only, a human “talking head”, and a computer-generated synthetic “talking head”. Learning took place on a website developed specifically for students learning Chinese in the Chinese LearnLab (http://learnlab.org/learnlabs/chinese/). In both “talking head” conditions, the speaker’s face occupied two-thirds of the video screen. When students viewed the human “talking head”, the main visual information came from the shape of the mouth and the movement of the lips, accompanied by the audio. The synthetic “talking head”, by contrast, was rendered transparent to reveal the internal articulators and was accompanied by slower-than-normal speech to match its articulation. We predict that multimedia sources can lead to robust learning when the cognitive load stays within limits.

Glossary

Visual; audio; video

Research question

Does visual input of a “talking head” enhance the learning of Chinese pronunciation?

Background

Multimedia technology has been used in second language learning for many years. Currently available technology makes it possible to deliver not only text but also auditory and visual information over the Internet. Multiple strategies and multiple modalities have been found to facilitate learning (Blum and Mitchell, 1998). For example, research in English showed that visual information about the vertical separation between the lips and the degree of lip spreading or rounding helps the understanding of spoken language (Massaro and Cohen, 1990; Cohen and Massaro, 1994).

Does a visually presented “talking head”, which carries both auditory and visual information, help Chinese character learning, and in particular the robust learning of Chinese pronunciations, which contain difficult consonants and tones? This method has not yet been tested in a well-designed experiment. However, based on a study in which we used a real-person “talking head” to train true beginners on Chinese characters, we believe it is a very effective learning method. Dr. Massaro’s research group is currently developing an animated 3D Chinese virtual speaker, Bao (Massaro, Ouni, Cohen, and Clark, in press). In a perceptual recognition experiment, they found that both the animated video (Baldi) and natural video were perceived better than a voice-only condition, and that the two video conditions performed equally well. We will compare audio only, Bao, and real-person talking heads with our Chinese learners.

Dependent variables

Accuracy of pronouncing Chinese syllables (initials and finals).

Independent variables

- Three learning methods: audio only (control), human “talking head”, and computer-synthesized “talking head”.
- Different Chinese syllables, listed in Table 1.

Table 1. Except where a tone is indicated in parentheses, all syllables are tone-1 Mandarin words (pinyin). UC = unique consonants; NUC = non-unique consonants; NUS = non-unique syllables; US = unique syllables; UV = unique vowels.

- UC: ji, qie, xian, zhen, chuan, sha

- NUC: pi, nie, tian, fen, kuan, la

- NUS: bao, dao, gao

- US: ju, qu, xu

- UV: ge, he, ke, e(2), u(3)

Hypothesis

We predict that, when used appropriately, visual input leads to more robust learning of Chinese pronunciation.

Findings

The analysis of finals showed a significant effect of condition (χ²(2) = 7.39, p = 0.025). Further pairwise comparisons showed that the synthetic talking head (Bao, the Chinese Baldi) was significantly better than the audio-only condition (χ²(1) = 7.36, p = 0.0067). Least-squares means are listed in Table 2.

Figure. Least square mean percentages of improvement based on logistic model
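As a quick consistency check on the reported statistics, the p-values can be recomputed from the chi-square values and their degrees of freedom. The page does not say which chi-square test the logistic model produced (likelihood-ratio or Wald), so this is only a sketch assuming a standard upper-tail chi-square p-value; the helper name `chi2_sf` is hypothetical. For 1 and 2 degrees of freedom the tail has a closed form, so only the Python standard library is needed.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable (df = 1 or 2 only).

    Hypothetical helper for illustration; assumes a standard chi-square test.
    """
    if df == 1:
        # A chi-square(1) variable is the square of a standard normal Z,
        # so P(Z^2 >= x) = P(|Z| >= sqrt(x)) = erfc(sqrt(x / 2)).
        return math.erfc(math.sqrt(x / 2))
    if df == 2:
        # A chi-square(2) variable is exponential with mean 2.
        return math.exp(-x / 2)
    raise ValueError("this sketch only handles df = 1 or df = 2")


# Statistics as reported in the Findings section.
overall = chi2_sf(7.39, 2)   # condition effect on finals
pairwise = chi2_sf(7.36, 1)  # synthetic talking head vs. audio only

print(f"chi2(2) = 7.39 -> p = {overall:.4f}")
print(f"chi2(1) = 7.36 -> p = {pairwise:.4f}")
```

Both recomputed values round to the reported p = 0.025 and p = 0.0067, so the statistics and p-values on this page are mutually consistent.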

Explanation

It is difficult to learn to speak a language just by listening to it, especially for a second language learner at the beginner level. Visual cues provide extra information that helps learners reach the goal of speaking “natively”. Imitation is best achieved by understanding how the articulatory organs produce the sound. The current findings show that Bao (the Chinese Baldi) has a significant advantage over audio alone in teaching the pronunciation of Chinese vowels. The human face falls between these two methods: it provides some useful facial information, but the internal organs are not visible. We conclude that visual speech provides a significant benefit for learners improving their pronunciation.

As a node under the coordinative learning cluster, this study proposes that coordinating visual and audio inputs is the cognitive process that leads to more robust learning.

Descendants

None.

Further information

Massaro, D. W., Liu, Y., Chen, T. H., & Perfetti, C. A. (2006). A Multilingual Embodied Conversational Agent for Tutoring Speech and Language Learning. Proceedings of the Ninth International Conference on Spoken Language Processing (Interspeech 2006 - ICSLP, September, Pittsburgh, PA), 825-828. Universität Bonn, Bonn, Germany.