Difference between revisions of "Stoichiometry Study"

(→Hypothesis) |

(→Overview) |

||

| (41 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

==Studying the Learning Effect of Personalization and Worked Examples in the Solving of Stoichiometry Problems== | ==Studying the Learning Effect of Personalization and Worked Examples in the Solving of Stoichiometry Problems== | ||

| − | + | Bruce M. McLaren, Ken Koedinger, and David Yaron | |

| + | |||

| + | ===Overview=== | ||

| + | |||

| + | PI: Bruce M. McLaren | ||

| + | |||

| + | Co-PIs: Ken Koedinger, David Yaron | ||

| + | |||

| + | Others who have contributed 160 hours or more: | ||

| + | |||

| + | * Sung-Joo Lim, Carnegie Mellon University, data analysis and programming | ||

| + | * John laPlante, Carnegie Mellon University, programming | ||

| + | * Jonathan Sewall, Carnegie Mellon University, programming | ||

| + | |||

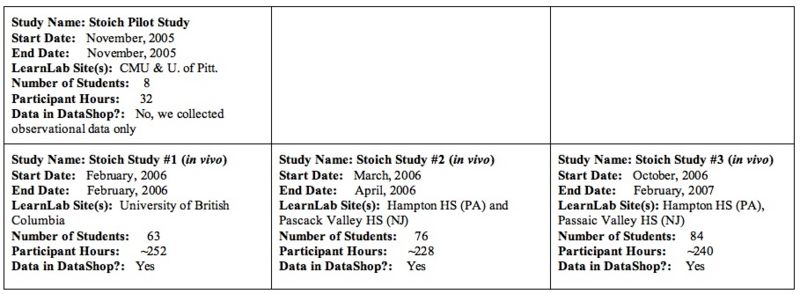

| + | [[Image:StudyTable-Studies123.jpg|800px]] | ||

===Abstract=== | ===Abstract=== | ||

| Line 26: | Line 40: | ||

Can worked examples lead to robust learning when used in conjunction with a highly supportive learning environment, in particular an intelligent tutoring system? | Can worked examples lead to robust learning when used in conjunction with a highly supportive learning environment, in particular an intelligent tutoring system? | ||

| + | |||

| + | Do worked examples lead to more efficient learning? That is, by studying worked examples, can students learn as much as with supported problem solving, but in less time? | ||

| + | |||

| + | ===Hypothesis=== | ||

| + | |||

| + | These research questions led us to the following three hypotheses: | ||

| + | |||

| + | ;H1 | ||

| + | :The use of personalized (or polite) problem statements, feedback, and hints in a supported problem-solving environment (i.e., an intelligent tutoring system) can improve learning in an e-Learning system. | ||

| + | |||

| + | ;H2 | ||

| + | :The use of [[Worked Examples]] in a supported problem-solving environment (i.e., an intelligent tutoring system) can improve learning in an e-Learning system. | ||

| + | |||

| + | ;H3 | ||

| + | :The use of [[Worked Examples]] in a supported problem-solving environment can result in more efficient learning (i.e., learning as much as with supported problem solving only, but in less time). | ||

===Background and Significance=== | ===Background and Significance=== | ||

| Line 41: | Line 70: | ||

===Independent Variables=== | ===Independent Variables=== | ||

| − | To test our hypotheses and the effect of personalization | + | To test our hypotheses and the effect of personalization and worked examples on learning, we designed and have executed two 2 x 2 factorial studies. |

| − | *One independent variable is [[Personalization]] | + | *One independent variable is [[Personalization]], with one level impersonal instruction, feedback, and hints and the other personal instruction, feedback, and hints. |

*The other independent variable is [[Worked Examples]], with one level tutored problem solving alone and the other tutored problem solving together with worked examples. In the former condition, subjects only solve problems using the intelligent tutor; no worked examples are presented. In the latter condition, subjects alternate between observation and self-explanation of a worked example and solving of a tutored problem. This alternating technique has yielded better learning results in prior research (Trafton and Reiser, 1993). | *The other independent variable is [[Worked Examples]], with one level tutored problem solving alone and the other tutored problem solving together with worked examples. In the former condition, subjects only solve problems using the intelligent tutor; no worked examples are presented. In the latter condition, subjects alternate between observation and self-explanation of a worked example and solving of a tutored problem. This alternating technique has yielded better learning results in prior research (Trafton and Reiser, 1993). | ||

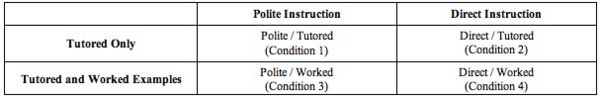

| − | The 2 x 2 factorial design for our third and most recent study is shown below. | + | With respect to personalized language (and because we got a null result in the first two studies), we thought that perhaps our conceptualization and implementation might not be as socially engaging as we had hoped. This was also suggested to us by Rich Mayer, who reviewed the first study. In a recent study that Mayer and colleagues did (Wang, Johnson, Mayer, Rizzo, Shaw, & Collins, in press), based on the work of Brown and Levinson (1987), they found that a polite version of a tutor, which provided polite feedback such as, “You could press the ENTER key”, led to significantly better learning than a direct version of the tutor that used more imperative feedback such as, “Press the ENTER key.” We decided to investigate this in a third in vivo study in which we changed all of the personalized instruction, feedback, and hints of the tutor to more polite forms, similar to that used by Mayer and colleagues. |

| + | |||

| + | Thus, for the third study we changed the first independent variable to "Politness," with one level polite instruction, feedback, and hints and the other direct instruction, feedback, and hints. The 2 x 2 factorial design for our third and most recent study is shown below. | ||

| − | [[Image:Fig2-FactorialDesign.jpg|600px|center]] | + | [[Image:Fig2-FactorialDesign.jpg|600px|center]] |

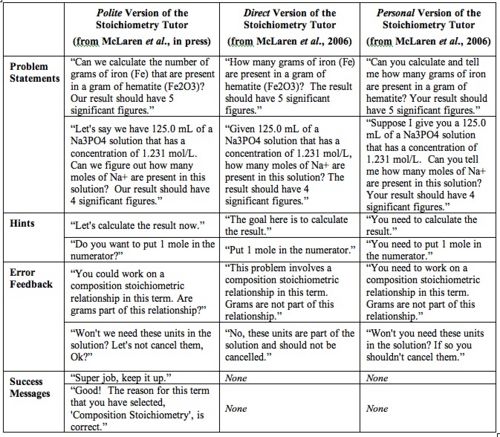

| − | Below is a table that provides examples of the differences in | + | Below is a table that provides examples of the differences in language between the polite version of our tutor and earlier versions. |

[[image:Table1-FeedbackDiffs.jpg|500px|center]] | [[image:Table1-FeedbackDiffs.jpg|500px|center]] | ||

| Line 57: | Line 88: | ||

To evaluate learning, students are asked to solve pre and post-test stoichiometry problems that are isomorphic to one another and to the tutored problems. Thus, we have focused on [[normal post-test]]s in our studies so far. When (and if) we see an effect in a normal post-test, we will conduct studies to test [[retention]]. | To evaluate learning, students are asked to solve pre and post-test stoichiometry problems that are isomorphic to one another and to the tutored problems. Thus, we have focused on [[normal post-test]]s in our studies so far. When (and if) we see an effect in a normal post-test, we will conduct studies to test [[retention]]. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

===Findings=== | ===Findings=== | ||

| − | In two initial 2 x 2 factorial studies, we found that personalized language and worked examples had no significant effects on learning. On the other hand, there was a significant difference between the pre and posttest in all conditions, suggesting that the intelligent tutor present in all conditions did make a difference in learning. For study 1 we had N = 63 and for study 2 we had N = 76. The | + | In two initial 2 x 2 factorial studies, we found that personalized language and worked examples had no significant effects on learning, thus not supporting hypotheses H1 and H2. On the other hand, there was a significant difference between the pre and posttest in all conditions, suggesting that the intelligent tutor present in all conditions did make a difference in learning. For study 1 we had N = 63 and for study 2 we had N = 76. The results of Study 1 are reported in (McLaren, Lim, Gagnon, Yaron, and Koedinger, 2006). We are currently analyzing the data to test hypothesis H3, that is, to determine if learning with worked examples was more efficient. |

One possible explanation for why neither personalized language nor worked examples have made a difference thus far is the switch from a lab environment to in vivo experimentation. Most of the results from past studies of both personalized language and worked examples come from lab studies, so it may simply be that the realism and messiness of an in vivo study makes it much more difficult for interventions such as these to make a difference to students’ learning. It may also be that the tutoring received by the subjects simply had much more effect on learning than the worked examples or personalized language. | One possible explanation for why neither personalized language nor worked examples have made a difference thus far is the switch from a lab environment to in vivo experimentation. Most of the results from past studies of both personalized language and worked examples come from lab studies, so it may simply be that the realism and messiness of an in vivo study makes it much more difficult for interventions such as these to make a difference to students’ learning. It may also be that the tutoring received by the subjects simply had much more effect on learning than the worked examples or personalized language. | ||

| − | + | We recently concluded the third study in which we investigated the use of polite language, rather than personalized language (as shown in the table above). We have so far only analyzed the first 33 subjects, out of N=84 (for details on the analysis of the first 33 subjects see McLaren, Lim, Yaron, & Yaron, in press). The preliminary data indicates that the polite condition leads to larger learning gains than the non-polite condition, however, not at a statistically significant level. Worked examples also did not make a difference to learning. Thus, once again, hypotheses H1 and H2 were not supported. We are in the process of analyzing the rest of the data from the remaining subjects who participated in this study, including an investigation of hypothesis H3 and the efficiency of learning. | |

| − | |||

| − | |||

===Explanation=== | ===Explanation=== | ||

| − | This study is part of the [[Coordinative Learning]] cluster. The study follows the Coordinative Learning hypothesis that two (or more) [[sources]] of instructional information can lead to improved robust learning. In particular, the study tests whether an ITS and personalized | + | This study is part of the [[Coordinative Learning]] cluster. The study follows the Coordinative Learning hypothesis that two (or more) [[sources]] of instructional information can lead to improved robust learning. In particular, the study tests whether an ITS and personalized (or polite) language used together lead to more robust learning and whether an ITS and worked examples used together lead to more robust learning. |

=== Connections to Other PSLC Studies=== | === Connections to Other PSLC Studies=== | ||

| − | * The key finding of our studies so far has been that learning has not improved when students use an intelligent tutor in conjunction with | + | * The key finding of our studies so far has been that learning has not improved when students use an intelligent tutor in conjunction with other instructional techniques. Two other studies in the [[Coordinative Learning]] cluster, the [[Visual-Verbal Learning (Aleven & Butcher Project)|Aleven/Butcher]] and [[Booth|Booth/Siegler/Koedinger/Rittle-Johnson]] projects, and one study in the [[Interactive Communication]] cluster, the [[Does learning from worked-out examples improve tutored problem solving?|Renkl/Aleven/Salden project]], are also investigating the [[complementary]] effects of intelligent tutoring combined with another instructional technique. |

| − | * Our project differs from the [[Booth|Booth study]] in that we are using only correct examples as a means to strengthen correct [[knowledge components]]. We are not using incorrect examples to weaken incorrect [[knowledge components]], as Booth is in her study. | + | * Our project differs from the [[Booth|Booth study]] in that we are using only correct examples as a means to strengthen correct [[knowledge components]]. We are not using incorrect examples to weaken incorrect [[knowledge components]], as Booth is testing in her study. |

| − | * Our project also relates to the [[Visual-Verbal Learning (Aleven & Butcher Project)|Aleven and Butcher project]] in that we | + | * Our project also relates to the [[Visual-Verbal Learning (Aleven & Butcher Project)|Aleven and Butcher project]] in that we both are exploring the learning value of [[e-Learning Principles]] (they are investigating Contiguity; we are looking at personalization and worked examples). In addition, like their study, we are prompting [[self-explanation]] as a means to promote robust learning. |

===Annotated Bibliography=== | ===Annotated Bibliography=== | ||

| + | |||

| + | *McLaren, B. M., Lim, S., Yaron, D., and Koedinger, K. R. (2007). Can a Polite Intelligent Tutoring System Lead to Improved Learning Outside of the Lab? In the Proceedings of the 13th International Conference on Artificial Intelligence in Education (AIED-07), pp 331-338. [[http://www.learnlab.org/research/wiki/images/5/5a/AIED-07-PoliteTutoring.pdf pdf file]] | ||

| + | *McLaren, B. M., Lim, S., Gagnon, F., Yaron, D., and Koedinger, K. R. (2006). Studying the Effects of Personalized Language and Worked Examples in the Context of a Web-Based Intelligent Tutor; In the Proceedings of the 8th International Conference on Intelligent Tutoring Systems (ITS-2006), pp. 318-328. [[http://www.learnlab.org/research/wiki/images/5/58/ChemStudy1-ITS2006.pdf pdf file]] | ||

| + | *McLaren, B. M. Presentation to the NSF Site Visitors, June, 2006. | ||

| + | |||

| + | ===References=== | ||

*Aleven, V. & Ashley, K. D. (1997). Teaching Case-Based Argumentation Through a Model and Examples: Empirical Evaluation of an Intelligent Learning Environment, Proceedings of AIED-97, 87-94. | *Aleven, V. & Ashley, K. D. (1997). Teaching Case-Based Argumentation Through a Model and Examples: Empirical Evaluation of an Intelligent Learning Environment, Proceedings of AIED-97, 87-94. | ||

| Line 106: | Line 127: | ||

*Mathan, S. (2003). Recasting the Feedback Debate: Benefits of Tutoring Error Detection and Correction Skills. Ph.D. Dissertation, Carnegie Mellon Univ., Pitts., PA. | *Mathan, S. (2003). Recasting the Feedback Debate: Benefits of Tutoring Error Detection and Correction Skills. Ph.D. Dissertation, Carnegie Mellon Univ., Pitts., PA. | ||

*Mayer, R. E., Johnson, W. L., Shaw, E. and Sandhu, S. (2006). Constructing Computer-Based Tutors that are Socially Sensitive: Politeness in Educational Software, International Journal of Human-Computer Studies 64 (2006) 36-42. | *Mayer, R. E., Johnson, W. L., Shaw, E. and Sandhu, S. (2006). Constructing Computer-Based Tutors that are Socially Sensitive: Politeness in Educational Software, International Journal of Human-Computer Studies 64 (2006) 36-42. | ||

| − | |||

| − | |||

| − | |||

*Moreno, R. and Mayer, R. E. (2000). Engaging students in active learning: The case for personalized multimedia messages. Journal of Ed. Psych., 93, 724-733. | *Moreno, R. and Mayer, R. E. (2000). Engaging students in active learning: The case for personalized multimedia messages. Journal of Ed. Psych., 93, 724-733. | ||

*Paas, F. G. W. C. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive load approach. Journal of Ed. Psych., 84, 429-434. | *Paas, F. G. W. C. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive load approach. Journal of Ed. Psych., 84, 429-434. | ||

Latest revision as of 14:49, 17 January 2008

Studying the Learning Effect of Personalization and Worked Examples in the Solving of Stoichiometry Problems

Bruce M. McLaren, Ken Koedinger, and David Yaron

Overview

PI: Bruce M. McLaren

Co-PIs: Ken Koedinger, David Yaron

Others who have contributed 160 hours or more:

- Sung-Joo Lim, Carnegie Mellon University, data analysis and programming

- John laPlante, Carnegie Mellon University, programming

- Jonathan Sewall, Carnegie Mellon University, programming

Abstract

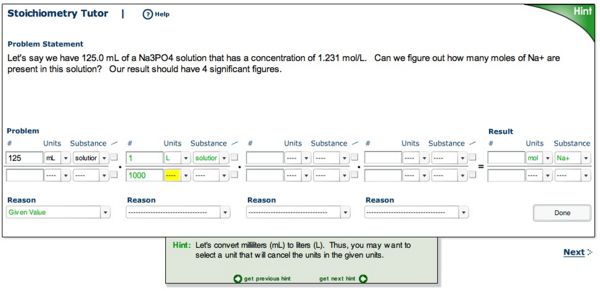

We have been investigating whether personalized (or polite) instructional materials and worked examples can improve learning when used as techniques complementary to an intelligent tutoring system. The study involves online (i.e., web-based) learning of stoichiometry, the basic math required to solve elementary chemistry problems, and uses intelligent tutoring systems developed with the aid of the Cognitive Tutor Authoring Tools (CTAT), a key enabling technology of the PSLC. A screen shot of the tutor we have been using in the studies is shown below. The stoichiometry materials were piloted at CMU and the University of Pittsburgh and have now been used in three separate in vivo studies with students at a university and three high schools.

In a recent book by Clark and Mayer (2003), a number of E-Learning Principles were proposed as guidelines for building e-Learning systems. All are supported by multiple educational psychology and cognitive science studies. We were especially interested in and decided to experiment with two of the Clark and Mayer principles:

- Personalization Principle One: Use Conversational Rather than Formal Style (i.e., first and second person pronouns, informal language)

- Worked Examples Principle One: Replace Some Practice Problems with Worked Examples

In contrast with most previous studies, however, we wished to test these principles in the context of an intelligent tutoring system (ITS), rather than on their own in a standard e-Learning or ITS environment or, as in even earlier studies, in conjunction with problems solved by hand. The key difference is that an intelligent tutoring system provides more than just problem solving practice; it also supplies students with context-specific hints and feedback on their progress.

Glossary

Research Questions

Can personalized (or polite) hints, feedback, and messages lead to robust learning when used in conjunction with a highly supportive learning environment, in particular an intelligent tutoring system?

Can worked examples lead to robust learning when used in conjunction with a highly supportive learning environment, in particular an intelligent tutoring system?

Do worked examples lead to more efficient learning? That is, by studying worked examples, can students learn as much as with supported problem solving, but in less time?

Hypothesis

These research questions led us to the following three hypotheses:

- H1: The use of personalized (or polite) problem statements, feedback, and hints in a supported problem-solving environment (i.e., an intelligent tutoring system) can improve learning in an e-Learning system.

- H2: The use of Worked Examples in a supported problem-solving environment (i.e., an intelligent tutoring system) can improve learning in an e-Learning system.

- H3: The use of Worked Examples in a supported problem-solving environment can result in more efficient learning (i.e., learning as much as with supported problem solving only, but in less time).

Background and Significance

The Clark and Mayer personalization principle proposes that informal speech or text (i.e., conversational style) is more supportive of learning than formal speech or text in an e-Learning environment. In other words, instructions, hints, and feedback should employ first or second-person language (e.g., “You might want to try this”) and should be presented informally (e.g., “Hello there, welcome to the Stoichiometry Tutor! …”) rather than in a more formal tone (e.g., “Problems such as these are solved in the following manner”).

Although the personalization principle runs counter to the intuition that information should be “efficiently delivered” and provided in a business-like manner to a learner, it is consistent with cognitive theories of learning. For instance, educational research has demonstrated that people put forth a greater effort to understand information when they feel they are in a dialogue (Beck, McKeown, Sandora, Kucan, and Worthy, 1996). While consumers of e-Learning content certainly know they are interacting with a computer, and not a human, personalized language helps to create a “dialogue” effect with the computer. E-Learning research in support of the personalization principle is somewhat limited but at least one project has shown positive effects (Moreno and Mayer, 2000). Students who learned from personalized text in a botany e-Learning system performed better on subsequent transfer tasks than students who learned from more formal text in five out of five studies. Note that this project did not explore the use of personalization in a web-based intelligent tutoring setting, as we are doing in our work.

The Clark and Mayer worked example principle proposes that an e-Learning course should present learners with some step-by-step solutions to problems (i.e., worked examples) rather than having them try to solve all problems on their own. Interestingly, this principle also runs counter to many people’s intuition and even to research that stresses the importance of “learning by doing” (Kolb, 1984).

The theory behind worked examples is that solving problems can overload limited working memory, while studying worked examples does not and, in fact, can help build new knowledge (Sweller, 1994). The empirical evidence in support of worked examples is more established and long standing than that of personalization. For instance, in a study of geometry by Paas (1992), students who studied 8 worked examples and solved 4 problems worked for less time and scored higher on a posttest than students who solved all 12 problems. In a study in the domain of probability calculation, Renkl (1997) found that students who employed more principle-based self-explanations benefited more from worked examples than those who did not. Research has also shown that mixing worked examples and problem solving is beneficial to learning. In a study on LISP programming (Trafton and Reiser, 1993), it was shown that alternating between worked examples and problem solving was more beneficial to learners than observing a group of worked examples followed by solving a group of problems.

Previous ITS research has investigated how worked examples can be used to help students as they problem solve (Gott, Lesgold, and Kane, 1996; Aleven and Ashley, 1997). Conati and VanLehn’s SE-Coach (2000) demonstrated that an ITS can help students self-explain worked examples. However, none of this prior work explicitly studied how worked examples, presented separately from supported problem solving as complementary learning devices, might provide added value to learning with an ITS and avoid cognitive load (Sweller, 1994). Closest to our approach is that of Mathan and Koedinger (2002). They experimented with two different versions of an Excel ITS, one that employed an expert model and one that used an intelligent novice model, complemented by two different types of worked examples: “active” example walkthroughs (examples in which students complete some of the work) and “passive” examples (examples that are just watched). The “active” example walkthroughs led to better learning, but only for the students who used the expert model ITS. However, a follow-up study did not replicate these results (Mathan, 2003). This work, as with the other ITS research mentioned above, was not done in the context of a web-based ITS.

Independent Variables

To test our hypotheses and the effect of personalization and worked examples on learning, we designed and have executed two 2 x 2 factorial studies.

- One independent variable is Personalization, with one level impersonal instruction, feedback, and hints and the other personal instruction, feedback, and hints.

- The other independent variable is Worked Examples, with one level tutored problem solving alone and the other tutored problem solving together with worked examples. In the former condition, subjects only solve problems using the intelligent tutor; no worked examples are presented. In the latter condition, subjects alternate between observation and self-explanation of a worked example and solving of a tutored problem. This alternating technique has yielded better learning results in prior research (Trafton and Reiser, 1993).
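The two factors above cross to give four conditions. The following is a minimal sketch of how such a 2 x 2 design could be enumerated and students distributed across its cells. It assumes simple balanced random assignment, which the study description does not specify; the level labels and the `assign` helper are hypothetical and are not taken from the study's software.

```python
import itertools
import random

# Factor levels as described in the text; the label strings are illustrative.
PERSONALIZATION = ("impersonal", "personal")
WORKED_EXAMPLES = ("problem solving only", "problem solving + worked examples")


def conditions():
    """Return the four cells of the 2 x 2 factorial design."""
    return list(itertools.product(PERSONALIZATION, WORKED_EXAMPLES))


def assign(student_ids, seed=0):
    """Shuffle students, then deal them round-robin into the four cells.

    Assumption: balanced random assignment. The source does not say how
    students were actually assigned to conditions.
    """
    rng = random.Random(seed)
    ids = list(student_ids)
    rng.shuffle(ids)
    cells = conditions()
    return {sid: cells[i % len(cells)] for i, sid in enumerate(ids)}
```

Calling `assign(range(8))` places two students in each cell; for the third study, the first factor's levels would be relabeled as direct and polite.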

With respect to personalized language (and because we got a null result in the first two studies), we thought that perhaps our conceptualization and implementation might not be as socially engaging as we had hoped. This was also suggested to us by Rich Mayer, who reviewed the first study. In a recent study that Mayer and colleagues did (Wang, Johnson, Mayer, Rizzo, Shaw, & Collins, in press), based on the work of Brown and Levinson (1987), they found that a polite version of a tutor, which provided polite feedback such as, “You could press the ENTER key”, led to significantly better learning than a direct version of the tutor that used more imperative feedback such as, “Press the ENTER key.” We decided to investigate this in a third in vivo study in which we changed all of the personalized instruction, feedback, and hints of the tutor to more polite forms, similar to those used by Mayer and colleagues.

Thus, for the third study we changed the first independent variable to "Politeness," with one level polite instruction, feedback, and hints and the other direct instruction, feedback, and hints. The 2 x 2 factorial design for our third and most recent study is shown below.

Below is a table that provides examples of the differences in language between the polite version of our tutor and earlier versions.

Dependent Variables

To evaluate learning, students are asked to solve pre- and post-test stoichiometry problems that are isomorphic to one another and to the tutored problems. Thus, we have focused on normal post-tests in our studies so far. When (and if) we see an effect in a normal post-test, we will conduct studies to test retention.
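As a rough illustration of how pre- and post-test scores could be turned into the quantities that the hypotheses refer to, the sketch below computes a raw learning gain and a simple efficiency figure (gain per minute of instruction). Both definitions are assumptions made for illustration; the study description does not state which gain or efficiency measure was actually used, and the function names are hypothetical.

```python
def learning_gain(pre, post):
    """Raw gain: post-test score minus pre-test score (same scale for both)."""
    return post - pre


def efficiency(pre, post, minutes):
    """Gain per minute of instruction time.

    Assumption: one possible operationalization of "learning as much in
    less time" (H3), not necessarily the measure used in the study.
    """
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return learning_gain(pre, post) / minutes
```

Under this definition, two students with the same gain differ in efficiency only through the time they spent, which is the comparison H3 is concerned with.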

Findings

In two initial 2 x 2 factorial studies, we found that personalized language and worked examples had no significant effects on learning, thus not supporting hypotheses H1 and H2. On the other hand, there was a significant difference between the pretest and posttest in all conditions, suggesting that the intelligent tutor present in all conditions did make a difference in learning. For study 1 we had N = 63 and for study 2 we had N = 76. The results of Study 1 are reported in McLaren, Lim, Gagnon, Yaron, and Koedinger (2006). We are currently analyzing the data to test hypothesis H3, that is, to determine if learning with worked examples was more efficient.

One possible explanation for why neither personalized language nor worked examples have made a difference thus far is the switch from a lab environment to in vivo experimentation. Most of the results from past studies of both personalized language and worked examples come from lab studies, so it may simply be that the realism and messiness of an in vivo study make it much more difficult for interventions such as these to make a difference to students’ learning. It may also be that the tutoring received by the subjects simply had much more effect on learning than the worked examples or personalized language.

We recently concluded the third study, in which we investigated the use of polite language rather than personalized language (as shown in the table above). We have so far only analyzed the first 33 subjects, out of N = 84 (for details on the analysis of the first 33 subjects see McLaren, Lim, Yaron, & Koedinger, 2007). The preliminary data indicate that the polite condition leads to larger learning gains than the non-polite condition, although not at a statistically significant level. Worked examples also did not make a difference to learning. Thus, once again, hypotheses H1 and H2 were not supported. We are in the process of analyzing the data from the remaining subjects who participated in this study, including an investigation of hypothesis H3 and the efficiency of learning.
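For readers unfamiliar with how main effects are read off a 2 x 2 design, the sketch below computes the difference in marginal means for each factor, plus the interaction contrast, from four cell means. It is only a descriptive illustration under the assumption of equal cell sizes; it performs no significance test, it is not the analysis reported above, and the `main_effects` function and its 0/1 level coding are hypothetical.

```python
def main_effects(cell_means):
    """Compute descriptive effects from a 2 x 2 table of mean learning gains.

    cell_means maps (a, b) -> mean gain, where a and b are 0 or 1:
      a = 1 for the personalized (or polite) level of the first factor,
      b = 1 for the worked-examples level of the second factor.
    Assumes equal cell sizes, so marginal means are plain averages.
    """
    m = cell_means
    factor_a = (m[(1, 0)] + m[(1, 1)]) / 2 - (m[(0, 0)] + m[(0, 1)]) / 2
    factor_b = (m[(0, 1)] + m[(1, 1)]) / 2 - (m[(0, 0)] + m[(1, 0)]) / 2
    # Interaction: does the effect of b differ across the levels of a?
    interaction = (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])
    return {"factor_a": factor_a, "factor_b": factor_b, "interaction": interaction}
```

A null result of the kind described in this section corresponds to both marginal differences being small relative to the variability within cells, which a full analysis would assess with an inferential test such as a two-way ANOVA.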

Explanation

This study is part of the Coordinative Learning cluster. The study follows the Coordinative Learning hypothesis that two (or more) sources of instructional information can lead to improved robust learning. In particular, the study tests whether an ITS and personalized (or polite) language used together lead to more robust learning and whether an ITS and worked examples used together lead to more robust learning.

Connections to Other PSLC Studies

- The key finding of our studies so far has been that learning has not improved when students use an intelligent tutor in conjunction with other instructional techniques. Two other studies in the Coordinative Learning cluster, the Aleven/Butcher and Booth/Siegler/Koedinger/Rittle-Johnson projects, and one study in the Interactive Communication cluster, the Renkl/Aleven/Salden project, are also investigating the complementary effects of intelligent tutoring combined with another instructional technique.

- Our project differs from the Booth study in that we are using only correct examples as a means to strengthen correct knowledge components. We are not using incorrect examples to weaken incorrect knowledge components, as Booth is testing in her study.

- Our project also relates to the Aleven and Butcher project in that we both are exploring the learning value of e-Learning Principles (they are investigating Contiguity; we are looking at personalization and worked examples). In addition, like their study, we are prompting self-explanation as a means to promote robust learning.

Annotated Bibliography

- McLaren, B. M., Lim, S., Yaron, D., and Koedinger, K. R. (2007). Can a Polite Intelligent Tutoring System Lead to Improved Learning Outside of the Lab? In the Proceedings of the 13th International Conference on Artificial Intelligence in Education (AIED-07), pp. 331-338. [pdf file: http://www.learnlab.org/research/wiki/images/5/5a/AIED-07-PoliteTutoring.pdf]

- McLaren, B. M., Lim, S., Gagnon, F., Yaron, D., and Koedinger, K. R. (2006). Studying the Effects of Personalized Language and Worked Examples in the Context of a Web-Based Intelligent Tutor. In the Proceedings of the 8th International Conference on Intelligent Tutoring Systems (ITS-2006), pp. 318-328. [pdf file: http://www.learnlab.org/research/wiki/images/5/58/ChemStudy1-ITS2006.pdf]

- McLaren, B. M. Presentation to the NSF Site Visitors, June, 2006.

References

- Aleven, V. & Ashley, K. D. (1997). Teaching Case-Based Argumentation Through a Model and Examples: Empirical Evaluation of an Intelligent Learning Environment, Proceedings of AIED-97, 87-94.

- Beck, I., McKeown, M. G., Sandora, C., Kucan, L., and Worthy, J. (1996). Questioning the author: A year long classroom implementation to engage students in text. Elementary School Journal, 96, 385-414.

- Brown, P. and Levinson, S. C. (1987). Politeness: Some Universals in Language Use. Cambridge University Press, New York.

- Clark, R. C. and Mayer, R. E. (2003). e-Learning and the Science of Instruction. Jossey-Bass/Pfeiffer.

- Conati, C. and VanLehn, K. (2000). Toward Computer-Based Support of Meta-Cognitive Skills: a Computational Framework to Coach Self-Explanation. Int’l Journal of Artificial Intelligence in Education, 11, 398-415.

- Gott, S. P., Lesgold, A., & Kane, R. S. (1996). Tutoring for Transfer of Technical Competence. In B. G. Wilson (Ed.), Constructivist Learning Environments, 33-48, Englewood Cliffs, NJ: Educational Technology Publications.

- Kolb, D. A. (1984). Experiential Learning - Experience as the Source of Learning and Development, Prentice-Hall, New Jersey.

- Mathan, S. and Koedinger, K. R. (2002). An Empirical Assessment of Comprehension Fostering Features in an Intelligent Tutoring System. Proceedings of ITS-2002. Lecture Notes in Computer Science, Vol. 2363, 330-343. Berlin: Springer-Verlag.

- Mathan, S. (2003). Recasting the Feedback Debate: Benefits of Tutoring Error Detection and Correction Skills. Ph.D. Dissertation, Carnegie Mellon Univ., Pitts., PA.

- Mayer, R. E., Johnson, W. L., Shaw, E. and Sandhu, S. (2006). Constructing Computer-Based Tutors that are Socially Sensitive: Politeness in Educational Software. International Journal of Human-Computer Studies, 64, 36-42.

- Moreno, R. and Mayer, R. E. (2000). Engaging students in active learning: The case for personalized multimedia messages. Journal of Ed. Psych., 92, 724-733.

- Paas, F. G. W. C. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive load approach. Journal of Ed. Psych., 84, 429-434.

- Renkl, A. (1997). Learning from Worked-Out Examples: A Study on Individual Differences. Cognitive Science, 21, 1-29.

- Sweller, J. (1994). Cognitive load theory, learning difficulty and instructional design. Learning and Instruction, 4, 295-312.

- Trafton, J. G. and Reiser, B. J. (1993). The contributions of studying examples and solving problems to skill acquisition. In M. Polson (Ed.) Proceedings of the 15th annual conference of the Cognitive Science Society, 1017-1022.

- Wang, N., Johnson, W. L., Mayer, R. E., Rizzo, P., Shaw, E., & Collins, H. (in press). The Politeness Effect: Pedagogical Agents and Learning Outcomes. To be published in the International Journal of Human-Computer Studies.