Difference between revisions of "In vivo experiment"


Latest revision as of 12:43, 8 September 2011

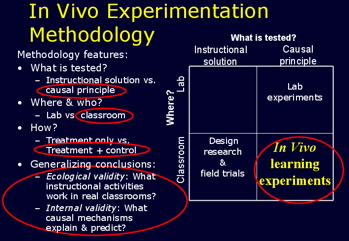

An in vivo experiment is a principle-testing experiment run in the context of an academic course. It is a laboratory-style multi-condition experiment conducted in the natural setting of student course work including the classroom, computer lab, study hall, dorm room, home, etc. The conditions in an in vivo experiment manipulate a small but crucial, well-defined instructional variable, as opposed to a whole curriculum or educational policy. How in vivo experimentation is related to other methodologies in the learning and educational sciences is illustrated in the following figure:

In vivo experimentation is different from other methodologies in the learning and educational sciences including 1) design-based research, which does not have control conditions, 2) randomized field trials, which do not test a principle, but a policy or curriculum, and 3) lab experiments, which are not run in a natural course setting. Of course, all of these methodologies have strengths and should be applied at the right time for the right purpose.

The motivation for the contrast with laboratory experimentation was well articulated by Russ Whitehurst in an [APS Observer article (March, 2006)](http://www.psychologicalscience.org/observer/getArticle.cfm?id=1935): "In contrast to learning in laboratory settings, learning in classrooms typically involves content of greater complexity and scope, delivered and tested over much longer periods of time, with much greater variability in delivery, and with far more distraction and competition for student time and effort. Before principles of learning from cognitive science can be applied to classroom instruction, we need to understand if the principles generalize beyond well controlled laboratory settings to the complex cognitive and social conditions of the classroom."

An in vivo experiment can be implemented either in a within classroom design (i.e., students are randomly assigned to conditions, regardless of the class they belong to) or in a between classroom design (i.e., classes are randomly assigned to conditions).
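
The two designs differ only in the unit that is randomized. The following is a minimal sketch of that difference, not a description of how any PSLC study actually assigned participants. The roster format, the function names and the two condition labels are assumptions made for illustration.

```python
import random

# Hypothetical condition labels; the text assumes two conditions for simplicity.
CONDITIONS = ("control", "experimental")


def assign_within_classroom(roster, rng):
    """Randomize individual students, regardless of the class they belong to.

    roster maps a class name to a list of student IDs (hypothetical format).
    Returns a dict mapping each student ID to a condition.
    """
    students = [s for members in roster.values() for s in members]
    rng.shuffle(students)
    # Alternate through the shuffled list so the condition sizes stay balanced.
    return {s: CONDITIONS[i % len(CONDITIONS)] for i, s in enumerate(students)}


def assign_between_classroom(roster, rng):
    """Randomize whole classes; every student inherits the class's condition."""
    classes = list(roster)
    rng.shuffle(classes)
    class_condition = {c: CONDITIONS[i % len(CONDITIONS)] for i, c in enumerate(classes)}
    return {s: class_condition[c] for c, members in roster.items() for s in members}


# Hypothetical roster: four classes of three students each.
EXAMPLE_ROSTER = {
    "class_A": ["a1", "a2", "a3"],
    "class_B": ["b1", "b2", "b3"],
    "class_C": ["c1", "c2", "c3"],
    "class_D": ["d1", "d2", "d3"],
}
```

Passing a seeded `random.Random` instance as `rng` makes an assignment reproducible. In the between classroom version, classmates always share a condition, which is the defining property of that design.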

In typical in vivo experiments within PSLC, educational technology (like an intelligent tutoring system or an on-line course) is used not only to provide part of the instruction, but also to monitor students' activities and progress through the year. The conditions in an in vivo experiment may be implemented within an advanced educational technology or outside of it. We strive to have the control condition be exactly what students would do normally in the course. We call this an ecological control group. Sometimes, however, the control activity is somewhat different from normal activity so as to be more like the treatment (i.e., just one change away). Here are a couple of examples (to make this easier to follow, assume that there are just two conditions in the experiment, called the experimental and control conditions):

- Technology-based in vivo experiment with an ecological control: Here the educational technology is modified to implement the experimental manipulation (by changing just one aspect of the technology). Students assigned to the experimental condition do their work on the modified system. Students assigned to the control condition use the unmodified system, which is what they would normally do (see ecological control group). Post-practice reflection (Katz) is an example of this approach.

- Activity-based in vivo experiment without an ecological control: For a limited time (e.g., a one-hour classroom period or a two-hour lab period), the control students do one activity and the experimental students do another (which, again, has a single principled difference from the control activity). In the Hausmann Study, for instance, students in both conditions watched videos of problems being solved by their instructor, but in the experimental condition students were prompted to self-explain steps of solutions whereas they were not in the control condition. While this manipulation did not change the advanced technology regularly used in this course (the Andes Physics tutor), the technology was in use and provided data on student performance. While the control in the Hausmann Study was much like normal practice in that it was the same content and instructor, it was not a strict ecological control in that students did not watch instructor videos in normal instruction.

Regardless of the experimental method used in an in vivo experiment, the educational technologies involved record log data that are used to evaluate the effects of the manipulation.
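
As a rough illustration of how logged outcomes might be compared across conditions, the sketch below groups scores by condition and computes a standardized mean difference. The record layout, the scores and the choice to divide by the control group's standard deviation are all assumptions. The text above does not specify a log format or an analysis method.

```python
from statistics import mean, stdev

# Hypothetical log-derived records: (student_id, condition, post_test_score).
LOG_RECORDS = [
    ("s01", "control", 60.0),
    ("s02", "control", 70.0),
    ("s03", "control", 80.0),
    ("s04", "experimental", 75.0),
    ("s05", "experimental", 85.0),
    ("s06", "experimental", 95.0),
]


def scores_by_condition(records):
    """Group scores by condition name."""
    grouped = {}
    for _student, condition, score in records:
        grouped.setdefault(condition, []).append(score)
    return grouped


def standardized_mean_difference(treatment, control):
    """Difference in means divided by the control group's sample SD.

    This is one common effect-size convention, assumed here for illustration.
    """
    return (mean(treatment) - mean(control)) / stdev(control)


grouped = scores_by_condition(LOG_RECORDS)
effect = standardized_mean_difference(grouped["experimental"], grouped["control"])
```

With these made-up scores the control mean is 70, the experimental mean is 85 and the control standard deviation is 10, so `effect` is 1.5.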

In vivo experiments are not new; see, for instance, Aleven & Koedinger (2002). Going back further, there have been many classroom studies that have had important features of in vivo experiments. For example, below are summaries of two dissertations that were the basis of the famous Bloom (1984) 2-sigma paper. Together, these dissertations involved 6 experiments. They are borderline in vivo experiments because mastery learning and, particularly, tutoring are not "small well-defined instructional variables". Instead, these treatments varied a number of different instructional methods at one time. Nevertheless, they illustrate a number of other important features of in vivo experimentation.

Burke’s (1983) experiments measured immediate learning, far transfer and long-term retention. This dissertation involved three classroom experiments comparing conventional instruction, mastery learning and 3-on-1 human tutoring. E1 taught 4th graders probability; E2 taught 5th graders probability; E3 taught 5th graders probability at a different site. Instruction was a 3-week module. Tutors were undergraduate education students trained for a week to ask good questions and give good feedback (pg. 85). The mastery learning students had to achieve 80% to go on; the tutoring students had to achieve 90%. The conventional instruction students got no feedback from the mastery tests. On immediate post-testing, for lower mental processes (like a normal post-test; Table 5, pg. 98), tutoring effect sizes averaged 1.66 (1.53, 1.34 and 2.11 for E1, E2 and E3, respectively) and mastery learning effect sizes averaged 0.85 (0.73, 0.78 and 1.04 for E1, E2 and E3). For higher mental processes (like far transfer; Table 6, pg. 104), tutoring effect sizes averaged 2.11 (1.58, 2.65 and 2.11) and mastery learning effect sizes averaged 1.19 (0.90, 1.47 and 1.21). For long-term retention, measured 3 weeks later in E3, the effect size for lower mental processes was 1.71 for tutoring vs. 1.01 for mastery learning. For higher mental processes, the effect size was 1.99 for tutoring vs. 1.13 for mastery learning. The researchers also measured time on task (the tutoring condition had a higher percentage) and affect rates.

The Anania (1981) thesis involved three classroom experiments, each lasting 3 weeks, comparing conventional teaching, mastery learning and tutoring. The tutors were undergraduate education students with no training in tutoring. E1 taught probability to 4th graders; E2 taught probability to 5th graders; E3 taught cartography to 8th graders. E1 and E2 used 3-on-1 tutoring; E3 used 1-on-1 tutoring. The experiments measured immediate learning (post-test), time on task and affect. For gains (Table 3, pg. 72), effect sizes for tutoring averaged 1.93 (1.77, 2.06 and 1.95 for E1, E2 and E3) and effect sizes for mastery learning averaged 1.00 (0.61, 1.29 and 1.10). The cutoff score for mastery learning was 80% (pg. 77) vs. 90% for tutoring (pg. 81).
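
The averages quoted in the two summaries above can be checked against the per-experiment values with simple arithmetic. The sketch below uses only the numbers given in the text. The dictionary layout, the function name and the rounding tolerance are choices made for illustration.

```python
from statistics import mean

# label -> (average as reported in the text, per-experiment values for E1, E2, E3)
REPORTED = {
    "Burke (1983), lower mental processes, tutoring": (1.66, [1.53, 1.34, 2.11]),
    "Burke (1983), lower mental processes, mastery": (0.85, [0.73, 0.78, 1.04]),
    "Burke (1983), higher mental processes, tutoring": (2.11, [1.58, 2.65, 2.11]),
    "Burke (1983), higher mental processes, mastery": (1.19, [0.90, 1.47, 1.21]),
    "Anania (1981), gains, tutoring": (1.93, [1.77, 2.06, 1.95]),
    "Anania (1981), gains, mastery": (1.00, [0.61, 1.29, 1.10]),
}


def check_averages(reported, tolerance=0.005):
    """Return label -> True when the reported average matches the mean of the
    per-experiment values to within two-decimal rounding."""
    return {
        label: abs(mean(values) - average) <= tolerance + 1e-9
        for label, (average, values) in reported.items()
    }


results = check_averages(REPORTED)
```

Every entry in `results` is `True`, so each reported average agrees with the unweighted mean of its three experiments to two decimal places.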

References

- Aleven, V., & Koedinger, K. R. (2002). An effective metacognitive strategy: Learning by doing and explaining with a computer-based Cognitive Tutor. Cognitive Science, 26(2).

- Anania, J. (1981). The Effects of Quality of Instruction on the Cognitive and Affective Learning of Students. Unpublished PhD, University of Chicago, Chicago, IL.

- Bloom, B.S. (1984). The 2-sigma problem: The search for methods of group instruction as effective as one-to-one tutoring. Educational Researcher, 13, 4-16.

- Burke, A. J. (1983). Student's Potential for Learning Contrasted under Tutorial and Group Approaches to Instruction. Unpublished PhD, University of Chicago, Chicago, IL.

- Koedinger, K.R., Aleven, V., Roll, I., & Baker, R. (2009). In vivo experiments on whether supporting metacognition in intelligent tutoring systems yields robust learning. In D.J. Hacker, J. Dunlosky, & A.C. Graesser (Eds.), Handbook of Metacognition in Education (pp. 897-964). The Educational Psychology Series. New York: Routledge.

- Koedinger, K. R., Corbett, A. C., & Perfetti, C. (2010). The Knowledge-Learning-Instruction (KLI) framework: Toward bridging the science-practice chasm to enhance robust student learning. CMU-HCII Tech Report 10-102. Accessible at http://reports-archive.adm.cs.cmu.edu/hcii.html.

- Klahr, D., Perfetti, C. & Koedinger, K. R. (2009). Before Clinical Trials: How theory-guided research can inform educational science. Symposium at the second annual conference of the Society for Research on Educational Effectiveness. See http://www.sree.org/conferences/2009/pages/program_full.shtml.

- Salden, R. J. C. M. & Aleven, V. (2009). In vivo experimentation on self-explanations across domains. Symposium at the Thirteenth Biennial Conference of the European Association for Research on Learning and Instruction.

- Salden, R. J. C. M. & Koedinger, K. R. (2009). In vivo experimentation on worked examples across domains. Symposium at the Thirteenth Biennial Conference of the European Association for Research on Learning and Instruction.

- VanLehn, K. & Koedinger, K. R. (2007). In vivo experimentation for understanding robust learning: Pros and cons. Symposium at the annual meeting of the American Educational Research Association.