Difference between revisions of "Pavlik - Difficulty and Strategy"

(→Dependent Variables) |

(→Study Four) |

||

| (15 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

==Abstract== | ==Abstract== | ||

| + | The literature on metacognition generally proposes that metacognition is a good thing, but it seems plausible that at some difficulty levels, metacognition breaks down and learning that does occur may be based on shallow procedures that become disconnected to a general understanding. This problem may help explain why students who fall behind fail to catch up, since after they fall behind they may suffer not only because of missing prerequisites, but also because the increased difficulty prevents them from apply the strategies they need to apply to catch up. | ||

==Background & Significance== | ==Background & Significance== | ||

| Line 12: | Line 13: | ||

A previous pilot study (with about 80 students participating) looked at the relationship between various aspects of performance and aspects of self-regulation, strategy use, and motivation, as measured by Pintrich’s Motivated Strategies for Learning Questionnaire (MSLQ; (Pintrich & de Groot, 1990). Two months after taking the MSLQ students were assigned the FaCT system vocabulary practice for Lesson 17 of their curriculum for 30 minutes. Two weeks following this practice there was a post practice survey that asked questions about perceived difficulty, self-regulation and strategies, and perceived value. While the results have not been fully analyzed, in Experiment 1 we found some suggestive relationships between the measures we observed. Specifically, the MSLQ anxiety factor predicted higher reported difficulty (r=.42) on the post practice questionnaire (difficulty reports also correlated negatively (-.4) with performance during practice). Also, some individual post practice difficulty items marginally correlated negatively with amount of time spend practicing. Another suggestive result was that MSLQ anxiety was correlated (.31) with rote type strategic behavior questions on the MSLQ while rote strategic behaviors were also correlated with post practice difficulty (.29). Further analysis will focus on how specific student behaviors (e.g. the amount of practice trials that end with timeout as compared to a guess) is associated with MSLQ responses, post survey responses, or learning and performance measures. | A previous pilot study (with about 80 students participating) looked at the relationship between various aspects of performance and aspects of self-regulation, strategy use, and motivation, as measured by Pintrich’s Motivated Strategies for Learning Questionnaire (MSLQ; (Pintrich & de Groot, 1990). Two months after taking the MSLQ students were assigned the FaCT system vocabulary practice for Lesson 17 of their curriculum for 30 minutes. 
Two weeks following this practice there was a post practice survey that asked questions about perceived difficulty, self-regulation and strategies, and perceived value. While the results have not been fully analyzed, in Experiment 1 we found some suggestive relationships between the measures we observed. Specifically, the MSLQ anxiety factor predicted higher reported difficulty (r=.42) on the post practice questionnaire (difficulty reports also correlated negatively (-.4) with performance during practice). Also, some individual post practice difficulty items marginally correlated negatively with amount of time spend practicing. Another suggestive result was that MSLQ anxiety was correlated (.31) with rote type strategic behavior questions on the MSLQ while rote strategic behaviors were also correlated with post practice difficulty (.29). Further analysis will focus on how specific student behaviors (e.g. the amount of practice trials that end with timeout as compared to a guess) is associated with MSLQ responses, post survey responses, or learning and performance measures. | ||

| + | |||

| + | This is significant both for the practical implications for intervention design, such as helping us to better develop a design theory of how instructional factors may control strategic choices by students. It also addresses the PSLC thrust goal to "Evaluate interventions aimed at supporting different metacognitive abilities”. For this project, the difference in difficulty between conditions is the intervention, and the metacognitive ability we are looking at is strategy use applied to vocabulary learning. In the case of this domain, strategies include various mnemonic devices intended to make the formation of associations easier. | ||

More background and publications (on people page) at [http://www.optimallearning.org/home/index.html Optimal Learning website] | More background and publications (on people page) at [http://www.optimallearning.org/home/index.html Optimal Learning website] | ||

==Glossary== | ==Glossary== | ||

| + | *Strategy -- Any behaviors that a student uses to solve a problem that are not automatic processes. I.e. for the purposes of this project, a strategy is any '''controlled procedure''' that a student uses in learning. It is assumed that controlled procedures use prior conceptual/declarative knowledge, whereas rote nonstrategic procedures (e.g. repetition) make much less use of this prior knowledge. | ||

| + | |||

==Research questions== | ==Research questions== | ||

===Study One=== | ===Study One=== | ||

| Line 68: | Line 73: | ||

====Explanation==== | ====Explanation==== | ||

| − | Issues preventing Study 1 from producing better results included the lack of post-testing due to problems with institutional support for post-testing in the learnlab home department. | + | Issues preventing Study 1 from producing better results included the lack of post-testing due to problems with institutional support for post-testing in the learnlab home department. Our model from Experiment 1 (see Pavlik, 2010) suggests that the student’s metacognitive assessment of the usefulness of the exercise may motivate them to engage strategies. This highlights the importance of providing instruction that meets certain implicit standards that students have for what works. If these standards are met, it seems that strategic engagement is enhanced. |

===Study Two=== | ===Study Two=== | ||

| Line 105: | Line 110: | ||

====Dependent Variables==== | ====Dependent Variables==== | ||

| − | Before practice sessions on Lesson 15,1,6,17 and 18, an abbreviated version Motivated Strategies and Learning Questionnaire was administered from SurveyMonkey.com. Also included were 6 questions on metacognitive strategies for learning vocabulary. Finally there was a pre-quiz with 16 items from lessons 15-16. | + | Before practice sessions on Lesson 15,1,6,17 and 18, an abbreviated version Motivated Strategies and Learning Questionnaire was administered from SurveyMonkey.com. Also included were 6 questions on metacognitive strategies for learning vocabulary. Finally there was a pre-quiz with 16 items from lessons 15-16. Following practice lessons there was a detailed survey of user attitudes. Finally there was a post-quiz with 16 items from lessons 15-16. During practice, every two minutes the students were cycled through the following questions: |

| − | |||

| − | |||

| − | Following practice lessons there was a detailed survey of user attitudes. Finally there was a post-quiz with 16 items from lessons 15-16. | ||

| − | |||

| − | |||

| − | During practice, every two minutes the students were cycled through the following questions: | ||

*How easy was the recent practice? | *How easy was the recent practice? | ||

| Line 127: | Line 126: | ||

====Results==== | ====Results==== | ||

| + | [[Media:MM_Slides_Pavlik_11-12-10_Thrust_Meeting.pptx|Study 1 and 2 Talk]] | ||

| + | |||

====Explanation==== | ====Explanation==== | ||

| − | |||

| − | |||

| − | + | Preliminary modeling of our data on these questions suggests that difficulty may not be causing directly causing breakdown in learning (such as might be predicted by a cognitive load theory), but rather that difficulty’s effect on strategies may be mediated by student assessments of the usefulness of the procedure being asked of them. In other words, difficulty may be acceptable for students if students judge that the difficulty is useful to them. | |

| + | |||

| + | |||

===Study Three=== | ===Study Three=== | ||

| Line 159: | Line 160: | ||

====Hypothesis==== | ====Hypothesis==== | ||

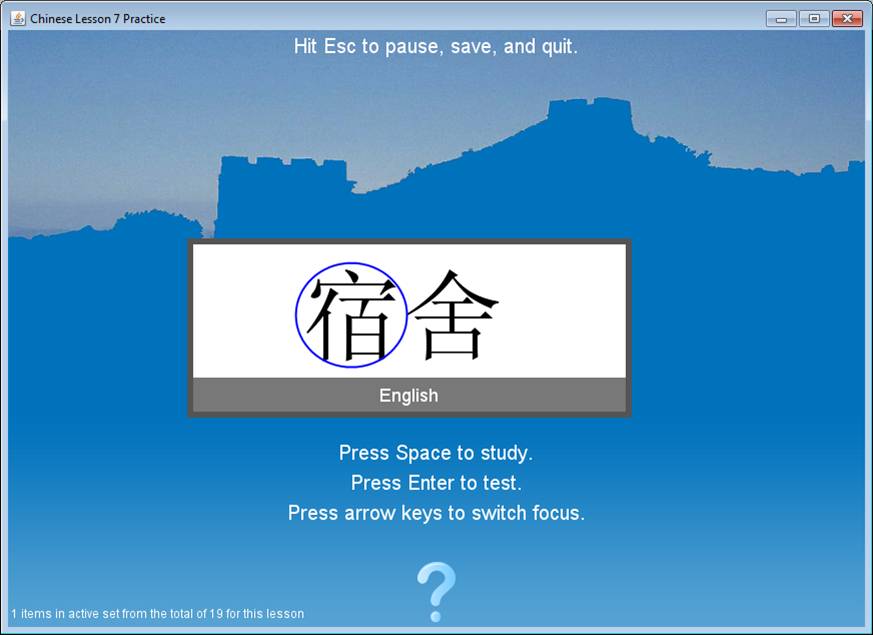

| + | Providing more explicit affordances for strategy use should allow students to use more strategies. Because we will combine a new strategy friendly interface with 3 levels of difficulty, we will be able to measure the precise effect of the difficulty on the student strategy behaviors. More specifically, students can choose to learn new information about each character with each repetition (which we expect students will do less frequently as difficulty increases) or they can choose to review old information (which we expect students will do more frequently as difficulty increases). | ||

| + | |||

| + | [[Image:New Interface.jpg]] | ||

| + | |||

====Independent Variables==== | ====Independent Variables==== | ||

====Dependent Variables==== | ====Dependent Variables==== | ||

====Results==== | ====Results==== | ||

| + | Low participation since it was not included on the syllabus due to an oversight. | ||

| + | High attrition > 50%. | ||

| + | Programmer got everything else right, but missed recording the choice of student practice. | ||

| + | Data may still show differences ins latency as a result of strategy choices. | ||

| + | |||

====Explanation==== | ====Explanation==== | ||

===Study Four=== | ===Study Four=== | ||

| Line 181: | Line 191: | ||

| '''Data available in DataShop''' || TBD<br> | | '''Data available in DataShop''' || TBD<br> | ||

* '''Pre/Post Test Score Data:''' TBD | * '''Pre/Post Test Score Data:''' TBD | ||

| − | * '''Paper or Online Tests:''' | + | * '''Paper or Online Tests:''' TBD |

* '''Scanned Paper Tests:''' TBD | * '''Scanned Paper Tests:''' TBD | ||

* '''Blank Tests:''' TBD | * '''Blank Tests:''' TBD | ||

| Line 192: | Line 202: | ||

====Results==== | ====Results==== | ||

====Explanation==== | ====Explanation==== | ||

| + | |||

==Further Information== | ==Further Information== | ||

===Connections to Other Studies=== | ===Connections to Other Studies=== | ||

Latest revision as of 20:35, 3 January 2011

Difficulty and Strategy


Abstract

The literature on metacognition generally proposes that metacognition is a good thing, but it seems plausible that at some difficulty levels metacognition breaks down, and whatever learning does occur may be based on shallow procedures that become disconnected from a general understanding. This problem may help explain why students who fall behind fail to catch up: after they fall behind, they may suffer not only from missing prerequisites, but also because the increased difficulty prevents them from applying the strategies they need to catch up.

Background & Significance

Currently the FaCT (Fact and Concept Training) System is deployed in the Chinese LearnLab and has been used in the French and ESL LearnLabs in prior work. The FaCT system allows both randomized and adaptive, model-based scheduling of practice items, either in the lab or integrated into a class. In past in vivo studies, some students did not seem motivated to complete more than the minimum work with the adaptive system to learn vocabulary. Several motivational and metacognitive factors could be responsible for the relatively low usage and/or poor performance of some students:

- Students find the tutor to be too difficult or too easy

- Students do not perceive a value to the practice

- Students do not expect to do well

- Students do not use appropriate strategies in the tutor

We plan to conduct in vivo experiments to determine how interventions that modify these factors may improve immediate learning, acceleration of future learning, and longer-term transfer to in-class measures.

A previous pilot study (with about 80 students participating) looked at the relationship between various aspects of performance and aspects of self-regulation, strategy use, and motivation, as measured by Pintrich’s Motivated Strategies for Learning Questionnaire (MSLQ; Pintrich & de Groot, 1990). Two months after taking the MSLQ, students were assigned 30 minutes of FaCT system vocabulary practice for Lesson 17 of their curriculum. Two weeks after this practice, a post-practice survey asked questions about perceived difficulty, self-regulation and strategies, and perceived value. While the results have not been fully analyzed, in Experiment 1 we found some suggestive relationships between the measures we observed. Specifically, the MSLQ anxiety factor predicted higher reported difficulty (r=.42) on the post-practice questionnaire (difficulty reports also correlated negatively (-.4) with performance during practice). Also, some individual post-practice difficulty items correlated marginally and negatively with the amount of time spent practicing. Another suggestive result was that MSLQ anxiety was correlated (.31) with rote-type strategic behavior questions on the MSLQ, while rote strategic behaviors were also correlated with post-practice difficulty (.29). Further analysis will focus on how specific student behaviors (e.g. the number of practice trials that end with a timeout as compared to a guess) are associated with MSLQ responses, post-survey responses, or learning and performance measures.

This work is significant for its practical implications for intervention design, such as helping us to better develop a design theory of how instructional factors may control strategic choices by students. It also addresses the PSLC thrust goal to "Evaluate interventions aimed at supporting different metacognitive abilities". For this project, the difference in difficulty between conditions is the intervention, and the metacognitive ability we are looking at is strategy use applied to vocabulary learning. In this domain, strategies include various mnemonic devices intended to make the formation of associations easier.
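The correlations reported for the pilot study (for example, r = .42 between MSLQ anxiety and reported difficulty) could be computed along the following lines. This is a minimal sketch, assuming Pearson's r was the statistic used; `pearson_r` is a hypothetical helper and the scores are made-up placeholders, not study data.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Centered cross-product and sums of squares.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Made-up illustrative scores for five students (NOT study data).
anxiety = [2.0, 3.5, 4.0, 5.5, 6.0]      # hypothetical MSLQ anxiety factor scores
difficulty = [1.0, 2.0, 2.0, 3.0, 3.0]   # hypothetical post-practice difficulty ratings
r = pearson_r(anxiety, difficulty)
print(f"r = {r:.2f}")
```

With roughly 80 students, coefficients of the size reported here would also need a significance test before being interpreted, which this sketch omits.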

More background and publications (on the people page) are available at the Optimal Learning website (http://www.optimallearning.org/home/index.html).

Glossary

- Strategy -- Any behavior that a student uses to solve a problem that is not an automatic process. That is, for the purposes of this project, a strategy is any controlled procedure that a student uses in learning. It is assumed that controlled procedures use prior conceptual/declarative knowledge, whereas rote nonstrategic procedures (e.g. repetition) make much less use of this prior knowledge.

Research questions

Study One

| Study Name/Number | Study 1 |
| Study Start Date | Oct 2009 |
| Study End Date | Nov 2010 |
| LearnLab Site | Carnegie Mellon Univ., Chinese I Course |
| LearnLab Course | Chinese |
| Number of Students | 80 |
| Total Participant Hours | 160 |
| Data available in DataShop | Yes |

Hypothesis

- Experiment 1: Effect of Difficulty on Strategy Reporting

- Date: November 2009

- Students: 80

- Participant hours: 160 total

- DataShop: Yes

Experiment 1 examined how difficulty may account for lack of persistence and reduced performance. Specifically, my working model of motivation (based on Zimmerman’s self-regulation theory (2008) and Bandura’s Triadic theory (Zimmerman, 1989)) highlighted the importance of perceived task difficulty in determining efficacy and value, which in turn determine planning, intention, and action (which produces a cycle as strategy is applied to the task and difficulty is evaluated again with each action cycle). Given this model, the difficulty factor is notable because it should feed back to influence value (when the task is too easy) or efficacy (when the task is too hard). If difficulty is too high or too low, this feedback has the potential to collapse the whole process, resulting in a student who quits or who persists without engagement. Using all 7-8 sections of Chinese I and II (~80-100 students) over 2 lessons, this study (beginning in the Fall semester) used 2 levels of initial difficulty, set by fixing the initial learning value in the adaptive student model (setting initial learning high creates a high difficulty condition, etc.). Students were given a range of persistence that was acceptable for completing the in-class assignment (from 20 to 40 minutes was counted for course credit for each lesson). In addition to pre- and post-practice motivational surveys, we included self-evaluative assessments that periodically (every minute) asked for the student’s opinion about their experience. The goal of recording these responses was to show that self-reports of difficulty, efficacy, value, and strategy use predict persistence and performance during the practice.

Independent Variables

Initial difficulty high or low.

Dependent Variables

Before the practice sessions on Lessons 7 and 8, the full version of the Motivated Strategies for Learning Questionnaire (MSLQ) was administered via SurveyMonkey.com. Following the practice lessons there was a detailed survey of user attitudes. During practice, every minute the students were cycled through the following questions:

- How easy was the recent practice?
  - too hard
  - just right
  - too easy
- How useful for learning was the recent practice?
  - not useful
  - useful
  - very useful
- Were you able to use any learning strategies during the recent practice?
  - mostly used repetition
  - mix of strategy and repetition
  - mostly used strategies

Results

Weak results may have been due to too little variability between the difficulty conditions. Despite this, an inverted U-shaped quadratic relationship was found, in which middle ("just right") easiness ratings during practice predicted higher usefulness ratings during practice.
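One way to check for an inverted U of this kind is to fit a quadratic and inspect the sign of the squared term. The following is a minimal sketch under stated assumptions: the numeric coding of the ratings (1 = too hard, 2 = just right, 3 = too easy; usefulness 1 to 3), the helper `fit_quadratic`, and the data are all hypothetical, and this is not necessarily the model used in the study.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*x + c*x**2; returns (a, b, c)."""
    # Build the 3x3 normal equations (X^T X) beta = X^T y as an augmented matrix.
    rows = [[1.0, x, x * x] for x in xs]
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(3)]
        + [sum(r[i] * y for r, y in zip(rows, ys))]
        for i in range(3)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda k: abs(aug[k][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("need at least three distinct x values")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for k in range(col + 1, 3):
            factor = aug[k][col] / aug[col][col]
            for j in range(col, 4):
                aug[k][j] -= factor * aug[col][j]
    # Back substitution.
    beta = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        rest = sum(aug[i][j] * beta[j] for j in range(i + 1, 3))
        beta[i] = (aug[i][3] - rest) / aug[i][i]
    return tuple(beta)


# Made-up ratings (NOT study data), using the hypothetical coding described above.
easiness = [1, 1, 2, 2, 3, 3]
usefulness = [1, 2, 3, 3, 2, 1]
a, b, c = fit_quadratic(easiness, usefulness)
peak = -b / (2 * c)  # x-value where the fitted curve reaches its maximum (when c < 0)
print(f"c = {c:.2f}, peak at easiness = {peak:.2f}")
```

A negative `c` with the peak inside the rating range is the pattern described as an inverted U. With only three easiness levels the quadratic simply passes through the three group means, so the fit describes the shape rather than testing it.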

Explanation

One issue that prevented Study 1 from producing better results was the lack of post-testing, which was due to problems with institutional support for post-testing in the LearnLab home department. Our model from Experiment 1 (see Pavlik, 2010) suggests that a student’s metacognitive assessment of the usefulness of the exercise may motivate them to engage strategies. This highlights the importance of providing instruction that meets certain implicit standards that students have for what works. If these standards are met, it seems that strategic engagement is enhanced.

Study Two

| Study Name/Number | Study 2 |
| Study Start Date | March 2010 |
| Study End Date | April 2010 |
| LearnLab Site | Carnegie Mellon Univ., Chinese II Course |
| LearnLab Course | Chinese |
| Number of Students | 80 |
| Total Participant Hours | 320 |
| Data available in DataShop | Yes |

Hypothesis

- Experiment 2: Effect of Difficulty and Choice of Difficulty on Student Strategies, Performance, and Persistence

- Date: March and April 2010

- Students: 80

- Participant hours: 320 total

- DataShop: Yes

This experiment was an expanded version of Experiment 1 that included a pre- and post-test and manipulated the initial difficulty of the practice. In addition, we piloted a strategy questionnaire specific to the Chinese task that provided information on strategies and asked students to rate how often they used them. This experiment will help determine whether different initial difficulty produces gains in persistence or performance measures, and will also allow us to understand the effect of student control of difficulty. Provided that students use the difficulty slider to calibrate practice to their motivationally optimal difficulty, control of the slider should enhance their use of strategies. Data is still being analyzed.

Independent Variables

Each student's initial difficulty was randomly assigned. Pre-testing and pre-survey were independent of treatment.

Dependent Variables

Before the practice sessions on Lessons 15, 16, 17, and 18, an abbreviated version of the Motivated Strategies for Learning Questionnaire (MSLQ) was administered via SurveyMonkey.com, along with 6 questions on metacognitive strategies for learning vocabulary and a pre-quiz with 16 items from Lessons 15-16. Following the practice lessons there was a detailed survey of user attitudes and a post-quiz with 16 items from Lessons 15-16. During practice, every two minutes the students were cycled through the following questions:

- How easy was the recent practice?
  - too hard
  - just right
  - too easy
- How useful for learning was the recent practice?
  - not useful
  - useful
  - very useful
- Were you able to use any learning strategies during the recent practice?
  - mostly used repetition
  - mix of strategy and repetition
  - mostly used strategies

Results

See the Study 1 and 2 talk slides (MM_Slides_Pavlik_11-12-10_Thrust_Meeting.pptx).

Explanation

Preliminary modeling of our data on these questions suggests that difficulty may not be directly causing a breakdown in learning (as might be predicted by cognitive load theory), but rather that difficulty’s effect on strategies may be mediated by students' assessments of the usefulness of the procedure being asked of them. In other words, difficulty may be acceptable to students if they judge that the difficulty is useful to them.
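The mediation idea above (difficulty influencing strategy use through judged usefulness) can be illustrated with a simple product-of-coefficients calculation. This is only a sketch under stated assumptions: it uses ordinary least-squares regressions, a hypothetical helper `mediation_paths`, and made-up numbers; it is not the preliminary model referred to in the text.

```python
def mediation_paths(x, m, y):
    """Product-of-coefficients mediation estimates for X -> M -> Y.

    Returns (a, b, direct, total) where
      a      = slope of M regressed on X,
      b      = coefficient of M when Y is regressed on X and M,
      direct = coefficient of X in that same regression,
      total  = slope of Y regressed on X alone.
    For least-squares fits, total == direct + a * b.
    """
    n = len(x)
    if not (n == len(m) == len(y)) or n < 3:
        raise ValueError("need three equal-length sequences with at least 3 values")
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    # Centered sums of squares and cross-products.
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    det = sxx * smm - sxm ** 2
    if sxx == 0 or abs(det) < 1e-12:
        raise ValueError("X must vary and must not be perfectly collinear with M")
    a = sxm / sxx
    total = sxy / sxx
    direct = (sxy * smm - smy * sxm) / det
    b = (smy * sxx - sxy * sxm) / det
    return a, b, direct, total


# Made-up values for six students (NOT study data).
difficulty = [1, 2, 3, 4, 5, 6]   # hypothetical difficulty level
usefulness = [5, 5, 3, 4, 2, 1]   # hypothetical usefulness rating (mediator)
strategy = [4, 5, 3, 3, 2, 1]     # hypothetical strategy-use score
a, b, direct, total = mediation_paths(difficulty, usefulness, strategy)
print(f"indirect = {a * b:.2f}, direct = {direct:.2f}, total = {total:.2f}")
```

If most of the total effect is carried by the indirect term `a * b` and the direct term is near zero, the pattern is consistent with mediation; a real analysis would also need standard errors or bootstrapped intervals.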

Study Three

| Study Name/Number | Study 3 |
| Study Start Date | Oct 2010 |
| Study End Date | Nov 2011 |
| LearnLab Site | Carnegie Mellon Univ., Chinese I Course |
| LearnLab Course | Chinese |
| Number of Students | TBD |
| Total Participant Hours | TBD |
| Data available in DataShop | No, errors in data |

Hypothesis

Providing more explicit affordances for strategy use should allow students to use more strategies. Because we will combine a new strategy-friendly interface with 3 levels of difficulty, we will be able to measure the effect of difficulty on student strategy behaviors. More specifically, with each repetition students can choose to learn new information about each character (which we expect them to do less frequently as difficulty increases) or to review old information (which we expect them to do more frequently as difficulty increases).

Independent Variables

Dependent Variables

Results

Participation was low because the study was not included on the syllabus due to an oversight, and attrition was high (> 50%). The software logged everything else correctly but did not record students' practice choices. The data may still show differences in latency as a result of strategy choices.

Explanation

Study Four

| Study Name/Number | Study 4 |
| Study Start Date | TBD |
| Study End Date | TBD |
| LearnLab Site | Carnegie Mellon Univ., Chinese II Course |
| LearnLab Course | Chinese |
| Number of Students | TBD |
| Total Participant Hours | TBD |
| Data available in DataShop | TBD |

Hypothesis

Independent Variables

Dependent Variables

Results

Explanation

Further Information

Connections to Other Studies

Annotated Bibliography

- Pavlik Jr., P. I. (2006). Transfer effects in Chinese vocabulary learning. In R. Sun (Ed.), Proceedings of the Twenty-Eighth Annual Conference of the Cognitive Science Society (pp. 2579). Mahwah, NJ: Lawrence Erlbaum.

- Pavlik Jr., P. I. (2008). Classroom Testing of a Discrete Trial Practice System. Poster presented at the 34th Annual Meeting of the Association for Behavior Analysis, Chicago, IL.

- Pavlik Jr., P. I. (2010). Data Reduction Methods Applied to Understanding Complex Learning Hypotheses. Poster presented at the 3rd International Conference on Educational Data Mining (Proceedings, pp. 311-312), Pittsburgh, PA.

- Pavlik Jr., P. I., Bolster, T., Wu, S., Koedinger, K. R., & MacWhinney, B. (2008). Using Optimally Selected Drill Practice to Train Basic Facts. In B. Woolf, E. Aïmeur & R. Nkambou (Eds.), Proceedings of the 9th International Conference on Intelligent Tutoring Systems. Montreal, Canada.

- Pavlik Jr., P. I., Presson, N., Dozzi, G., Wu, S.-m., MacWhinney, B., & Koedinger, K. R. (2007). The FaCT (Fact and Concept Training) System: A new tool linking cognitive science with educators. In D. McNamara & G. Trafton (Eds.), Proceedings of the Twenty-Ninth Annual Conference of the Cognitive Science Society (pp. 397-402). Mahwah, NJ: Lawrence Erlbaum.

- Pavlik Jr., P. I., Presson, N., & Hora, D. (2008). Using the FaCT System (Fact and Concept Training System) for Classroom and Laboratory Experiments. Inter-Science of Learning Center Conference, Pittsburgh, PA.

- Pavlik Jr., P. I., Presson, N., & Koedinger, K. R. (2007). Optimizing knowledge component learning using a dynamic structural model of practice. In R. Lewis & T. Polk (Eds.), Proceedings of the Eighth International Conference of Cognitive Modeling (pp. 37-42). Ann Arbor: University of Michigan.

References

- Atkinson, R. C. (1975). Mnemotechnics in second-language learning. American Psychologist, 30, 821-828.

- Pavlik Jr., P. I. (2005, dissertation). The microeconomics of learning: Optimizing paired-associate memory. Dissertation Abstracts International: Section B: The Sciences and Engineering, 66, 5704.

- Pavlik Jr., P. I. (2007a). Timing is an order: Modeling order effects in the learning of information. In F. E. Ritter, J. Nerb, E. Lehtinen & T. O'Shea (Eds.), In order to learn: How order effects in machine learning illuminate human learning (pp. 137-150). New York: Oxford University Press.

- Pavlik Jr., P. I. (2007b). Understanding and applying the dynamics of test practice and study practice. Instructional Science, 35, 407-441.

- Pavlik Jr., P. I., & Toth, J. (2010). How to Build Bridges between Intelligent Tutoring System Subfields of Research. In J. Kay, V. Aleven & J. Mostow (Eds.), Proceedings of the 10th International Conference on Intelligent Tutoring Systems, Part II (pp. 103-112). Pittsburgh, PA: Springer, Heidelberg.

- Pintrich, P. R., & de Groot, E. V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82, 33-40.

Future Plans

See Study 4.