Latest revision as of 17:36, 10 October 2008

Self-explanation: Meta-cognitive vs. justification prompts

Robert G.M. Hausmann, Brett van de Sande, Sophia Gershman, & Kurt VanLehn

Summary Table

| Field | Value |
|---|---|
| PIs | Robert G.M. Hausmann (Pitt), Brett van de Sande (Pitt), Sophia Gershman (WHRHS), & Kurt VanLehn (Pitt) |
| Other Contributors | Tim Nokes (Pitt) |
| Study Start Date | Sept. 1, 2007 |
| Study End Date | Aug. 31, 2008 |
| LearnLab Site | Watchung Hills Regional High School (WHRHS) |
| LearnLab Course | Physics |
| Number of Students | N = 75 |
| Total Participant Hours | 150 hrs. |
| DataShop | Anticipated: June 1, 2008 |

Abstract

Research on studying examples, and on learning from text in general, shows that students learn more when they are prompted to self-explain the text as they read it. Experimenters have generally used two types of prompts: meta-cognitive and justification. An example of a meta-cognitive prompt would be, "What did this sentence tell you that you didn't already know?" and an example of a justification prompt would be, "What reasoning or principles justifies this sentence's claim?" To date, no study has included both types of prompts, yet there are good theoretical reasons to expect them to affect student learning differently. This study will compare them directly in a single experiment with high-school physics students.

Background and Significance

Self-explanation has been empirically demonstrated to be an effective learning strategy, both in the laboratory and in the classroom. Effect sizes range from d = .74 – 1.12 in the lab for difficult problems (Chi, DeLeeuw, Chiu, & LaVancher, 1994; McNamara, 2004) to d = .44 – .92 in the classroom (Hausmann & VanLehn, 2007). However, both the amount and the quality of spontaneously produced self-explanations are highly variable (Renkl, 1997). To increase both, researchers have designed a variety of prompting procedures that solicit student-generated explanations. An open question, though, is how to structure the learning environment to best support learning from self-explanation. One approach is to design instructional prompts that increase the probability that students will frequently generate high-quality self-explanations. But what counts as a “high-quality self-explanation”?
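The effect sizes above are reported as Cohen's d, the standardized difference between two group means. The sketch below computes one common variant (mean difference divided by the pooled sample standard deviation); the cited studies may have used a different variant, and the scores in the usage lines are invented for illustration, not data from those studies.

```python
import math
from statistics import mean, stdev


def cohens_d(treatment: list[float], control: list[float]) -> float:
    """Cohen's d using the pooled sample standard deviation (one common variant)."""
    n1, n2 = len(treatment), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    s1, s2 = stdev(treatment), stdev(control)  # sample SDs (n - 1 denominator)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical post-test scores, for illustration only.
prompted = [78.0, 85.0, 90.0, 72.0, 88.0]
unprompted = [70.0, 75.0, 80.0, 65.0, 79.0]
print(f"d = {cohens_d(prompted, unprompted):.2f}")
```

Pooling the two standard deviations assumes the groups have similar variances; other variants (e.g., dividing by the control group's SD alone) can give noticeably different values on small samples.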

The answer may depend on the type of knowledge to be learned. Knowledge can be broadly categorized as either procedural or declarative. In physics, students often learn the procedural skill of problem solving by studying examples. An example is a solution to a problem, derived in a series of steps, each of which applies either a physics principle or a mathematical operator. The transition from one step to the next can therefore be justified by citing the applicable principle or operator. Accordingly, an effective prompt for procedural learning asks the student to justify each step of an example with a domain principle or operator. For the purposes of this proposal, we call this type of prompt a “justification prompt.”

Contrast this with a high-quality self-explanation in a declarative domain, such as the human circulatory system. Here, the student’s task is to develop a robust mental model of a physical system. Instead of a solution example broken down into steps, the information is presented as a text, one sentence at a time. As each sentence is read, the student’s goal is to revise or augment his or her initial mental model (Chi, 2000). A high-quality explanation in this domain requires the student to consider the relationships among the structure, behavior, and function of the circulatory system’s anatomical features. A student who begins reading about the heart rarely, if ever, comes to the task as a blank slate; more likely, she has an initial mental model that is flawed in some way. She must therefore revise that model to align it with the content of the text. This is generally not easy, because the reader must use her prior knowledge to comprehend the text while simultaneously revising that same knowledge. In this case, a high-quality self-explanation may consist of reflecting on one’s own understanding, comparing it to the target material, explaining the discrepancies between the two, and revising the mental model. We therefore refer to prompts that encourage this type of behavior as “meta-cognitive prompts.”

Thus, different types of self-explanation prompts may lead to different learning outcomes: justification-based prompts may inspire more gap-filling activities, while meta-cognitive prompts may evoke more mental model repair. Although both types of prompts have been used in prior research on self-explaining, their differential impact on robust learning has not yet been systematically explored.

Glossary

Research question

How is robust learning affected by justification-based vs. meta-cognitive prompts for self-explanation?

Independent variables

Only one independent variable, with three levels, was used:

- Prompt Type: meta-cognitive prompts vs. justification-based prompts vs. attention-focusing prompts

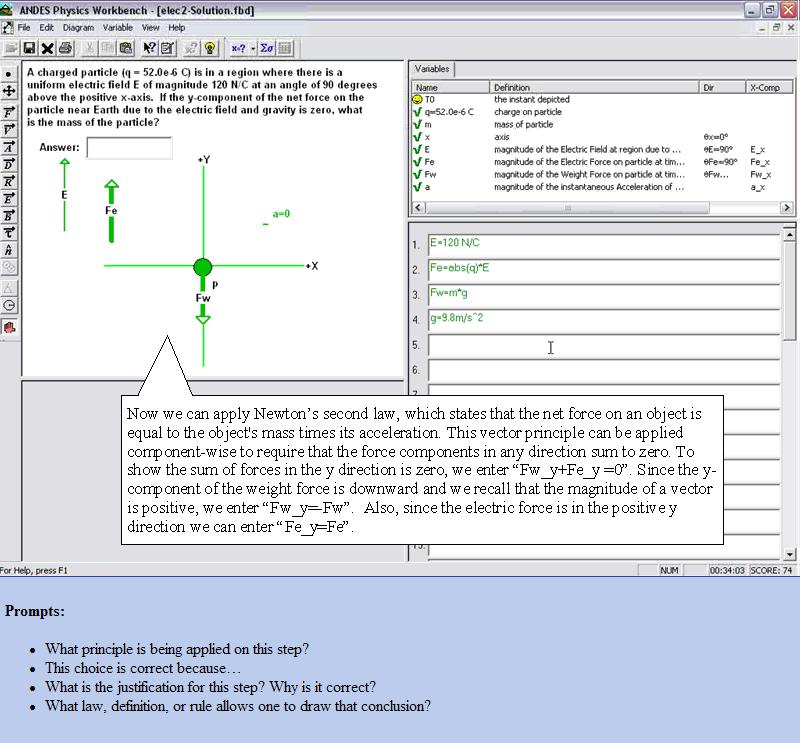

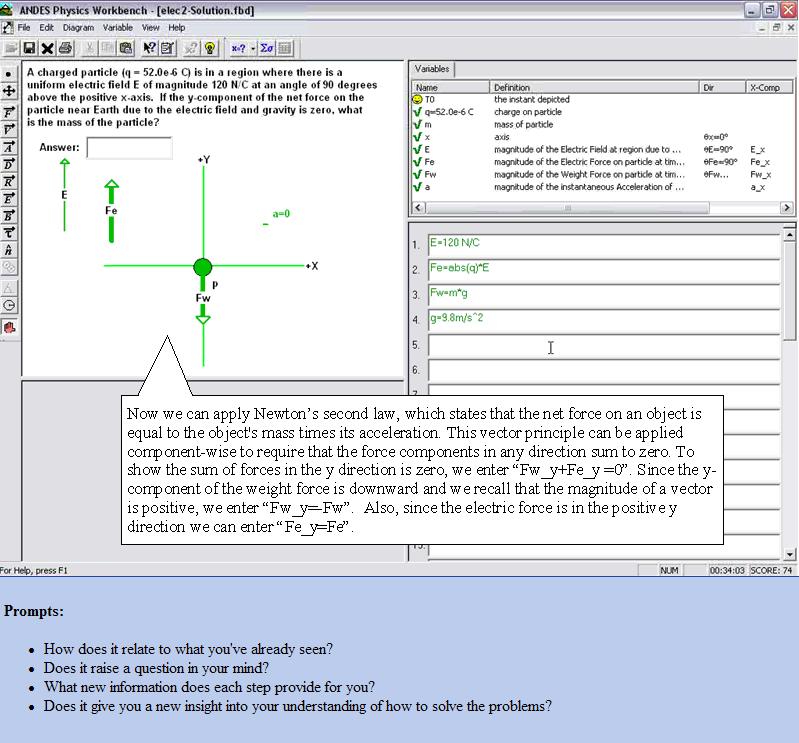

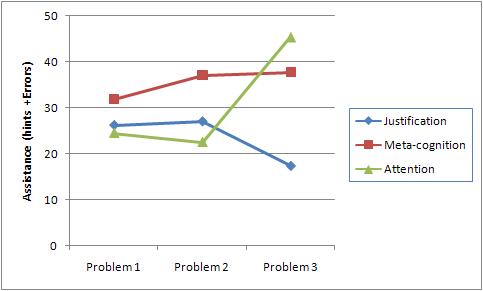

Prompting for an explanation was intended to increase the probability that the individual or dyad will traverse a useful learning-event path. Meta-cognitive prompts were designed to increase the students' awareness of their developing knowledge. The justification-based prompts were designed to motivate students to explicitly articulate the principle needed to solve a particular problem. Finally, the attention-focusing prompts were designed as a set of control prompts. The purpose was to steer their attention to the examples that they are studying, without motivating any particular type of active cognitive processing.

Figure 1. An example from the Justification-based condition

Figure 2. An example from the Meta-cognitive condition

Figure 3. An example from the Attention-focusing condition

Hypothesis

This experiment implements the following instructional principle: Prompted Self-explanation

Dependent variables

- Normal post-test
  - Near transfer, immediate: During training, worked examples alternated with problems, which were solved using Andes. Each problem was similar to the example that preceded it, so performance on it is a measure of normal learning (near transfer, immediate testing). Assistance scores (the sum of errors and help requests, normalized by the number of transactions) were calculated from the log data.

- Robust learning
  - Long-term retention: One problem on the students’ regular mid-term exam was similar to the training problems. Because this exam occurred a week after the training, and the training itself took just under 2 hours, performance on this problem is considered a test of long-term retention.
  - Near and far transfer: After training, students did their regular homework problems using Andes. They could do them whenever they wanted, but most completed them just before the exam. The homework problems were divided according to their similarity to the training problems, and assistance scores were calculated.
  - Accelerated future learning: The training covered electric fields, and it was followed in the course by a unit on magnetic fields. Log data from the magnetic-field homework were analyzed as a measure of accelerated future learning.
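The assistance score used for the near-transfer measure (errors plus help requests, normalized by the number of transactions) can be sketched as follows. The per-problem record and its field names are hypothetical; the actual Andes log format is not described here.

```python
from dataclasses import dataclass


@dataclass
class ProblemLog:
    """Hypothetical per-problem summary of one student's tutor log."""
    errors: int         # incorrect entries
    help_requests: int  # hint or help requests
    transactions: int   # total logged student actions on the problem


def assistance_score(log: ProblemLog) -> float:
    """Normalized assistance score: (errors + help requests) / transactions.

    Lower scores mean the student needed less assistance on the problem.
    """
    if log.transactions <= 0:
        raise ValueError("a problem with no transactions has no assistance score")
    return (log.errors + log.help_requests) / log.transactions


print(assistance_score(ProblemLog(errors=3, help_requests=2, transactions=20)))
```

Normalizing by transactions keeps scores comparable across problems of different length, which matters here because the problems became progressively more complex.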

Results

Procedure

Participants were randomly assigned to condition. They were first trained in the explanation activity for their condition: they read the experiment's instructions, presented on a webpage, followed by the prompts that would appear after each step of the example.
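The study states only that participants were randomly assigned to one of the three prompt conditions. A minimal sketch of one way to do this, assuming a shuffled round-robin scheme that keeps group sizes within one of each other (the actual randomization method is not described); the student IDs are hypothetical.

```python
import random

CONDITIONS = ("justification", "meta-cognitive", "attention-focusing")


def assign_conditions(student_ids, seed=None):
    """Shuffle the students, then deal them round-robin into the three conditions."""
    rng = random.Random(seed)  # seeded generator makes the assignment reproducible
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    return {sid: CONDITIONS[i % len(CONDITIONS)] for i, sid in enumerate(shuffled)}


# 75 hypothetical student IDs, matching the reported N.
assignment = assign_conditions([f"S{n:02d}" for n in range(1, 76)], seed=2008)
```

Dealing a shuffled list round-robin, rather than drawing each student's condition independently, guarantees balanced groups (25 per condition for N = 75).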

All of the participants were enrolled in a year-long high-school physics course. Because the task domain, electrodynamics, was taught at the beginning of the spring semester, midway through the course, all of the students were already familiar with the Andes physics tutor and did not need any training in its interface. Unlike in our previous lab experiment, they did not solve a warm-up problem; instead, they started the experiment with a fairly complex problem.

After solving this problem, participants watched a video of an isomorphic problem being solved. Note that this procedure differs slightly from previous research, in which examples were presented before problems were solved (e.g., Sweller & Cooper, 1985, Experiment 2). The videos were decomposed into steps, and students were prompted to explain each step. The cycle of solving problems and explaining examples repeated until either three problems had been solved or the class period was over. The problems were designed to become progressively more complex.
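The session structure just described (solve a problem, then study a prompted video example, repeating until three problems are solved or the class period ends) can be sketched as a simple loop. The activity durations and period length are hypothetical inputs; in the study, timing depended on the students and the class schedule.

```python
def run_session(problem_minutes, example_minutes, period_minutes, max_problems=3):
    """Alternate solving a problem with studying a prompted video example,
    stopping once max_problems problems are solved or the next activity
    would not fit in the class period.

    problem_minutes[i] and example_minutes[i] are hypothetical durations of the
    i-th problem and the example that follows it. Returns completed activities.
    """
    elapsed = 0.0
    completed = []
    for i, p_time in enumerate(problem_minutes[:max_problems]):
        if elapsed + p_time > period_minutes:
            break  # class period over before this problem could be finished
        elapsed += p_time
        completed.append(f"problem {i + 1}")
        if i + 1 == max_problems or i >= len(example_minutes):
            break  # enough problems solved, or no example follows this problem
        e_time = example_minutes[i]
        if elapsed + e_time > period_minutes:
            break  # not enough time left to study the next example
        elapsed += e_time
        completed.append(f"example {i + 1}")
    return completed


plan = run_session([20, 25, 30], [10, 10, 10], period_minutes=45)
```

With a short period the loop ends early, which is why some students may have completed fewer than three problems.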

The results reported below are based on a median split on the assistance score for the first problem. Only the students below the median in prior knowledge (i.e., those who needed more assistance on the first problem) are included in the analyses, because the students with strong prior knowledge were unaffected by the type of prompt.
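The median split and the per-condition comparison can be sketched as follows. This assumes our reading of the text: the analyzed group consists of students whose first-problem assistance score was above the median (they needed more help). Students exactly at the median are excluded here, which is an arbitrary choice; all IDs, scores, and condition labels are hypothetical.

```python
from statistics import median


def low_prior_knowledge_group(first_problem_scores):
    """IDs of students whose first-problem assistance score is above the median.

    Higher assistance scores mean more errors and help requests, so this keeps
    the students with weaker prior knowledge. Ties at the median are excluded.
    """
    cutoff = median(first_problem_scores.values())
    return {sid for sid, score in first_problem_scores.items() if score > cutoff}


def mean_by_condition(scores, conditions, students):
    """Mean score per condition, restricted to the given students."""
    groups = {}
    for sid in students:
        groups.setdefault(conditions[sid], []).append(scores[sid])
    return {cond: sum(vals) / len(vals) for cond, vals in groups.items()}


# Hypothetical data for six students.
first = {"S01": 0.10, "S02": 0.45, "S03": 0.30, "S04": 0.60, "S05": 0.20, "S06": 0.50}
conditions = {"S01": "justification", "S02": "justification", "S03": "meta-cognitive",
              "S04": "meta-cognitive", "S05": "attention-focusing", "S06": "justification"}
later = {"S02": 0.20, "S04": 0.40, "S06": 0.30}  # assistance on a later problem

weak = low_prior_knowledge_group(first)
means = mean_by_condition(later, conditions, weak)
```

Splitting on the first problem, before any example was studied, means the grouping reflects prior knowledge rather than an effect of the prompts.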

- Normal post-test
  - Near transfer, immediate: The justification-prompt condition showed lower normalized assistance scores on later problems than the other two conditions.
  - In addition, the justification-prompt condition showed faster solution times on the last problem than the other two conditions.

- Robust learning
  - Near transfer, retention: to be collected and analyzed.
  - Near and far transfer: to be collected and analyzed.
  - Accelerated future learning: to be collected and analyzed.

Explanation

Data analyses are preliminary and ongoing, so the results reported above are speculative at this point. However, the trends suggest that justification prompting in physics may provide a learning advantage on later, more complex and difficult problems. One possible reason for this advantage is the match between the instructional materials and the cognitive demands of the task. Two sequential learning events occur during the experiment. The first is learning from problem solving: the student must search the problem space to arrive at a fully specified solution, and in so doing will most likely access and apply principles from physics. Multiple competing features in the environment cue the retrieval of these principles; the valid features that signal the correct application of a principle are called its "applicability conditions." The second is learning from example studying, during which the student is released from the task of accessing the principles and is instead asked to generate their conditions of applicability. Generating a justification for a problem-solving action is well aligned with this task.

Prompts for meta-cognitive reflection and evaluation, by contrast, may not fit the task as well. Students without strong prior knowledge may find their lack of structure unhelpful; weaker students may need a more structured learning environment.

The results for the attention-focusing prompts are ambiguous because two of these prompts may have inspired students to self-explain. A close analysis of the audio data collected during the example-studying phase may allow for a direct test of this hypothesis.

Further Information

Annotated bibliography

References

- Chi, M. T. H. (2000). Self-explaining expository texts: The dual processes of generating inferences and repairing mental models. In R. Glaser (Ed.), Advances in instructional psychology (pp. 161-238). Mahwah, NJ: Lawrence Erlbaum Associates, Inc. http://www.pitt.edu/~chi/papers/advances.pdf

- Chi, M. T. H., DeLeeuw, N., Chiu, M.-H., & LaVancher, C. (1994). Eliciting self-explanations improves understanding. Cognitive Science, 18, 439-477. http://www.pitt.edu/~chi/papers/ChideLeeuwChiuLaVancher.pdf

- Hausmann, R. G. M., & VanLehn, K. (2007). Explaining self-explaining: A contrast between content and generation. In R. Luckin, K. R. Koedinger & J. Greer (Eds.), Artificial intelligence in education: Building technology rich learning contexts that work (Vol. 158, pp. 417-424). Amsterdam: IOS Press. http://learnlab.org/uploads/mypslc/publications/hausmannvanlehn2007_final.pdf

- McNamara, D. S. (2004). SERT: Self-explanation reading training. Discourse Processes, 38(1), 1-30. http://www.leaonline.com/doi/abs/10.1207/s15326950dp3801_1?journalCode=dp

- Renkl, A. (1997). Learning from worked-out examples: A study on individual differences. Cognitive Science, 21(1), 1-29. http://www.leaonline.com/doi/pdf/10.1207/s15516709cog2101_1