Latest revision as of 20:16, 3 November 2008

Post-practice reflection in a first-year physics course: Does mixed-initiative interaction support robust learning better than tutor-led interaction or canned text?

Sandra Katz

Summary Table

| PI | Sandra Katz |

| Other Contributors (Post-Docs) | John Connelly |

| Study Start Date | 1/1/05 |

| Study End Date | 12/31/05 |

| LearnLab Site | USNA |

| LearnLab Course | General Physics I |

| Number of Students | N = 123 |

| Total Participant Hours | approx. 500 hrs |

| DataShop | No; Andes data still incompatible |

Abstract

We conducted an in vivo experiment within the PSLC Physics LearnLab to address two questions about reflection on recent problem-solving activity within Andes: (1) Does post-practice reflection support robust learning of physics, that is, students’ ability to transfer what they learned during instruction to novel situations? and (2) What is the preferred delivery mode for post-practice reflection in intelligent tutoring systems? We compared pre-test to post-test learning gains of students who were randomly assigned to one of four conditions that represent points on a scale of increasingly generative student activity: (1) a control group, who solved a set of problems in Andes without any reflective activity; (2) a self-explanation group, who solved Andes problems, responded to several “reflection questions” (Lee & Hutchison, 1998) after each problem, and received a canned, expert-generated explanation that they were prompted to compare with their response; (3) a “tutor-led interaction” group, who solved the same Andes problems and answered the same reflection questions (RQs) as the self-explanation group, but with the questions embedded in Knowledge Construction Dialogues (KCDs) that guided students in generating a correct response; and (4) a “scaffolded mixed-initiative KCD” group, who solved the same problems and responded to the same reflection questions as the other reflection groups, embedded in KCDs similar to those presented to the “tutor-led interaction” group, with the added support of follow-up question menus that allowed students to take the initiative.

Unfortunately, participation in the three experimental conditions was too low to compare the effectiveness of these treatments. However, a yoked pairs analysis revealed that the more reflection questions students completed, of any type, the better they performed, with respect to both post-test scores and pre-test to post-test gain scores. Similarly, regression analysis revealed that the number of dialogues a student completed had a significant positive effect on post-test score, independent of the number of problems he or she completed. These findings provide empirical support for post-practice reflection in an ITS that is a central component of a course.

Glossary

Research questions

Do reflection questions after physics problem solving support robust learning? Is more interactive reflection, between the automated tutor and student, more effective in supporting robust learning than less interactive reflection?

Background and Significance

Despite the well-established role that post-practice reflective dialogue plays in apprentice-style learning (Collins, Brown, & Newman, 1989), few studies have been conducted to test its effectiveness. Hence, there is little empirical support for incorporating post-practice reflection in courses led by a human teacher or within intelligent tutoring systems (ITSs). Nor is there much guidance on how to implement reflection effectively, especially within the constraints of natural-language understanding and generation capabilities.

Research by Lee and Hutchison (1998) focused on the effectiveness of reflection questions posed after students studied worked examples on balancing chemistry equations in a computer-based learning environment. This study found that reflection questions enhanced students’ problem-solving ability. However, the role of reflection in promoting conceptual understanding was not addressed; nor did the study investigate whether reflection questions after problem-solving exercises (as opposed to example studying) support learning.

Two laboratory studies conducted by Katz and her colleagues addressed these questions. In a longitudinal analysis of student performance on avionics tasks in Sherlock 2 (e.g., Katz et al., 1998), Katz, O’Donnell and Kay (2000) found that discussions that took place between an avionics expert and two students were more effective in resolving misconceptions when they were distributed across problem solving and debrief than were discussions that took place during problem solving alone. However, due to constraints inherent in the research setting, there was no control group in this study—that is, avionics trainees who did not experience debrief led by a domain expert—and no instrument to measure performance gains. A follow-up study by Katz, Allbritton, & Connelly (2003) addressed these limitations in a different domain, first-year college physics. Forty-six students taking college physics solved problems in Andes in one of three conditions: with no reflection questions after problem solving (control group), with reflection questions discussed with human tutors, or with the same reflection questions followed by canned feedback (without a human tutor). A comparison of pre-test and post-test scores was conducted to measure learning gains. The main result was that students learned more with reflection questions than without, but the two conditions with reflection questions (canned feedback and human tutoring) did not differ significantly.

The current study is significant because it validated the results of these laboratory studies in an actual classroom setting. Specifically, it showed that post-practice reflection supports learning, as measured by pre-test to post-test learning gain scores. Due to low participation, however, this experiment did not shed light on the question of which modality of reflection is most effective.

Dependent Variables

- Gains in qualitative and quantitative knowledge. Post-test scores, and pre-test to post-test gain scores, on near transfer (normal post-test) and far transfer (robust learning) items.

- Short-term retention. Performance on course exam that covered target topic (work and energy), 1-2 weeks after the intervention.

- Long-term retention. Performance on final exam, taken several weeks after the intervention.
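As a minimal illustration of the first dependent variable, the sketch below computes a raw gain score (post-test minus pre-test) separately for near-transfer and far-transfer items. The field names and the scores are hypothetical, not study data, and the source does not say whether raw or normalized gains were used, so raw differences are assumed here.

```python
from dataclasses import dataclass


@dataclass
class TestScores:
    """Hypothetical per-student scores, as fractions correct (0.0 to 1.0)."""
    pre_near: float
    post_near: float
    pre_far: float
    post_far: float


def gain_scores(s: TestScores) -> dict:
    """Return raw pre-to-post gains for near- and far-transfer items."""
    return {
        "near_gain": round(s.post_near - s.pre_near, 3),
        "far_gain": round(s.post_far - s.pre_far, 3),
    }


# Illustrative values only; not taken from the study.
example = TestScores(pre_near=0.40, post_near=0.65, pre_far=0.25, post_far=0.40)
print(gain_scores(example))
```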

Independent Variables

Students were block-randomly assigned to one of four dialogue conditions, with reflective KCDs presented after selected Andes problems:

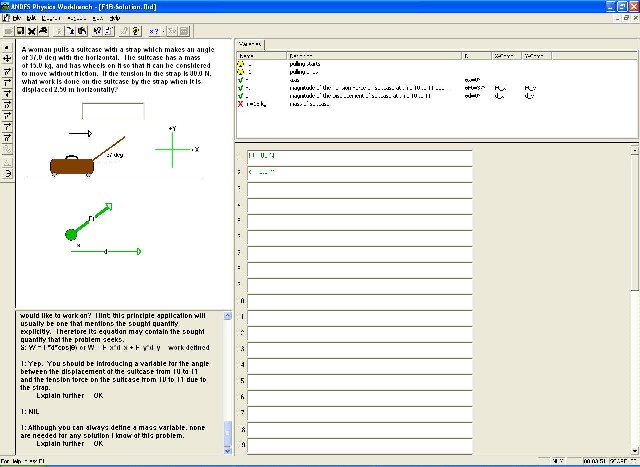

- control (Ctrl), with no reflection questions and KCDs; just the standard Andes problems:

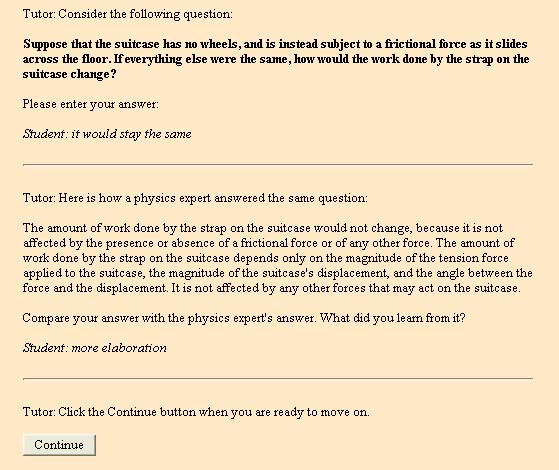

- canned text response (CTR), with free-response KCDs followed by pre-scripted expert responses; students' responses were not given any positive or negative feedback:

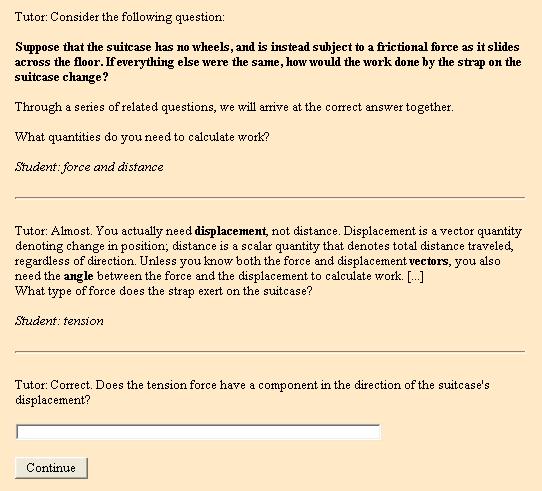

- standard KCDs (KCD), with shorter-answer questions and tutor responses that indicated what parts of the student's response were incomplete or incorrect and tried to elicit the correct aspects:

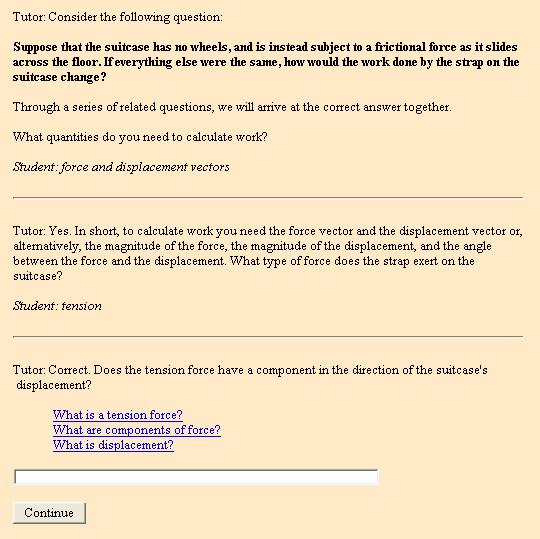

- limited mixed-initiative KCDs (MIX), same as the standard KCDs but with on-demand hypertext question-and-answer links after selected tutor questions:

However, there were no significant differences between categorical condition assignments, even after reclassifying as Ctrl subjects those in the three treatment conditions who saw no KCDs and after collapsing the KCD and MIX conditions (due to minimal usage of mixed-initiative links, for the most part the two conditions were equivalent). We therefore treated KCD and problem completion as continuous variables.
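The reclassification described above can be sketched as a small mapping from assigned condition to "effective" condition. This is only an illustration under stated assumptions: the function name, the `kcds_seen` counter, and the sample records are hypothetical, and the actual bookkeeping used in the study is not described in the source.

```python
VALID_CONDITIONS = {"Ctrl", "CTR", "KCD", "MIX"}


def effective_condition(assigned: str, kcds_seen: int) -> str:
    """Map an assigned condition to the collapsed effective condition.

    assigned:  one of "Ctrl", "CTR", "KCD", "MIX".
    kcds_seen: number of reflective dialogues the student actually saw
               (a hypothetical field used for illustration).
    """
    if assigned not in VALID_CONDITIONS:
        raise ValueError(f"unknown condition: {assigned}")
    # Treatment students who saw no KCDs are reclassified as controls.
    if assigned == "Ctrl" or kcds_seen == 0:
        return "Ctrl"
    # MIX is collapsed into KCD because the mixed-initiative links saw minimal use.
    if assigned == "MIX":
        return "KCD"
    return assigned


# Illustrative records only: (assigned condition, KCDs seen).
students = [("Ctrl", 0), ("CTR", 3), ("KCD", 0), ("MIX", 5)]
print([effective_condition(c, n) for c, n in students])
```

Applied to the sample records, this yields the three effective conditions (Ctrl, CTR, KCD) referred to in the findings.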

The following variables were entered into a regression analysis, with post-test score as the dependent variable:

- Number of problems completed before the post-test was administered

- Number of reflection questions that the student completed

- CQPR—grade point average

- College major grouping

- Pre-test score
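To make the shape of this model concrete, here is a minimal sketch of an ordinary least squares fit with the five predictors listed above. Everything in it is an assumption for illustration: the data are synthetic (generated with a known positive effect of reflection questions), the major grouping is dummy-coded into three hypothetical groups, and the solver is a plain normal-equations implementation rather than whatever statistical package the authors used.

```python
import random


def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    X is a list of rows (each including a leading 1.0 for the intercept);
    y is a list of outcomes. Solved by Gauss-Jordan elimination with
    partial pivoting.
    """
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


rng = random.Random(0)  # fixed seed so the synthetic data are reproducible
rows, post = [], []
for _ in range(120):  # synthetic sample size, not the study's N
    problems = rng.randint(0, 20)   # problems completed before the post-test
    rqs = rng.randint(0, 12)        # reflection questions completed
    cqpr = rng.uniform(2.0, 4.0)    # grade point average
    major = rng.randrange(3)        # hypothetical major grouping code 0..2
    pre = rng.uniform(0.0, 1.0)     # pre-test score (fraction correct)
    # Dummy-code major grouping; group 0 is the reference level.
    rows.append([1.0, problems, rqs, cqpr,
                 float(major == 1), float(major == 2), pre])
    # Synthetic outcome with a built-in effect of 0.02 per reflection question.
    post.append(0.10 + 0.005 * problems + 0.02 * rqs + 0.05 * cqpr
                + 0.30 * pre + rng.gauss(0.0, 0.02))

names = ["intercept", "problems", "rqs", "cqpr", "major_1", "major_2", "pre"]
fitted = dict(zip(names, ols(rows, post)))
print({name: round(value, 3) for name, value in fitted.items()})
```

Because number of problems completed is in the model alongside number of reflection questions, the `rqs` coefficient estimates the effect of reflection questions with problem completion held constant, which is the sense in which the study reports an effect "independent of the number of problems" completed.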

Hypotheses

- Because responding to reflection questions is a form of “active learning,” students in any of the three experimental conditions (self-explanation of canned text, standard tutor-led KCDs, mixed-initiative KCDs) should outperform students in the control (no-reflection) condition. This hypothesis was supported.

- The more interactive the reflection modality the better, so mixed-initiative > standard KCD > self-explanation of canned text. We were unable to test this hypothesis, due to low participation.

Findings

- Gain scores summary:

  - There were no significant differences in mean gain score (or in pre-test and post-test scores) by condition (4 conditions; 3 treatment, 1 control) or by collapsed effective condition (Ctrl, CTR, KCD).

  - Yoked pairs analysis, comparing students from the same major groups with identical pre-test scores (and minimal CQPR disparity) who completed no reflection questions with students who completed five or more questions (n = 19 pairs), showed that “treated” subjects tended to out-gain “untreated” subjects.

- Regression analysis summary:

  - The number of reflection questions completed had a significant positive effect on post-test scores.

- Exam score summary:

  - For the final exam, there was no significant impact of the number of reflection questions completed.

  - For one course section’s hourly exam on work and energy, there was a significant, positive impact of the number of reflection questions completed, but only when CQPR was dropped from the model. For the other section’s hourly exam, “treated” subjects significantly outperformed “untreated” subjects, despite being outnumbered 3 to 1.
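The yoked pairs comparison in the gain scores summary can be sketched as follows. The matching criteria (same major group, identical pre-test score, smallest CQPR difference, zero versus five or more reflection questions) come from the description above; the greedy matching order, the `Student` record, and the sample data are assumptions made for illustration, since the source does not describe the pairing procedure in detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Student:
    sid: str
    major_group: str
    pre: int      # pre-test score
    post: int     # post-test score
    cqpr: float   # grade point average
    rqs: int      # reflection questions completed


def yoke(students, min_treated_rqs=5):
    """Greedily pair each untreated student (0 RQs) with an unused treated
    student (>= min_treated_rqs) from the same major group with an identical
    pre-test score, choosing the candidate with the closest CQPR."""
    treated = [s for s in students if s.rqs >= min_treated_rqs]
    untreated = [s for s in students if s.rqs == 0]
    used, pairs = set(), []
    for u in untreated:
        candidates = [t for t in treated
                      if t.sid not in used
                      and t.major_group == u.major_group
                      and t.pre == u.pre]
        if candidates:
            best = min(candidates, key=lambda t: abs(t.cqpr - u.cqpr))
            used.add(best.sid)
            pairs.append((best, u))
    return pairs


def mean_gain_difference(pairs):
    """Mean of (treated gain - untreated gain) across yoked pairs."""
    diffs = [(t.post - t.pre) - (u.post - u.pre) for t, u in pairs]
    return sum(diffs) / len(diffs)


# Illustrative records only; not study data.
students = [
    Student("a", "eng", 10, 18, 3.2, 6),
    Student("b", "eng", 10, 14, 3.1, 0),
    Student("c", "sci", 12, 17, 2.8, 7),
    Student("d", "sci", 12, 15, 2.9, 0),
    Student("e", "sci", 12, 16, 3.9, 5),
    Student("f", "hum", 8, 11, 3.0, 0),   # no treated match available
    Student("g", "eng", 10, 15, 3.0, 2),  # 1 to 4 RQs: excluded from both groups
]
pairs = yoke(students)
print([(t.sid, u.sid) for t, u in pairs], mean_gain_difference(pairs))
```

A positive mean difference corresponds to “treated” subjects out-gaining their “untreated” partners; a real analysis would follow this with a paired significance test.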

Explanation

This study is part of the Interactive Communication cluster, and its hypothesis is a specialization of the IC cluster’s central hypothesis. The IC cluster’s central hypothesis is that robust learning occurs when two conditions are met:

- The learning event space should have paths that are mostly learning-by-doing along with alternative paths where a second agent does most of the work. Since this experiment did not deal with collaboration between agents, it did not test this condition. It did, however, show that the more that students engage in one form of learning-by-doing, namely post-practice reflection, the more they learn.

- The student takes the learning-by-doing path unless it becomes too difficult. We are unable to determine why students chose not to take the learning-by-doing path. Perhaps doing the reflection questions was too difficult for some students, but we suspect that this was not the case. A more likely explanation is that students did not have the time to complete these questions, and there were no negative consequences for avoiding them. In the follow-up study that we are currently running, students are required to complete the reflection questions in order to get credit for completing a problem.

Further Information

Annotated bibliography

- Presentation to site visitors, 2005

- Poster presentation at the 2006 American Association for Physics Teachers (AAPT) Conference

- Full-paper accepted at AIED 2007

References

- Collins, A., Brown, J. S., & Newman, S. E. (1989). Cognitive apprenticeship: Teaching the craft of reading, writing and mathematics. In L. B. Resnick (Ed.), Knowing, learning and instruction: Essays in honor of Robert Glaser (pp. 453-494). Hillsdale, NJ: Erlbaum.

- Lee, A. Y., & Hutchison, L. (1998). Improving learning from examples through reflection. Journal of Experimental Psychology: Applied, 4 (3), 187-210.

- Katz, S., Allbritton, D., & Connelly, J. (2003). Going beyond the problem given: How human tutors use post-solution discussions to support transfer. International Journal of Artificial Intelligence and Education, 13 (1), 79-116.

- Katz, S., Lesgold, A., Hughes, E., Peters, D., Eggan, G., Gordin, M., & Greenberg, L. (1998). Sherlock II: An intelligent tutoring system built upon the LRDC tutor framework. In C. P. Bloom & R. B. Loftin (Eds.), Facilitating the development and use of interactive learning environments (pp. 227-258). Mahwah, NJ: Erlbaum.

- Katz, S., O’Donnell, G., & Kay, H. (2000). An approach to analyzing the role and structure of reflective dialogue. International Journal of Artificial Intelligence and Education, 11, 320-343.

Connections

This project shares features with the following research projects:

Use of Questions during learning

- Reflective Dialogues (Katz, Connelly & Treacy, 2006)

- Extending Reflective Dialogue Support (Katz & Connelly, 2007)

- Deep-level questions during example studying (Craig & Chi)

- FrenchCulture (Amy Ogan, Christopher Jones, Vincent Aleven)

- Collaborative Extensions to the Cognitive Tutor Algebra: Scripted Collaborative Problem Solving (Rummel, Diziol, McLaren, & Spada)

Self explanations during learning