Difference between revisions of "The Help Tutor Roll Aleven McLaren"




=== Findings === | === Findings === | ||

| Line 155: | Line 161: | ||

# As a result, students are expected to become better future learners. | # As a result, students are expected to become better future learners. | ||

| − | [[Image: | + | [[Image:Roll_Pyramid.jpg]] |

| Line 163: | Line 169: | ||

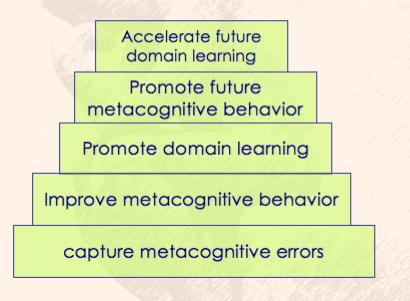

This data suggests that the help-seeking model captures appropriate actions, and that the goal was achieved - the Help Tutor captures help-seeking errors. | This data suggests that the help-seeking model captures appropriate actions, and that the goal was achieved - the Help Tutor captures help-seeking errors. | ||

| − | [[Image:help- | + | [[Image:help-seeking_and_learning.jpg]] |







Latest revision as of 00:11, 16 December 2010

Contents

- 1 Towards Tutoring Metacognition - The Case of Help Seeking

- 1.1 Meta-data

- 1.2 Abstract

- 1.3 Background and Significance

- 1.4 Glossary

- 1.5 Research questions

- 1.6 Independent Variables

- 1.7 Dependent variables

- 1.8 Hypothesis

- 1.9 Findings

- 1.9.1 Evaluation of goal 1: Capture metacognitive errors

- 1.9.2 Evaluation of goal 2: Improve metacognitive behavior

- 1.9.3 Evaluation of goal 3: Improve domain learning

- 1.9.4 Evaluation of goal 4: Improve future metacognitive behavior

- 1.9.5 Evaluation of goal 5: Improve future domain learning

- 1.9.6 Summary of results

- 1.10 Explanation

- 1.11 Connections

- 1.12 Further Information

- 1.13 Annotated bibliography

Towards Tutoring Metacognition - The Case of Help Seeking

Ido Roll, Vincent Aleven, Bruce M. McLaren, Kenneth Koedinger

Meta-data

PIs: Vincent Aleven, Ido Roll, Bruce M. McLaren, Ken Koedinger

Other Contributors: EJ Ryu (programmer)

| Study # | Start Date | End Date | LearnLab Site | # of Students | Total Participant Hours | DataShop? |
|---|---|---|---|---|---|---|
| 1 | 2004 | 2004 | Analysis of existing data | 40 | 280 | No, old data |
| 2 | 2005 | 2005 | Analysis of existing data | 70 | 105 | No, old data |
| 3 | 5/2005 | 5/2005 | Hampton & Wilkinsburg (Geometry) | 60 | 270 | No, incompatible format |
| 4 | 2/2006 | 4/2006 | CWCTC (Geometry) | 84 | 1,008 | Yes |

Abstract

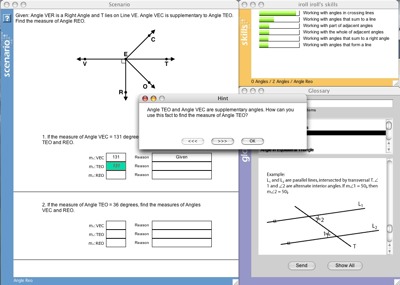

While working with a tutoring system, students are expected to regulate their own learning process. However, they often demonstrate inadequate metacognitive processes in doing so. For example, students often ask for help too frequently or not frequently enough. In this project we built an Intelligent Tutoring System to teach metacognition and, in particular, to improve students' help-seeking behavior. Our Help Seeking Support Environment includes three components:

- Direct help-seeking instruction, given by the teacher

- A Self-Assessment Tutor, to help students evaluate their own need for help

- The Help Tutor: a domain-independent agent that can be added as an adjunct to a Cognitive Tutor. Rather than making help-seeking decisions for the students, the Help Tutor teaches better help-seeking skills by tracing students' actions on a (meta)cognitive help-seeking model and giving students appropriate feedback.
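To make "tracing students' actions on a help-seeking model" more concrete, here is a deliberately simplified Python sketch. It is not the Help Tutor's actual model: the two mastery thresholds, the action labels `attempt` and `hint`, and the decision rules are assumptions chosen for illustration. The category names "help abuse" and "help avoidance" follow common usage in the help-seeking literature. The sketch labels one action by comparing what the student did with the tutor's current estimate that the student knows the relevant skill.

```python
from dataclasses import dataclass

# Hypothetical thresholds on the tutor's mastery estimate (0..1).
LOW_MASTERY = 0.4   # below this, the skill is treated as unfamiliar
HIGH_MASTERY = 0.9  # at or above this, the skill is treated as well known


@dataclass
class Action:
    kind: str       # "attempt" (solution attempt) or "hint" (help request)
    mastery: float  # estimated probability that the student knows the skill


def trace(action: Action) -> str:
    """Label one action as 'ok', 'help abuse', or 'help avoidance'."""
    if action.kind == "hint" and action.mastery >= HIGH_MASTERY:
        # Asking for help on a skill the student very likely already knows.
        return "help abuse"
    if action.kind == "attempt" and action.mastery < LOW_MASTERY:
        # Guessing on an unfamiliar skill instead of asking for help.
        return "help avoidance"
    return "ok"


if __name__ == "__main__":
    for a in (Action("attempt", 0.2), Action("hint", 0.95), Action("hint", 0.3)):
        print(a, "->", trace(a))
```

A realistic model would also weigh timing, hint level, and the history of recent actions; this sketch ignores all of those and looks at a single action in isolation.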

In a series of in vivo experiments, the Help Tutor accurately detected help-seeking errors that were associated with poorer learning and with poorer declarative and procedural help-seeking knowledge components. The main findings were that students made fewer help-seeking errors while working with the Help Tutor and acquired better declarative help-seeking knowledge components. However, we did not find evidence that this led to improved learning at the domain level or to better help-seeking behavior in a paper-and-pencil environment. We pose a number of hypotheses to explain these results. We question the current focus of metacognitive tutoring and suggest ways to reexamine the role of help facilities and of metacognitive tutoring within Intelligent Tutoring Systems.

Background and Significance

Teaching metacognition holds the promise not only of improving learning in the current domain of interest but also, and perhaps mainly, of accelerating future learning and supporting successful regulation of independent learning. One example of metacognitive knowledge is help-seeking knowledge components: the ability to identify the need for help and to elicit appropriate assistance from the relevant help facilities.

However, considerable evidence shows that metacognitive knowledge components need better support. For example, while working with Intelligent Tutoring Systems, students try to "game the system" or do not self-explain enough. Similarly, research shows that students' help-seeking behavior leaves much room for improvement.

Shallow help seeking knowledge components

Research shows that students do not use their help-seeking knowledge components appropriately. For example, Aleven et al. (2006) show that 30% of students' actions were consecutive fast help requests (a common form of help abuse, termed "clicking through hints") made without taking enough time to read the requested hints. Extensive log-file analysis suggests that students apply faulty knowledge components such as the following:

Faulty procedural knowledge components:

- Cognitive aspects: If I don’t know the answer => I should guess

- Motivational aspects: If I get the answer correct => I achieved the goal

- Social aspects: If I ask for help => I am weak

Faulty declarative knowledge components:

- Asking for hints will always reduce my skill level

- Making an error is better than asking for a hint

- Only weak people ask for help
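The "clicking through hints" statistic reported by Aleven et al. (2006) is, in essence, a ratio over a log of timestamped actions. The Python sketch below shows one way such a ratio could be computed. The event format, the action labels, and the 3-second reading threshold are hypothetical; they are not values taken from that study.

```python
from typing import List, Tuple

# One log event: (action label, timestamp in seconds). Labels are hypothetical.
Event = Tuple[str, float]


def click_through_rate(events: List[Event], min_read_seconds: float = 3.0) -> float:
    """Fraction of all actions that are hint requests issued less than
    `min_read_seconds` after the previous hint request, i.e. before that
    hint could plausibly have been read. Events must be in time order."""
    if not events:
        return 0.0
    fast = 0
    for (prev_action, prev_t), (action, t) in zip(events, events[1:]):
        if prev_action == "hint" and action == "hint" and t - prev_t < min_read_seconds:
            fast += 1
    return fast / len(events)


if __name__ == "__main__":
    log = [("hint", 0.0), ("hint", 1.0), ("hint", 1.5),
           ("attempt", 10.0), ("hint", 20.0), ("hint", 30.0)]
    print(click_through_rate(log))
```

Because the source reports the figure as a share of students' actions, the sketch divides by the total number of actions rather than by the number of hint requests; a different denominator would give a different, equally defensible statistic.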

Teaching vs. supporting metacognition

Several systems support students' metacognitive actions in a way that encourages, or even forces, students to learn productively and efficiently. For example, a tutoring system can require the student to self-explain. While this approach is likely to improve domain learning in the supported environment, the effect is not likely to persist beyond the scope of the tutoring system, and therefore is not likely to help students become better future learners.

Toward that end, we chose not to support students' help-seeking actions but to teach them better help-seeking skills. Rather than making the metacognitive decisions for the students (for example, by preventing help-seeking errors or gaming opportunities), this study focuses on helping students refine their help-seeking knowledge components and improve the feature validity of their help-seeking metacognitive skills.

By doing so, we examine whether metacognitive knowledge can be taught using familiar conventional domain-level pedagogies.

Glossary

Research questions

- Can conventional, well-established instructional principles at the domain level be used to tutor metacognitive knowledge components such as help-seeking knowledge components?

- Does the practice of better metacognitive behavior translate, in turn, into better domain learning?

In addition, the project makes the following contributions:

- An improved understanding of the nature of help-seeking knowledge and its acquisition.

- A novel framework for the design of goals, interaction and assessment for metacognitive tutoring.

Independent Variables

Two studies were performed with the Help Tutor. In both studies, the independent variable was the presence of help-seeking support. The control condition used the conventional Geometry Cognitive Tutor.

The treatment condition varied between studies:

- Study one: The Geometry Cognitive Tutor + the Help Tutor

- Study two: The Geometry Cognitive Tutor + the Help Seeking Support Environment (help seeking explicit instruction, self-assessment tutor, and Help Tutor)

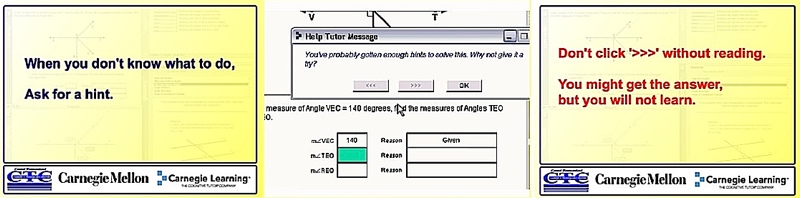

The Help Tutor:

The Help Tutor is a Cognitive Tutor in its own right that identifies recommended types of actions by tracing students’ interaction with the Geometry Cognitive Tutor relative to a metacognitive help-seeking model. When students perform actions that deviate from the recommended ones, the Help Tutor presents a message that stresses the recommended action. Messages from the metacognitive Help Tutor and the domain-level Cognitive Tutor are coordinated so that the student receives only the most helpful message at each point [2].
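The coordination rule between the two tutors is not spelled out here, so the following Python sketch only illustrates the general idea of arbitrating so that at most one message reaches the student. The `Message` type, its numeric `priority` field, and the tie-breaking by list order are hypothetical; how priorities would actually be assigned is left open.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Message:
    source: str    # "domain" (Cognitive Tutor) or "meta" (Help Tutor)
    text: str
    priority: int  # hypothetical score: higher = more helpful at this step


def select_message(candidates: List[Message]) -> Optional[Message]:
    """Return at most one message: the highest-priority candidate.
    max() keeps the first of several equal maxima, so list order breaks ties.
    Returns None when neither tutor has anything to say."""
    return max(candidates, key=lambda m: m.priority, default=None)


if __name__ == "__main__":
    domain = Message("domain", "Check the angle sum.", 2)
    meta = Message("meta", "Take time to read the hint.", 3)
    print(select_message([domain, meta]))
```

Keeping the selection in one small function means the priority policy can change without touching either tutor's feedback logic.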

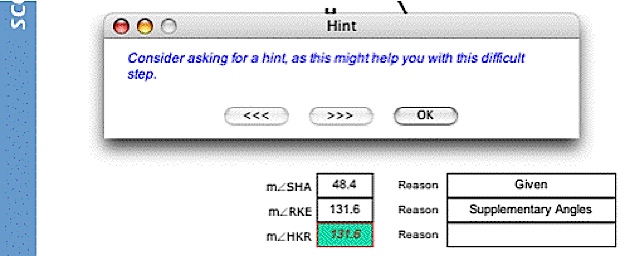

The Self-Assessment Tutor: The ability to correctly self-assess one’s own knowledge level is correlated with strategic use of help (Tobias and Everson, 2002). The Self-Assessment Tutor is designed to tutor students on their self-assessment skills; to help students make appropriate learning decisions based on their self-assessment; and, mainly, to give students a tutoring environment, low on cognitive load, in which they can practice their help-seeking skills. The curriculum used by the Treatment group in study two consists of interleaved Self-Assessment and Cognitive Tutor + Help Tutor sessions, with the Self-Assessment sessions taking about 10% of the students’ time. During each self-assessment session, the student assesses the skills to be practiced in the subsequent Cognitive Tutor section.
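One simple way to quantify how "correctly" a student self-assesses is to compare each self-assessment with the student's subsequent performance on the same skill. The Python sketch below does this with a match/mismatch count. The scoring scheme, the use of first-attempt success as "actual" performance, and the skill names are illustrative assumptions, not the Self-Assessment Tutor's actual design.

```python
from typing import Dict


def self_assessment_accuracy(predicted: Dict[str, bool],
                             actual: Dict[str, bool]) -> dict:
    """Compare 'I can do this skill' judgments with first-attempt success
    on the same skills. Only skills present in both dicts are scored."""
    skills = predicted.keys() & actual.keys()
    over = sum(1 for s in skills if predicted[s] and not actual[s])
    under = sum(1 for s in skills if not predicted[s] and actual[s])
    n = len(skills)
    return {
        "accuracy": (n - over - under) / n if n else 0.0,
        "overconfident": over,    # claimed the skill but then failed
        "underconfident": under,  # doubted the skill but then succeeded
    }


if __name__ == "__main__":
    said = {"angle sum": True, "vertical angles": True, "exterior angle": False}
    did = {"angle sum": True, "vertical angles": False, "exterior angle": True}
    print(self_assessment_accuracy(said, did))
```

Separating over- from under-confidence matters for help seeking: an overconfident student is the one likely to skip help they need.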

Explicit help-seeking instruction: As White and Frederiksen (1998) demonstrated, reflecting in the classroom on the desired metacognitive process helps students internalize it. With that goal in mind, we created a short classroom lesson about help seeking with the following objectives: to give students a better declarative understanding of desired and effective help-seeking behavior; to improve their dispositions and attitudes towards seeking help; and to frame help-seeking knowledge as an important learning goal, alongside Geometry knowledge, for the coming few weeks. The instruction includes a video presentation with examples of productive and faulty help-seeking behavior and the appropriate help-seeking principles.

Dependent variables

The study uses two levels of dependent measures:

- Directly assessing Help Seeking skills

- Assessing domain-level learning, and by that evaluating the contribution of the help-seeking skills.

1. Assessments of help-seeking knowledge:

- Normal post-test:

- Declarative: hypothetical help-seeking dilemmas

- Procedural: Help seeking error rate while working with the tutor

- Transfer: Ability to use optional hints embedded within certain test items in the paper test.

2. Assessments of domain knowledge:

- Normal post-test: Problem solving and explanation items like those in the tutor's instruction.

- Transfer:

- Data insufficiency (or "not enough information") items.

- Conceptual understanding items (study two only)

Hypothesis

The combination of explicit help-seeking instruction, timely feedback on help-seeking errors, and raising awareness of knowledge deficits will

- Improve the feature validity of students' help-seeking skills

and, in turn, will

- Improve domain learning, as students use those skills effectively.

Instructional Principles

The main principle being evaluated here is whether instruction should support metacognition in the context of problem solving by using principles of cognitive tutoring, such as:

- Giving direct instruction

- Giving immediate feedback on errors

- Prompting for self-assessment

Each component of the environment implements one of the following instructional principles:

- The Self-Assessment Tutor implements the Reflection questions principle

- The Help Tutor itself implements the Tutoring feedback principle

- The explicit help-seeking instruction implements the Explicit instruction principle

Findings

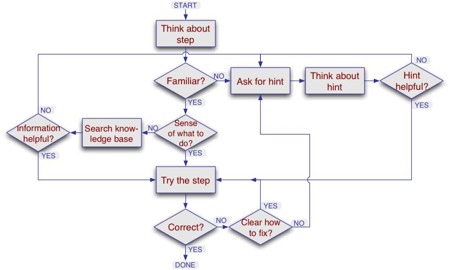

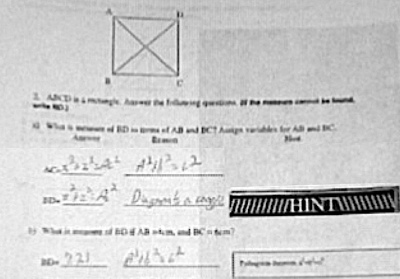

As seen below (adapted from Roll et al. 2006), metacognitive tutoring has the following goals:

- First, the tutoring system should capture metacognitive errors (in our case, help-seeking errors).

- Then, it should lead to improved metacognitive behavior within the tutoring system.

- This, in turn, should lead to an improvement in the domain learning.

- The effect should persist beyond the scope of the tutoring system.

- As a result, students are expected to become better future learners.

Evaluation of goal 1: Capture metacognitive errors

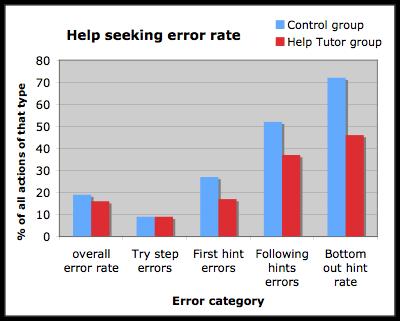

In Study 1, 17% of students' actions were classified as errors. These errors were significantly negatively correlated with learning (r = -0.42): the more help-seeking errors the system captured, the smaller the improvement from pre- to post-test. These data suggest that the help-seeking model flags the right actions as errors, and thus that the first goal was achieved: the Help Tutor captures help-seeking errors.
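The analysis behind this result pairs each student's help-seeking error rate (actions flagged by the model divided by all logged actions) with that student's pre- to post-test gain and computes Pearson's r. The sketch below shows that computation; the per-student numbers in the usage block are made up, and the assumptions (one error rate per student, simple difference gain scores) are ours rather than a description of the study's analysis code.

```python
import math
from typing import Sequence


def error_rate(flagged: int, total_actions: int) -> float:
    """Share of one student's logged actions that the help-seeking model flagged."""
    if total_actions <= 0:
        raise ValueError("total_actions must be positive")
    return flagged / total_actions


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Made-up per-student data: (flagged actions, total actions) and pre-to-post gain.
    counts = [(10, 100), (20, 100), (30, 100), (40, 100)]
    gains = [0.30, 0.25, 0.10, 0.05]
    rates = [error_rate(f, t) for f, t in counts]
    # The pooled rate corresponds to a figure like "17% of actions were errors".
    pooled = sum(f for f, _ in counts) / sum(t for _, t in counts)
    print(f"pooled error rate: {pooled:.0%}")
    print(f"r = {pearson_r(rates, gains):.2f}")
```

A negative r, as in the study, means that students with higher error rates tended to gain less.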

Evaluation of goal 2: Improve metacognitive behavior


These data suggest that, although the system did not improve all aspects of the desired metacognitive behavior, it did improve students' behavior on the common types of errors.

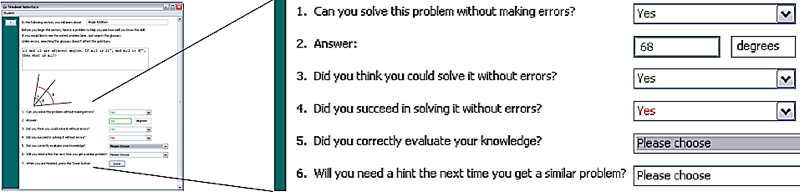

Evaluation of goal 3: Improve domain learning

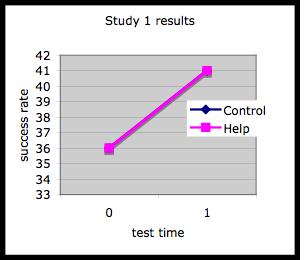

Although students' help-seeking behavior improved while they worked with the Help Tutor (in Study 1) or with the full Help Seeking Support Environment (in Study 2), we observed no difference in domain learning between the two conditions in either study.

Study 1 results:

Study 2 results:

- Note: because the tests at times 1 and 2 evaluated different instructional units (Angles vs. Quadrilaterals), lower scores at time 2 do not indicate a decrease in knowledge.

Evaluation of goal 4: Improve future metacognitive behavior

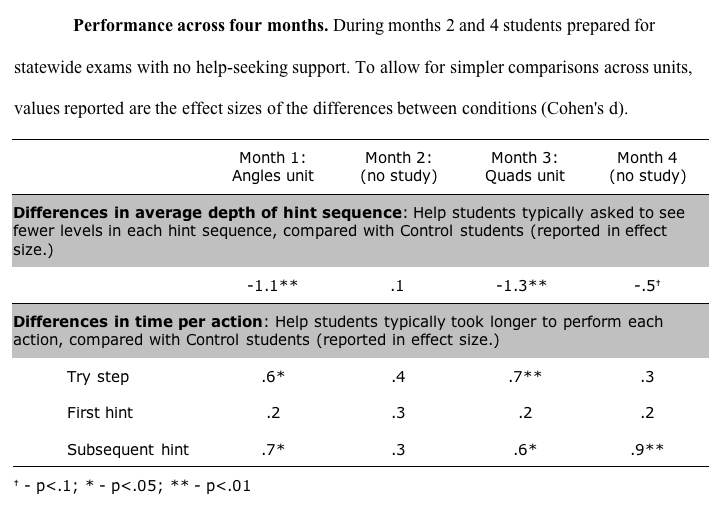

As mentioned earlier, the two units of Study 2 were separated by one month. We collected data on students' behavior between the two units and after the study. During these months, students reviewed previous material in preparation for statewide exams. As seen in the table below, the main effects of the help-seeking environment persisted even once students moved on to working with the native Cognitive Tutor! Overall, students who received help-seeking support during the study took more time for their actions after the study, especially for reading hints: in the month following the study, their hint reading time before asking for an additional hint was longer by almost one standard deviation (12 vs. 8 seconds, t(44)=3.0, p<.01). Also, students in the Help condition drilled down through the hints less often, though this effect was only marginally significant (average hint level: 2.2 vs. 2.6, t(51)=1.9, p=.06). These effects were more consistently significant once students had completed both units, suggesting that stretching the study across two units indeed helped students better acquire the domain-independent help-seeking skills.
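The two behavioral measures above can be computed from an ordinary tutor log. The sketch below assumes a simplified log format of our own invention (the `Action` record is hypothetical), defines hint reading time as the interval between two consecutive hint requests on the same step, and defines average hint level as the mean of the deepest hint reached on each step where help was requested. The study's exact operational definitions may differ.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Action:
    step_id: str         # problem step the action belongs to
    time_s: float        # timestamp in seconds
    kind: str            # "hint" or "attempt"
    hint_level: int = 0  # 1 = first hint; higher levels move toward the bottom-out hint


def hint_reading_times(actions: List[Action]) -> List[float]:
    """Seconds spent on each hint before the next hint request on the same step.

    Assumes `actions` holds one student's actions sorted by time.
    """
    times = []
    for prev, cur in zip(actions, actions[1:]):
        if prev.kind == "hint" and cur.kind == "hint" and prev.step_id == cur.step_id:
            times.append(cur.time_s - prev.time_s)
    return times


def average_hint_level(actions: List[Action]) -> Optional[float]:
    """Mean of the deepest hint level reached on each step where help was requested."""
    deepest = {}
    for a in actions:
        if a.kind == "hint":
            deepest[a.step_id] = max(deepest.get(a.step_id, 0), a.hint_level)
    return mean(list(deepest.values())) if deepest else None
```

Per-student values of these measures can then be compared between conditions, for example with a two-sample t-test of the kind reported above.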

In addition, students' help-seeking behavior was evaluated in a transfer environment: the paper-and-pencil tests.

Hypothetical help-seeking dilemmas, such as the one below, were used to evaluate declarative help-seeking knowledge.

1. You tried to answer a question that you know, but for some reason the tutor says that your answer is wrong. What should you do?

   - [ ] First I would review my calculations. Perhaps I can find the mistake myself?

   - [ ] The Tutor must have made a mistake. I will retype the same answer again.

   - [ ] I would ask for a hint, to understand my mistake.

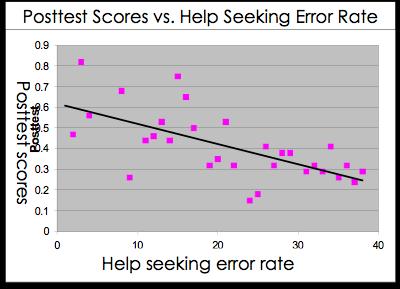

Procedural help-seeking skills were evaluated using hints embedded in the tests (see the figure in the Dependent variables section above).

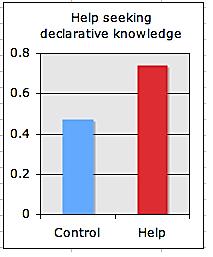

In Study 1 (which included only the Help Tutor component), students in the Treatment condition demonstrated neither better declarative nor better procedural help-seeking knowledge than students in the Control condition.

In Study 2 (which included the explicit help-seeking instruction and the Self-Assessment Tutor in addition to the Help Tutor), students in the Treatment condition demonstrated better declarative help-seeking knowledge than Control group students, but not better procedural knowledge.

Evaluation of goal 5: Improve future domain learning

Due to technical difficulties, this goal was not evaluated in either study.

Summary of results

Overall, the following pattern of results emerges from the studies:

- The Help Seeking Support Environment captured the appropriate actions (help-seeking errors) and intervened on them during the learning process.

- Students improved their help-seeking behavior while working with the system.

- Students demonstrated improved help-seeking behavior even once support was removed, after working with the Help Tutor over two months and across two different topics.

- Furthermore, students acquired better help-seeking declarative knowledge.

- However, students' domain learning did not improve.

Explanation

1. Assessing help-seeking behavior

These studies put forward several direct measures of help seeking. Most notably, we found that the online help-seeking model is able to capture faulty help-seeking behavior in a manner that is consistent with domain learning. The operational nature of the model (i.e., the fact that it can be run on a computer) puts it in a unique position compared to other models of help-seeking behavior. Furthermore, formative assessment using the model is done transparently, without interrupting the learning process. These qualities enable us to do a micro-genetic (moment-by-moment) analysis of help-seeking behavior over extended periods of time, which is rare in the literature on help seeking and on self-regulated learning. In addition, the detailed evaluation of students’ actions allows us to adapt the learning process to the learner in novel ways, that is, to adapt not only to students’ domain knowledge and behavior, but also to their metacognitive knowledge and behavior. Students’ online behavior as assessed by the help-seeking model also correlated with paper-and-pencil measures of help-seeking knowledge and behavior. This result provides some support for the claim that the measures capture a metacognitive behavior that is domain- and environment-independent. A combination of these instruments with domain learning measures can be used to investigate the different factors that affect learning behaviors and outcomes.
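What makes the model "operational" is that each student action can be classified on the fly from information the tutor already has. The sketch below is a drastically simplified, hypothetical stand-in for the actual help-seeking model (Aleven et al., 2006): the rule labels and all thresholds are invented for illustration, and we assume the tutor exposes a per-skill mastery estimate (`p_known`) and the time since the student's previous action.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds, chosen only for illustration.
MASTERY_HIGH = 0.8     # estimated probability that the step's skill is known
MASTERY_LOW = 0.4
MIN_HINT_READ_S = 5.0  # less than this before the next hint: probably not reading
MIN_THINK_S = 3.0      # less than this before an attempt: probably guessing


@dataclass
class Step:
    kind: str                 # "hint" or "attempt"
    p_known: float            # tutor's current mastery estimate for the step's skill
    secs_since_last: float    # time since the student's previous action
    prev_kind: Optional[str]  # kind of the previous action on this step, if any


def classify(step: Step) -> Optional[str]:
    """Return a hypothetical help-seeking error label, or None if the action looks fine."""
    if step.kind == "hint":
        if step.prev_kind == "hint" and step.secs_since_last < MIN_HINT_READ_S:
            return "clicking through hints"
        if step.p_known >= MASTERY_HIGH:
            return "unnecessary hint"
    elif step.kind == "attempt":
        if step.p_known <= MASTERY_LOW and step.prev_kind != "hint":
            return "help avoidance"
        if step.secs_since_last < MIN_THINK_S:
            return "hasty attempt"
    return None
```

Because each rule looks only at the current action and the tutor's running estimates, classification can run silently in the background after every action, which is what allows moment-by-moment analysis without interrupting the student.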

2. Improving help-seeking behavior and knowledge

Students’ hint usage improved significantly while working with the Help Tutor across several measures, most notably help-seeking error rate, frequency of asking for bottom-out hints, and hint reading time. Study 2 also shows that these improvements persist even beyond the scope of the Help Tutor. They consistently reached significance only after an extended period of working with the Help Tutor (i.e., after the second part of the study). We hypothesize that because the Help Tutor feedback appeared in two different areas of geometry learning, students could more easily acquire the domain-independent help-seeking skills and thus transfer them better to the other subject-matter areas addressed between and after the two in which the Help Tutor was used. The addition of the help-seeking instruction and self-assessment episodes in Study 2 led to improvements in students’ conceptual help-seeking knowledge and time to read hints, and to an apparent improvement in hint-to-error ratio. The fact that students were more likely to ask for hints than to commit additional errors provides behavioral evidence that the self-assessment instruction, delivered both by the Self-Assessment Tutor and by certain messages in the Help Tutor, appears to make students more aware of their need for help. This reinforces the causal link between self-assessment and strategic help seeking (Tobias & Everson, 2002). Although students’ hint usage improved, no major improvements in the deliberateness of students’ solution attempts were found (besides that indicated by the differences in hint-to-error ratio). It may be that the Help Tutor did not support this aspect of learning well enough.
An alternative explanation is that as long as students were attempting to solve problems (successfully or not), they were too occupied by those attempts to devote attention (and cognitive resources) to the Help Tutor until they reached an impasse that required them to ask for help. This may be an outcome of our design decision to give feedback in the context of domain learning.
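Two of the measures named above, hint-to-error ratio and frequency of bottom-out hints, reduce to simple counts over a student's actions. The sketch below assumes a hypothetical event format, `(kind, is_bottom_out)`, and assumes that bottom-out frequency is expressed as a fraction of all hint requests; the study may have normalized it differently (for example, per step).

```python
import math
from typing import List, Tuple

# Hypothetical event format: (kind, is_bottom_out), where kind is "hint", "error",
# or "correct"; is_bottom_out only matters for hints and marks the last hint
# in a hint sequence.
Event = Tuple[str, bool]


def hint_to_error_ratio(events: List[Event]) -> float:
    """Hint requests per incorrect attempt; inf if the student made no errors."""
    hints = sum(1 for kind, _ in events if kind == "hint")
    errors = sum(1 for kind, _ in events if kind == "error")
    return hints / errors if errors else math.inf


def bottom_out_rate(events: List[Event]) -> float:
    """Fraction of hint requests that reached the bottom-out hint."""
    flags = [bottom for kind, bottom in events if kind == "hint"]
    return sum(flags) / len(flags) if flags else 0.0
```

Under this definition, a higher hint-to-error ratio means relatively more help requests than errors, which is the direction of the Study 2 change described above.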

3. Using metacognitive improvement to improve domain learning

The two studies did not find an effect of the improved help-seeking behavior on domain learning. One possible explanation is that the help-seeking model focuses on the wrong actions, although the negative correlation with learning across studies suggests otherwise. Alternatively, perhaps the improvement in help-seeking behavior was not large enough to measurably affect domain-level learning. However, in that case we would expect to see at least a trend in the test scores, rather than the virtual tie we observed across the three units. A third explanation (see paper #8 by the authors) is that poor help-seeking behavior may be more a consequence of poor motivation toward domain learning than a cause of poor learning. Students who do not care to learn geometry may exhibit poor help-seeking behaviors and learn less, thus yielding a correlation. In this case, however, poor help-seeking behavior may not reflect a lack of help-seeking skill per se, but a lack of motivation to engage deeply in help seeking and associated deep learning strategies (like attempting to encode instructional material in terms of deeper domain-relevant features rather than more superficial perceptual features). Indeed, Help condition students changed their help-seeking behaviors and appeared to do so in a lasting way, but perhaps associated changes in other deep learning strategies are needed before clear effects on domain learning can be observed. To elaborate, consider that the help-seeking model focuses on how deliberately students seek help, but does not evaluate the content of the help or how it is used. It makes sure that students take enough time to read a hint, but does not assess how the hint's information is processed. One of the implicit assumptions of this line of research is that students can learn from the explanations given in contextual hints. However, this process may be more challenging than assumed.
For example, Renkl showed that instructional explanations can at times hinder students’ tendency to self-explain, and thus to learn (Renkl, 2002). In other words, perhaps students do not learn enough from help not only because of how they obtain it, but also because of how they process it. A re-examination of the existing help-seeking literature supports this hypothesis. Very few experiments to date have actively manipulated help seeking in ITSs. Those that manipulated the content of the help or students’ reaction to it often found that interventions that increase reflection yield better learning: Dutke and Reimer (2000) found that principle-based hints are better than operative ones; Ringenberg and VanLehn (2006) found that analogous solved examples may be better than conventional hints; Schworm and Renkl (2002) found that deep reflection questions led to more learning than conventional hints; and Baker et al. (2006) showed that auxiliary exercises for students who misuse hints help them learn better. At the same time, Baker (2006) found that reducing help abuse (and other gaming behaviors) may not by itself contribute to learning gains, a result similar to those presented in this paper.

Connections

- The Help Tutor attempts to extend traditional tutoring beyond its common domains. In this respect, it is similar to Amy Ogan's work on tutoring French culture.

- The interaction between the student and the tutor, which is "natural" in the control condition, is guided by the Help Tutor in the treatment condition. This is similar to the scripting manipulations of the Rummel Scripted Collaborative Problem Solving and the Walker A Peer Tutoring Addition projects.

- Another study of the effects of hints is Ringenberg's study, in which hints are compared to examples.

- Going to do an in-vivo study at a LearnLab site? Check out how to answer teachers' FAQs.

Further Information

Annotated bibliography

- Aleven, V., & Koedinger, K.R. (2000). Limitations of student control: Do students know when they need help? In Proceedings of the 5th International Conference on Intelligent Tutoring Systems, 292-303. Berlin: Springer-Verlag.

- Aleven, V., McLaren, B.M., Roll, I., & Koedinger, K.R. (2004). Toward tutoring help seeking: Applying cognitive modeling to meta-cognitive skills. In Proceedings of the 7th International Conference on Intelligent Tutoring Systems, 227-239. Berlin: Springer-Verlag.

- Aleven, V., Roll, I., McLaren, B.M., Ryu, E.J., & Koedinger, K.R. (2005). An architecture to combine meta-cognitive and cognitive tutoring: Pilot testing the Help Tutor. In Proceedings of the 12th International Conference on Artificial Intelligence in Education. Amsterdam, The Netherlands: IOS Press.

- Aleven, V., McLaren, B.M., Roll, I., & Koedinger, K.R. (2006). Toward meta-cognitive tutoring: A model of help seeking with a Cognitive Tutor. International Journal of Artificial Intelligence in Education, 16, 101-130.

- Baker, R.S., Corbett, A.T., & Koedinger, K.R. (2004). Detecting Student Misuse of Intelligent Tutoring Systems. In Proceedings of the 7th International Conference on Intelligent Tutoring Systems, 531-540.

- Baker, R.S., Roll, I., Corbett, A.T., & Koedinger, K.R. (2005). Do Performance Goals Lead Students to Game the System? In Proceedings of the 12th International Conference on Artificial Intelligence in Education, 57-64. Amsterdam, The Netherlands: IOS Press.

- Chang, K.K., Beck, J.E., Mostow, J., & Corbett, A. (2006). Does Help Help? A Bayes Net Approach to Modeling Tutor Interventions. In Proceedings of the Workshop on Educational Data Mining at AAAI 2006, 41-46. Menlo Park, CA: AAAI.

- Feldstein, M.S. (1964). The Social Time Preference Discount Rate in Cost-Benefit Analysis. The Economic Journal, 74(294), 360-379.

- Roll, I., Baker, R.S., Aleven, V., McLaren, B.M., & Koedinger, K.R. (2005). Modeling Students’ Metacognitive Errors in Two Intelligent Tutoring Systems. In L. Ardissono et al. (Eds.), Proceedings of User Modeling 2005, 379-388. Berlin: Springer-Verlag.

- Roll, I., Ryu, E., Sewall, J., Leber, B., McLaren, B.M., Aleven, V., & Koedinger, K.R. (2006). Towards Teaching Metacognition: Supporting Spontaneous Self-Assessment. In Proceedings of the 8th International Conference on Intelligent Tutoring Systems, 738-740. Berlin: Springer-Verlag.

- Roll, I., Aleven, V., McLaren, B.M., Ryu, E., Baker, R.S., & Koedinger, K.R. (2006). The Help Tutor: Does Metacognitive Feedback Improve Students' Help-Seeking Actions, Skills and Learning? In Proceedings of the 8th International Conference on Intelligent Tutoring Systems, 360-369. Berlin: Springer-Verlag.

- Roll, I., Aleven, V., McLaren, B.M., & Koedinger, K.R. (2007). Can help seeking be tutored? Searching for the secret sauce of metacognitive tutoring. In Proceedings of the International Conference on Artificial Intelligence in Education, 203-210.

- Roll, I., Aleven, V., McLaren, B.M., & Koedinger, K.R. (2007). Designing for metacognition: Applying cognitive tutor principles to the tutoring of help seeking. Metacognition and Learning, 2(2).

- Schworm, S., & Renkl, A. (2002). Learning by solved example problems: Instructional explanations reduce self-explanation activity. In Proceedings of the 24th Annual Conference of the Cognitive Science Society, 816-821. Mahwah, NJ: Erlbaum.

- Yudelson, M.V., Medvedeva, O., Legowski, E., Castine, M., Jukic, D., & Crowley, R.S. (2006). Mining Student Learning Data to Develop High Level Pedagogic Strategy in a Medical ITS. In Proceedings of the Workshop on Educational Data Mining at AAAI 2006. Menlo Park, CA: AAAI.

- Roll, I., Aleven, V., & Koedinger, K.R. (2004). Promoting Effective Help-Seeking Behavior through Declarative Instruction. In Proceedings of the 7th International Conference on Intelligent Tutoring Systems, 857-859. Berlin: Springer-Verlag.